How to Compute the Entropy of a Continuous Variable

Background

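Feature selection sometimes calls for the information entropy of a feature. That is easy for discrete features, but what about continuous ones? One option is to discretize the continuous variable by choosing threshold points, but that is tedious: you have to sort the values and compute the thresholds. Is there no automatic way?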
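For reference, here is a minimal sketch of what the manual discretization route could look like. It assumes equal-width bins rather than hand-picked thresholds, and the function name `binned_entropy` and the default of 30 bins are illustrative choices, not something from the sources below. Adding the log of the bin width to the discrete entropy gives a rough estimate of the continuous (differential) entropy.

```python
import numpy as np


def binned_entropy(x, bins=30):
    """Entropy (in nats) of x after equal-width binning.

    Returns (discrete entropy of the binned variable,
             rough differential-entropy estimate).
    `bins` is an arbitrary choice and the result depends on it.
    """
    counts, edges = np.histogram(np.ravel(x), bins=bins)
    p = counts[counts > 0] / counts.sum()   # empirical bin probabilities
    h_discrete = -np.sum(p * np.log(p))     # Shannon entropy of the bins
    bin_width = edges[1] - edges[0]         # all bins share this width
    # Discrete entropy + log(bin width) approximates differential entropy.
    return h_discrete, h_discrete + np.log(bin_width)


rng = np.random.default_rng(0)
sample = rng.normal(size=10000)
h_disc, h_diff = binned_entropy(sample)
print(f"binned entropy: {h_disc:.4f}, differential estimate: {h_diff:.4f}")
```

The discrete value changes with the number of bins, which is one reason a dedicated estimator for continuous data is more convenient.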

Searching for references

"How Can I Compute Information-Gain for Continuous-Valued Attributes" mentions:

If you wanted to find the entropy of a continuous variable, you could use Differential entropy metrics such as KL divergence,

So differential entropy can apparently be used to compute the entropy of a continuous variable directly?
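For orientation, the standard textbook definition of differential entropy is written out below. The symbols are notation introduced here, not taken from the quoted answer: f is the probability density of X, and X_Δ is X discretized into bins of width Δ. The second relation (which holds under mild regularity conditions on f) shows how the entropy of a binned variable relates to the differential entropy.

```latex
h(X) = -\int_{-\infty}^{\infty} f(x)\,\ln f(x)\,\mathrm{d}x
\qquad\qquad
H(X_\Delta) + \ln \Delta \;\longrightarrow\; h(X) \quad \text{as } \Delta \to 0
```

Unlike discrete entropy, h(X) can be negative, for example for a density concentrated on a very narrow interval.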

Digging further, "How to find entropy of Continuous variable in Python?" says:

For continuous distributions, you are better off using the Kozachenko-Leonenko k-nearest neighbour estimator for entropy (K & L 1987) and the corresponding Kraskov, …, Grassberger (2004) estimator for mutual information.
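To make the quoted suggestion more concrete, here is a rough sketch of a Kozachenko-Leonenko style k-nearest-neighbour entropy estimator. It is my own minimal version, assuming Euclidean distances and no duplicate points; the name `kl_entropy` is hypothetical, and this is not the code from any particular library.

```python
import numpy as np
from scipy.spatial import cKDTree
from scipy.special import digamma, gammaln


def kl_entropy(x, k=5):
    """Sketch of a k-NN entropy estimate in nats (Euclidean norm).

    Assumes all samples are distinct; duplicated points give a zero
    neighbour distance and therefore log(0).
    """
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]                      # treat 1-D input as n samples, d=1
    n, d = x.shape
    tree = cKDTree(x)
    # Ask for k+1 neighbours: the closest one is the point itself.
    dist, _ = tree.query(x, k=k + 1)
    r = dist[:, k]                          # distance to the k-th real neighbour
    # Log volume of the d-dimensional unit ball: pi^(d/2) / Gamma(d/2 + 1).
    log_unit_ball = (d / 2) * np.log(np.pi) - gammaln(d / 2 + 1)
    return digamma(n) - digamma(k) + log_unit_ball + d * np.mean(np.log(r))


rng = np.random.default_rng(0)
print(f"k-NN estimate: {kl_entropy(rng.normal(size=5000)):.4f}")
```

The choice of k trades bias against variance; k=5 here simply mirrors the value used in the comparison later in this post.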

That looked like a breakthrough, so I tracked down the author's GitHub repository.

The author's implementation

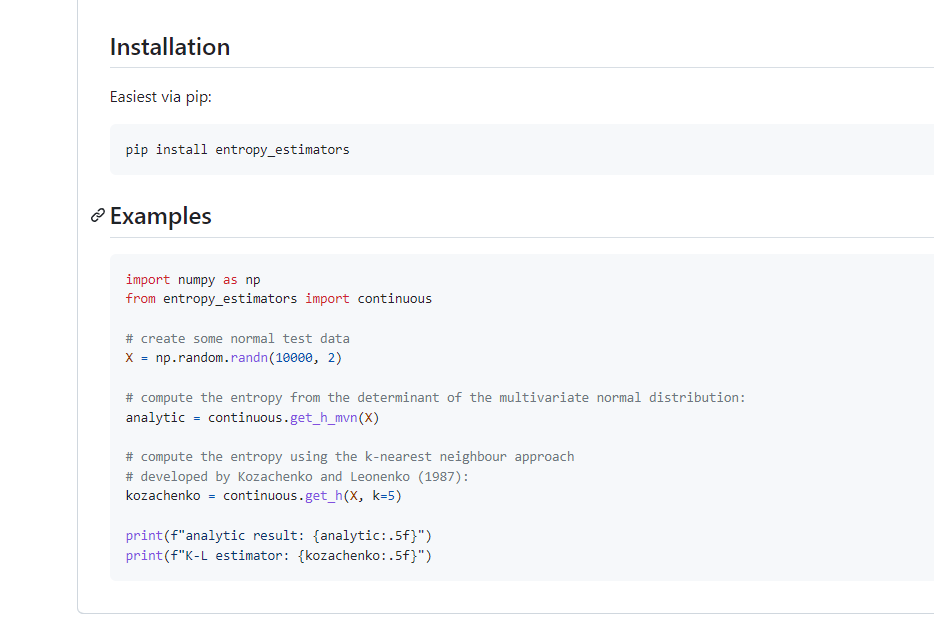

Entropy estimators

The repository turned out to be solid and solves my problem well: calling the library is all it takes.

Differential entropy?

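With some time to spare, I went on to read about differential entropy. Hmm, lots of math, which I did not feel like working through, so I looked for a Python implementation of differential entropy instead.

Then I realized that SciPy already ships one, so it is just a library call: scipy.stats.differential_entropy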

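from scipy.stats import differential_entropy

# x is the continuous feature vector: a 1-D array or a column vector
# (the entropy is computed along axis=0 by default; pass axis=1 for a 2-D row vector)
ent = differential_entropy(x)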
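A small self-contained check of how the input shape matters, assuming a standard normal sample generated here purely for illustration. Since `differential_entropy` works along `axis=0` by default, a 2-D row vector needs `axis=1`.

```python
import numpy as np
from scipy.stats import differential_entropy

rng = np.random.default_rng(0)
x = rng.normal(size=5000)                    # illustrative 1-D sample

h_flat = differential_entropy(x)                          # 1-D input
h_col = differential_entropy(x.reshape(-1, 1))            # column vector, axis=0
h_row = differential_entropy(x.reshape(1, -1), axis=1)    # row vector needs axis=1

print(float(h_flat), np.ravel(h_col)[0], np.ravel(h_row)[0])
```

All three calls see the same 5000 values along the chosen axis, so they should agree with each other.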

Comparing the two

import numpy as np
from scipy.stats import differential_entropy
from entropy_estimators import continuous

# Generate the data
X = np.random.randn(10000, 1)

# compute the entropy from the determinant of the multivariate normal distribution:
analytic = continuous.get_h_mvn(X)

# compute the entropy using the k-nearest neighbour approach
# developed by Kozachenko and Leonenko (1987):
kozachenko = continuous.get_h(X, k=5)

# compute the entropy using SciPy's differential_entropy
SCI = differential_entropy(X)

print(f"analytic result: {analytic:.5f}")
print(f"K-L estimator: {kozachenko:.5f}")
print(f"SCI result: {SCI[0]:.5f}")

Output:

analytic result: 1.42191

K-L estimator: 1.42436

SCI result: 1.42043

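The results barely differ, so any of them will do. The largest gap between any two of the three values is about 0.004 (well under 0.01), which I can live with. If you want the most precise estimate, the SciPy result is probably the safer choice: in this run it is slightly closer to the analytic value, and it comes from a widely used, well-known library.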
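As a sanity check on the "analytic" row, the entropy of a Gaussian has a closed form based on the determinant of its covariance matrix. The sketch below is my own reading of that formula applied to the sample covariance; `gaussian_entropy` is a hypothetical helper, and I am only assuming that the library's `get_h_mvn` does something along these lines.

```python
import numpy as np


def gaussian_entropy(x):
    """Entropy (nats) of a Gaussian fitted to the samples in x.

    Uses h = 0.5 * (d * ln(2*pi*e) + ln det(cov)), with cov estimated
    from the data, so the result varies slightly from sample to sample.
    """
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]                       # n samples of a 1-D variable
    d = x.shape[1]
    cov = np.atleast_2d(np.cov(x, rowvar=False))
    _, logdet = np.linalg.slogdet(cov)       # stable log-determinant
    return 0.5 * (d * np.log(2 * np.pi * np.e) + logdet)


# Exact value for a standard normal (variance 1): 0.5 * ln(2*pi*e), about 1.41894
print(f"exact: {0.5 * np.log(2 * np.pi * np.e):.5f}")

rng = np.random.default_rng(0)
print(f"from sample covariance: {gaussian_entropy(rng.normal(size=(10000, 1))):.5f}")
```

Because the covariance is estimated from the data, this value lands near but not exactly on 1.41894, which is consistent with the analytic result of 1.42191 reported above.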