Connecting Spark to Hive

Contents

Copy the JARs

Modify the configuration files

Edit hive-site.xml

Start Hadoop

Start Hive

Start Spark

View a table

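Copy the JARs

To connect Spark to Hive you need six key JAR files, and you must copy Hive's configuration file, hive-site.xml, into Spark's conf directory. If your Hive installation is configured correctly, all of these JARs are already present in the Hive directory.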

Copy the JARs into /opt/soft/spark312/jars/

[root@hadoop3 jars]# cp /opt/soft/hive312/lib/hive-beeline-3.1.2.jar ./

[root@hadoop3 jars]# cp /opt/soft/hive312/lib/hive-cli-3.1.2.jar ./

[root@hadoop3 jars]# cp /opt/soft/hive312/lib/hive-exec-3.1.2.jar ./

[root@hadoop3 jars]# cp /opt/soft/hive312/lib/hive-jdbc-3.1.2.jar ./

[root@hadoop3 jars]# cp /opt/soft/hive312/lib/hive-metastore-3.1.2.jar ./

[root@hadoop3 jars]# cp /opt/soft/hive312/lib/mysql-connector-java-8.0.25.jar ./
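The six copy commands above can also be wrapped in a small loop that reports any JAR that is missing from the Hive side. This is only a sketch: `copy_hive_jars` is a hypothetical helper name, not something shipped with Hive or Spark, and the JAR file names are simply the ones used in this article.

```shell
# Hypothetical helper (not part of Hive or Spark): copy the six JARs listed
# above from a Hive lib directory ($1) into a Spark jars directory ($2).
# Returns non-zero if any of the JARs could not be found.
copy_hive_jars() {
    local src="$1" dst="$2" jar missing=0
    for jar in \
        hive-beeline-3.1.2.jar \
        hive-cli-3.1.2.jar \
        hive-exec-3.1.2.jar \
        hive-jdbc-3.1.2.jar \
        hive-metastore-3.1.2.jar \
        mysql-connector-java-8.0.25.jar
    do
        if [ -f "$src/$jar" ]; then
            cp "$src/$jar" "$dst/"
        else
            echo "missing: $src/$jar" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example call with the paths used in this article:
# copy_hive_jars /opt/soft/hive312/lib /opt/soft/spark312/jars
```

If your Hive or MySQL connector versions differ, adjust the file names in the list accordingly.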

Modify the configuration files

Change to the Spark conf directory

[root@hadoop3 /]# cd opt/soft/spark312/conf/

Copy hive-site.xml from hive312/conf into the spark312/conf directory

[root@hadoop3 conf]# cp /opt/soft/hive312/conf/hive-site.xml ./

Edit hive-site.xml

Compare your file with the one below and add any properties that are missing.

<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/opt/soft/hive312/warehouse</value>
  </property>
  <property>
    <name>hive.metastore.db.type</name>
    <value>mysql</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://192.168.152.184:3306/hiveone?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.cj.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
    <description>Disable schema verification</description>
  </property>
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
    <description>Show the current database name in the prompt</description>
  </property>
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
    <description>Print column names along with query output</description>
  </property>
  <property>
    <name>hive.zookeeper.quorum</name>
    <value>192.168.152.192</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>192.168.152.192</value>
  </property>
  <property>
    <name>hive.aux.jars.path</name>
    <value>file:///opt/soft/hive312/lib/hive-hbase-handler-3.1.2.jar,file:///opt/soft/hive312/lib/zookeeper-3.4.6.jar,file:///opt/soft/hive312/lib/hbase-client-2.3.5.jar,file:///opt/soft/hive312/lib/hbase-common-2.3.5-tests.jar,file:///opt/soft/hive312/lib/hbase-server-2.3.5.jar,file:///opt/soft/hive312/lib/hbase-common-2.3.5.jar,file:///opt/soft/hive312/lib/hbase-protocol-2.3.5.jar,file:///opt/soft/hive312/lib/htrace-core-3.2.0-incubating.jar</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hadoop.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hdfs.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://192.168.152.192:9083</value>
  </property>
</configuration>

Configuration is complete. Next, test the setup.
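Before testing, the step of checking which properties are missing can be partly automated. The sketch below assumes a hypothetical helper named `missing_hive_props`. It does a plain text search for `<name>...</name>` entries with grep rather than a real XML parse, so treat its output as a hint only.

```shell
# Hypothetical helper: print every property name (arguments 2..n) that does
# not appear as <name>...</name> in the file given as argument 1.
# Returns non-zero if at least one property is missing.
missing_hive_props() {
    local file="$1" prop rc=0
    shift
    for prop in "$@"; do
        if ! grep -qF "<name>$prop</name>" "$file"; then
            echo "$prop"
            rc=1
        fi
    done
    return "$rc"
}

# Example call with the path and two of the property names used in this article:
# missing_hive_props /opt/soft/spark312/conf/hive-site.xml \
#     hive.metastore.uris javax.jdo.option.ConnectionURL
```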

Start Hadoop

[root@gree2 ~]# start-all.sh

Start Hive

nohup hive --service metastore &

nohup hive --service hiveserver2 &

beeline -u jdbc:hive2://192.168.152.192:10000
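Because both services are started in the background with nohup, beeline may fail to connect if HiveServer2 has not finished starting. One possible workaround is to poll the ports first: 9083 for the metastore (from hive.metastore.uris) and 10000 for HiveServer2 (from the beeline URL). `wait_for_port` below is a hypothetical helper. It relies on bash's `/dev/tcp` redirection, so it assumes bash rather than a plain POSIX sh.

```shell
#!/usr/bin/env bash
# Hypothetical helper: try to open a TCP connection to host $1, port $2,
# up to $3 times (default 30), sleeping one second after each failed attempt.
# Uses bash's /dev/tcp pseudo-device; returns 0 as soon as a connection succeeds.
wait_for_port() {
    local host="$1" port="$2" tries="${3:-30}" i=0
    while [ "$i" -lt "$tries" ]; do
        # The subshell opens the socket and closes it again when it exits.
        if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}

# Example call with the address used in this article:
# wait_for_port 192.168.152.192 9083 && wait_for_port 192.168.152.192 10000 \
#     && beeline -u jdbc:hive2://192.168.152.192:10000
```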

Start Spark

spark-shell

Test

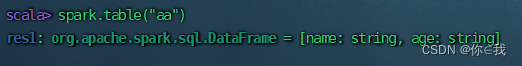

View a table

scala> spark.table("aa")

Here, aa is a table in default, Hive's default database.

Using spark.sql

scala> spark.sql("use default")

scala> spark.sql("select * from aa")