Natural Language Processing (7): Deep Learning for NLP: Recurrent Networks

Contents

1. N-gram Language Models

2. Recurrent Neural Networks

2.1 RNN Unrolled

2.2 RNN Training

2.3 (Simple) RNN for Language Model

2.4 RNN Language Model: Training

2.5 RNN Language Model: Generation

3. Long Short-term Memory Networks

3.1 Language Model… Solved?

3.2 Long Short-term Memory (LSTM)

3.3 Gating Vector

3.4 Simple RNN vs. LSTM

3.5 LSTM: Forget Gate

3.6 LSTM: Input Gate

3.7 LSTM: Update Memory Cell

3.8 LSTM: Output Gate

3.9 LSTM: Summary

4. Applications

4.1 Shakespeare Generator

4.2 Wikipedia Generator

4.3 Code Generator

4.4 Deep-Speare

4.5 Text Classification

4.6 Sequence Labeling

4.7 Variants

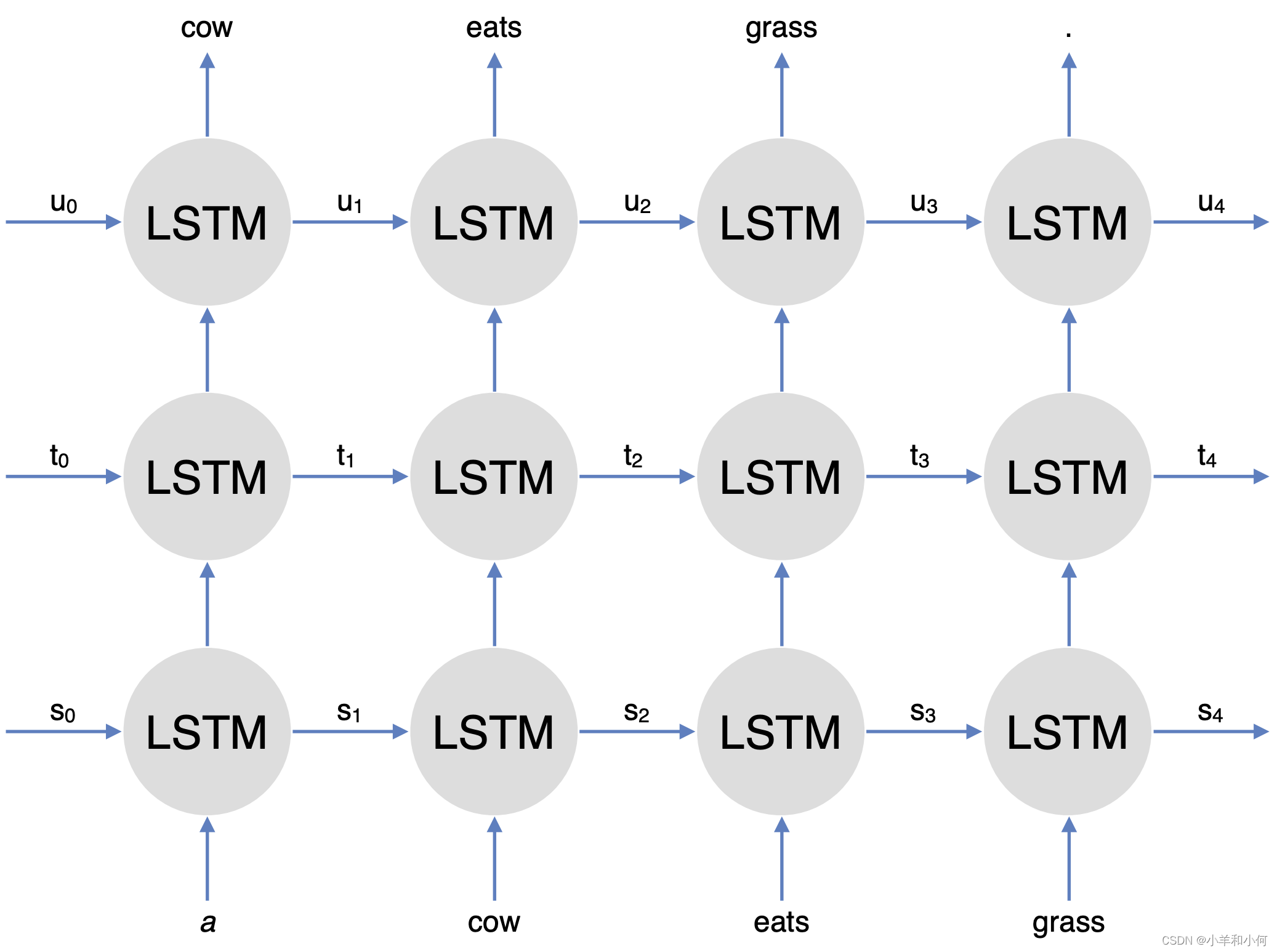

4.8 Multi-layer LSTM

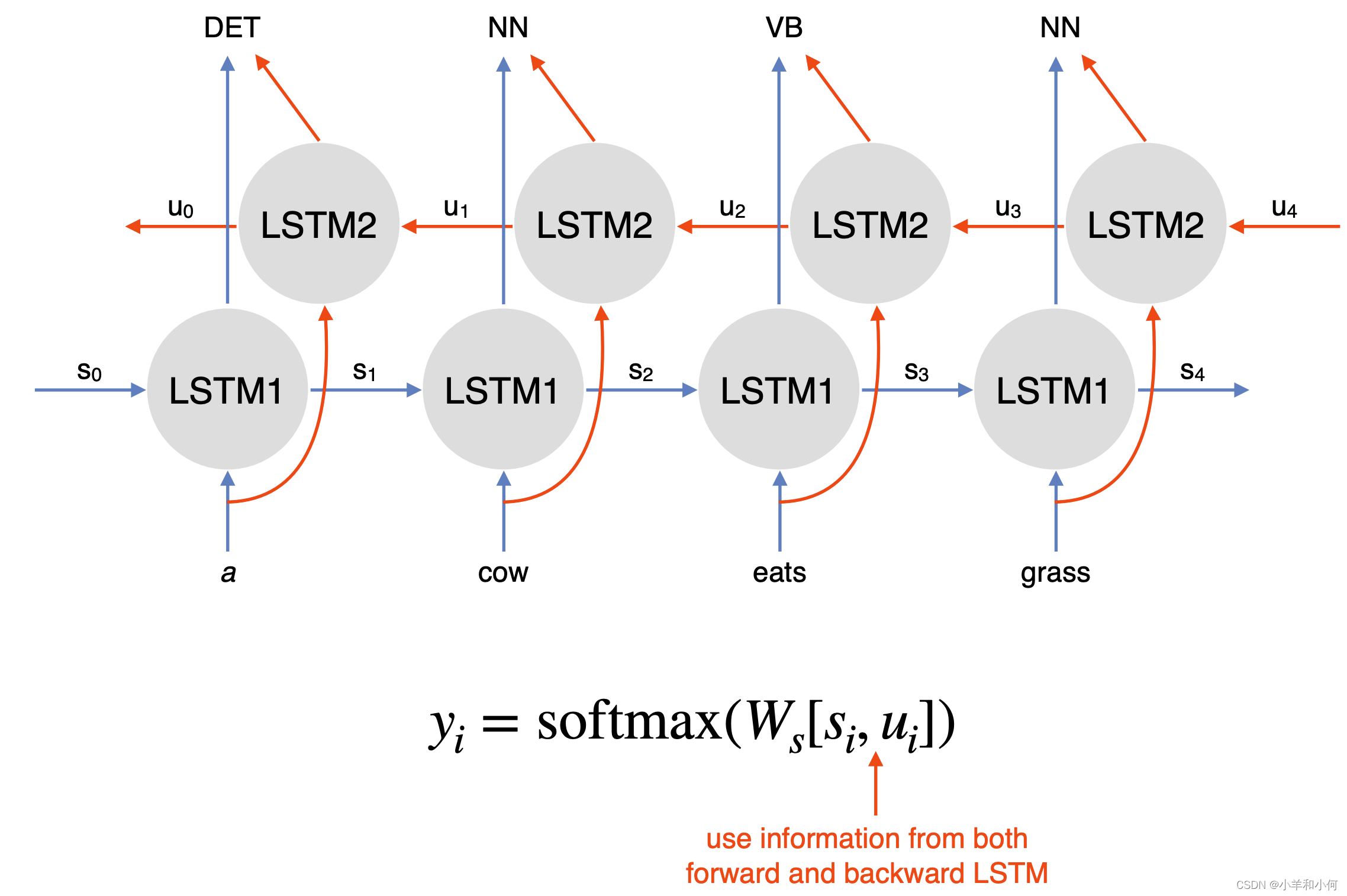

4.9 Bidirectional LSTM

5. Final Words

1. N-gram Language Models

Can be implemented using counts (with smoothing)

Can be implemented using feed-forward neural networks

Generates sentences like (trigram model):

- I saw a table is round and about

- I saw a

- I saw a table

- I saw a table is

- I saw a table is round

- I saw a table is round and

- Problem: limited context
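The count-based variant can be sketched in a few lines. This is a minimal illustration, assuming add-k smoothing and a toy two-sentence corpus; the function names `train_trigram` and `trigram_prob`, the padding tokens, and the value of `k` are all hypothetical choices, not taken from the notes. It also makes the limited-context problem visible: the prediction only ever sees the two preceding words.

```python
from collections import Counter

def train_trigram(sentences):
    """Count trigrams and their two-word contexts over tokenized sentences."""
    tri, bi, vocab = Counter(), Counter(), set()
    for tokens in sentences:
        # Pad so the first real word also has a two-word context.
        padded = ["<s>", "<s>"] + tokens + ["</s>"]
        vocab.update(padded)
        for i in range(2, len(padded)):
            context = (padded[i - 2], padded[i - 1])
            tri[context + (padded[i],)] += 1
            bi[context] += 1
    return tri, bi, vocab

def trigram_prob(word, context, tri, bi, vocab, k=1.0):
    """Add-k smoothed P(word | context); context is the previous two words."""
    return (tri[context + (word,)] + k) / (bi[context] + k * len(vocab))

# Toy corpus (assumption, for illustration only).
corpus = [["i", "saw", "a", "table"], ["i", "saw", "a", "cat"]]
tri, bi, vocab = train_trigram(corpus)

p_seen = trigram_prob("table", ("saw", "a"), tri, bi, vocab)
p_unseen = trigram_prob("saw", ("saw", "a"), tri, bi, vocab)
```

Smoothing gives the unseen continuation a small non-zero probability, while anything earlier than the two-word context has no influence on the estimate.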

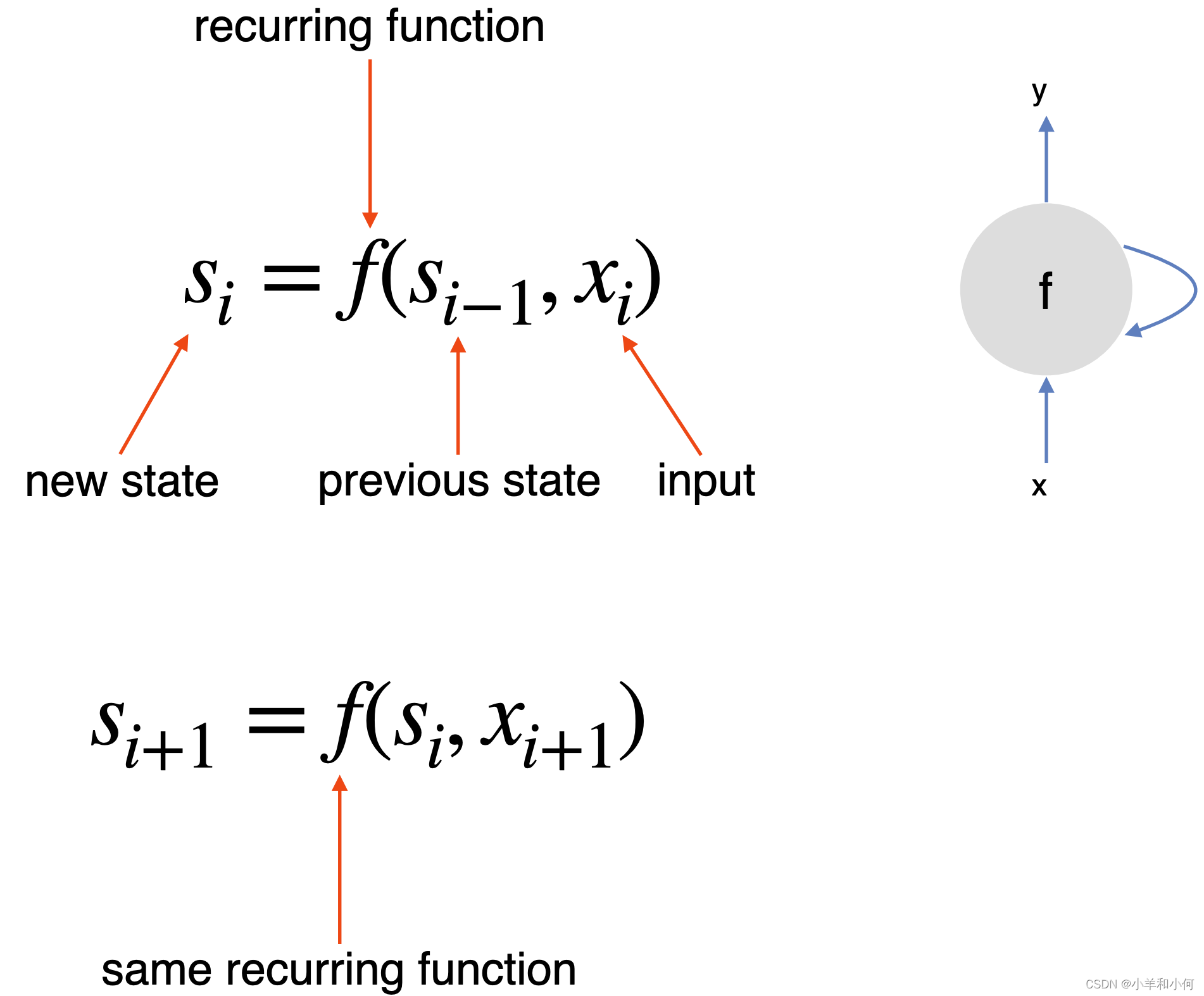

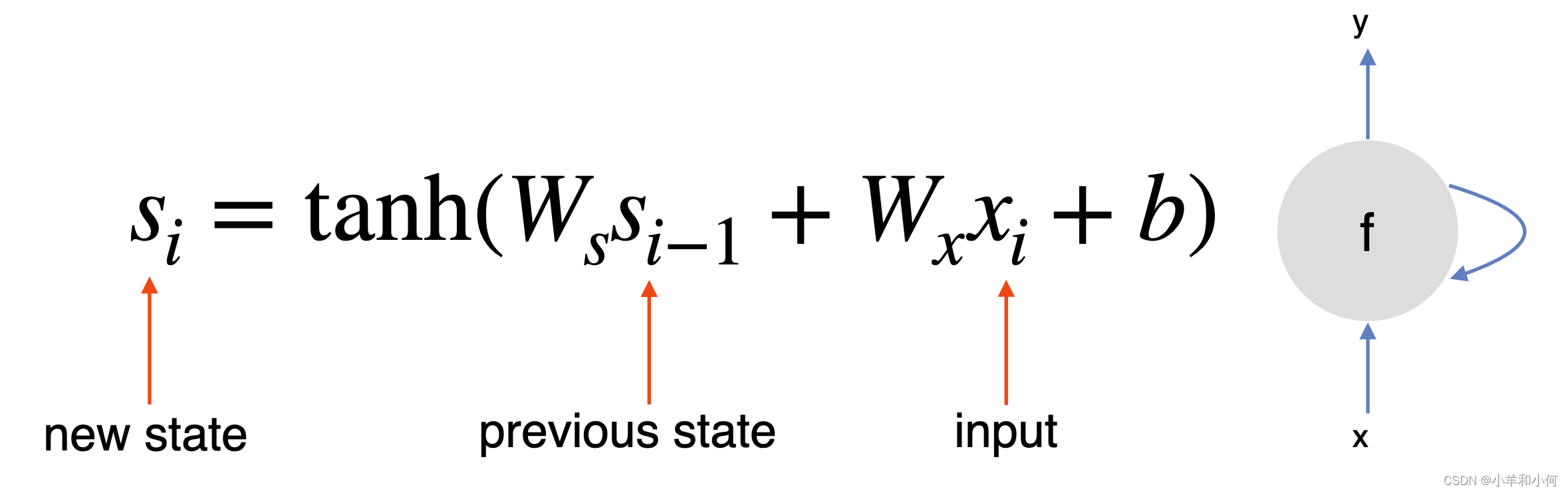

2. Recurrent Neural Networks

Allow representation of arbitrarily sized inputs

Core idea: processes the input sequence one element at a time, by applying a recurrence formula

Uses a state vector to represent contexts that have been previously processed
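A minimal sketch of such a recurrence, assuming the common simple-RNN form s_t = tanh(W_s s_{t-1} + W_x x_t + b). The names `rnn_step`, `rnn_forward`, `W_s`, `W_x`, and `b`, as well as the dimensions, are illustrative assumptions rather than the notation of the notes.

```python
import numpy as np

def rnn_step(s_prev, x, W_s, W_x, b):
    """One application of the recurrence: new state from old state and input."""
    return np.tanh(W_s @ s_prev + W_x @ x + b)

def rnn_forward(xs, W_s, W_x, b):
    """Process a sequence of any length, one element at a time."""
    s = np.zeros(W_s.shape[0])  # initial state vector
    states = []
    for x in xs:
        s = rnn_step(s, x, W_s, W_x, b)  # same parameters at every step
        states.append(s)
    return states

rng = np.random.default_rng(0)
hidden, emb = 4, 3  # hypothetical sizes
W_s = rng.normal(scale=0.5, size=(hidden, hidden))
W_x = rng.normal(scale=0.5, size=(hidden, emb))
b = np.zeros(hidden)

# The same function handles inputs of different lengths.
short_run = rnn_forward(rng.normal(size=(2, emb)), W_s, W_x, b)
long_run = rnn_forward(rng.normal(size=(10, emb)), W_s, W_x, b)
```

The state vector has a fixed size regardless of how many inputs have been consumed, which is what lets the network accept arbitrarily long sequences.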

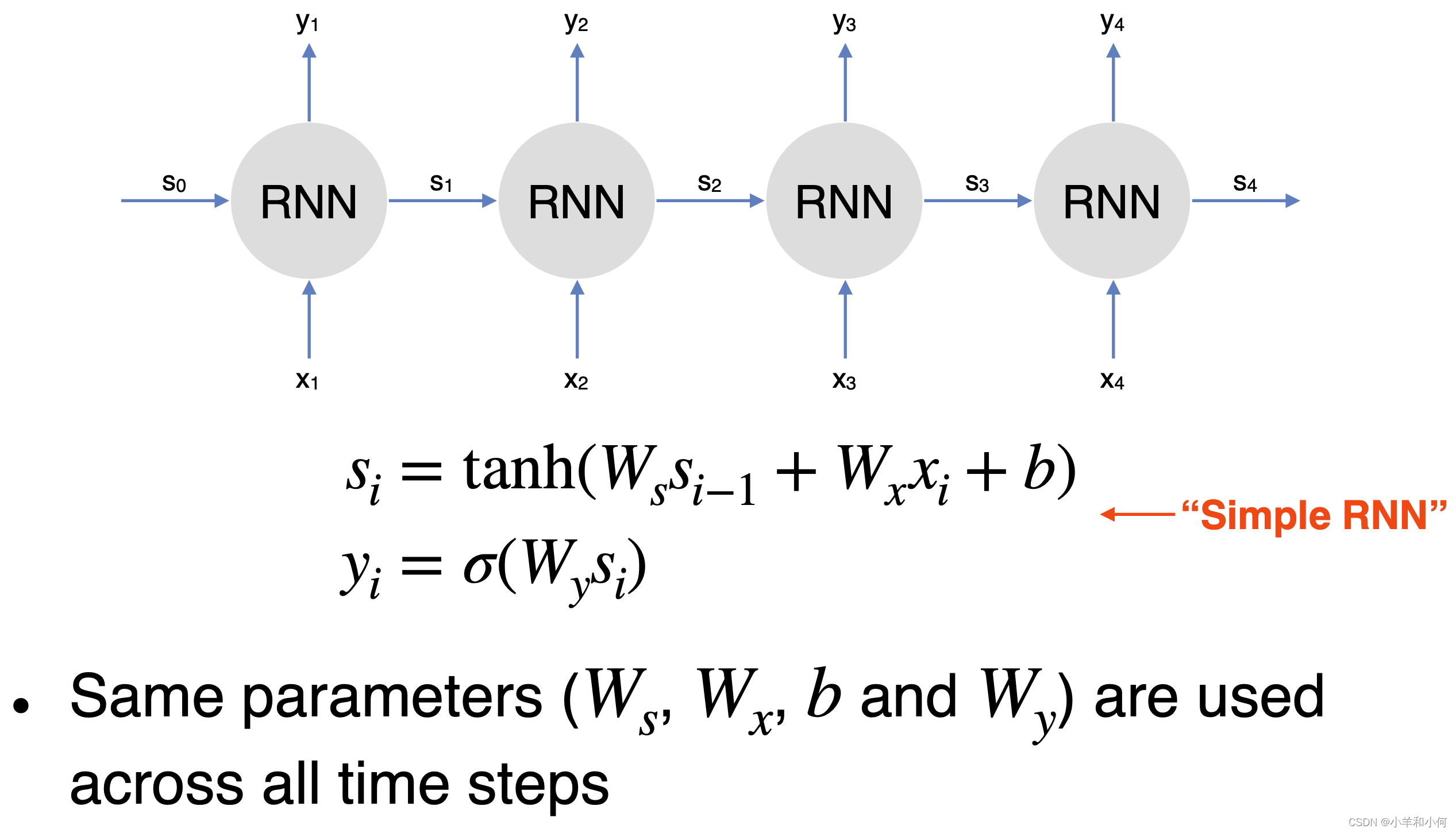

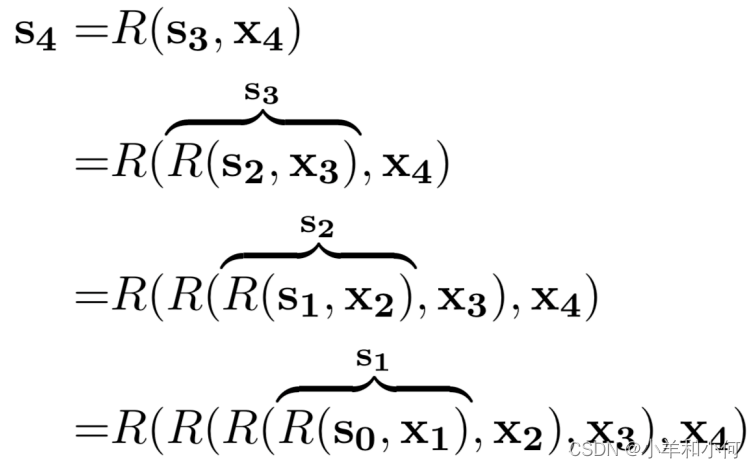

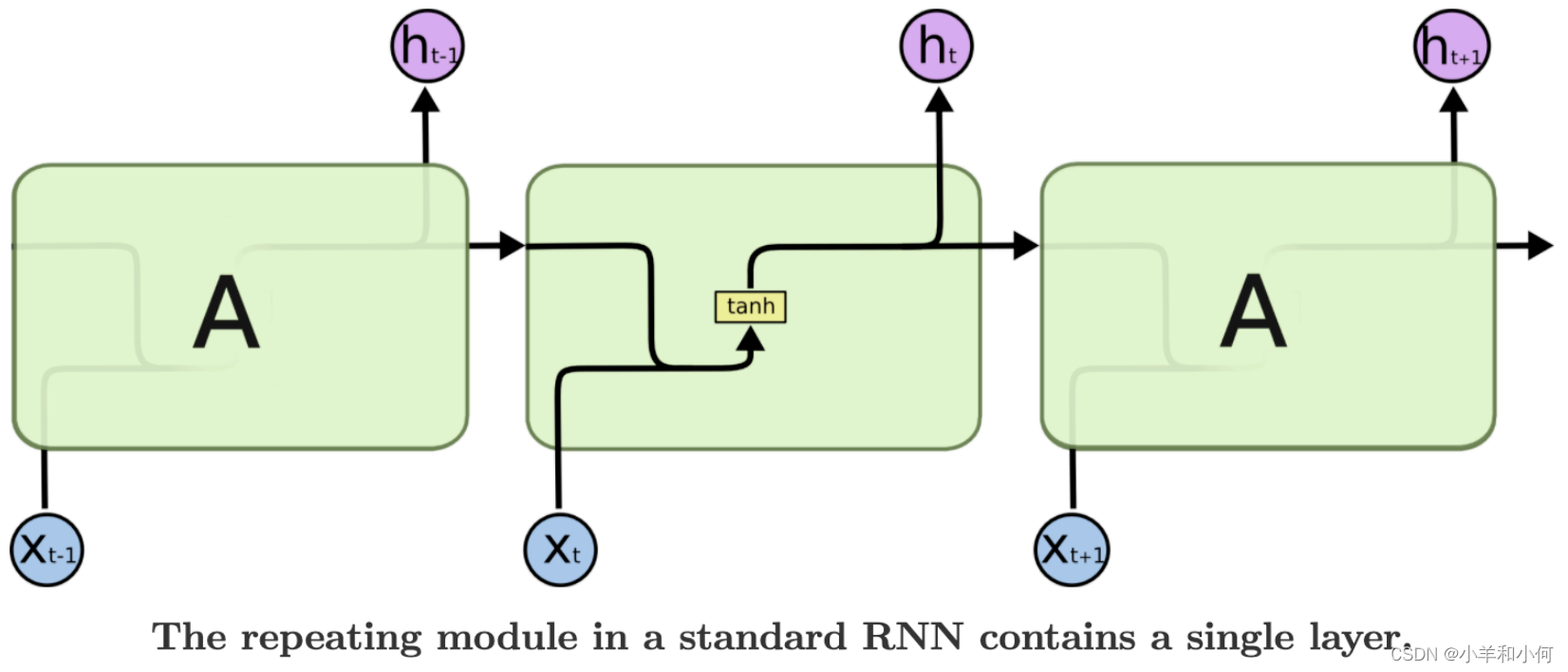

2.1 RNN Unrolled

2.2 RNN Training

An unrolled RNN is just a very deep neural network

But parameters are shared across all time steps

To train an RNN, we just need to create the unrolled computation graph for a given input sequence

And use the backpropagation algorithm to compute gradients as usual

This procedure is called backpropagation through time
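Because the parameters are shared, the gradient for a recurrent weight matrix collects a contribution from every time step. As a sketch, assuming a state recurrence s_t = tanh(W_s s_{t-1} + W_x x_t + b) and a total loss L that sums per-step losses L_t (these symbol names are assumptions, not taken from the notes), the gradient can be written as:

```latex
\frac{\partial L}{\partial W_s}
  = \sum_{t=1}^{T} \sum_{k=1}^{t}
    \frac{\partial L_t}{\partial s_t}
    \left( \prod_{j=k+1}^{t} \frac{\partial s_j}{\partial s_{j-1}} \right)
    \frac{\partial^{+} s_k}{\partial W_s}
```

Here \partial^{+} s_k / \partial W_s denotes the immediate derivative that treats s_{k-1} as a constant. The product of step-to-step Jacobians in the middle is what backpropagation computes when it walks the unrolled graph backwards.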

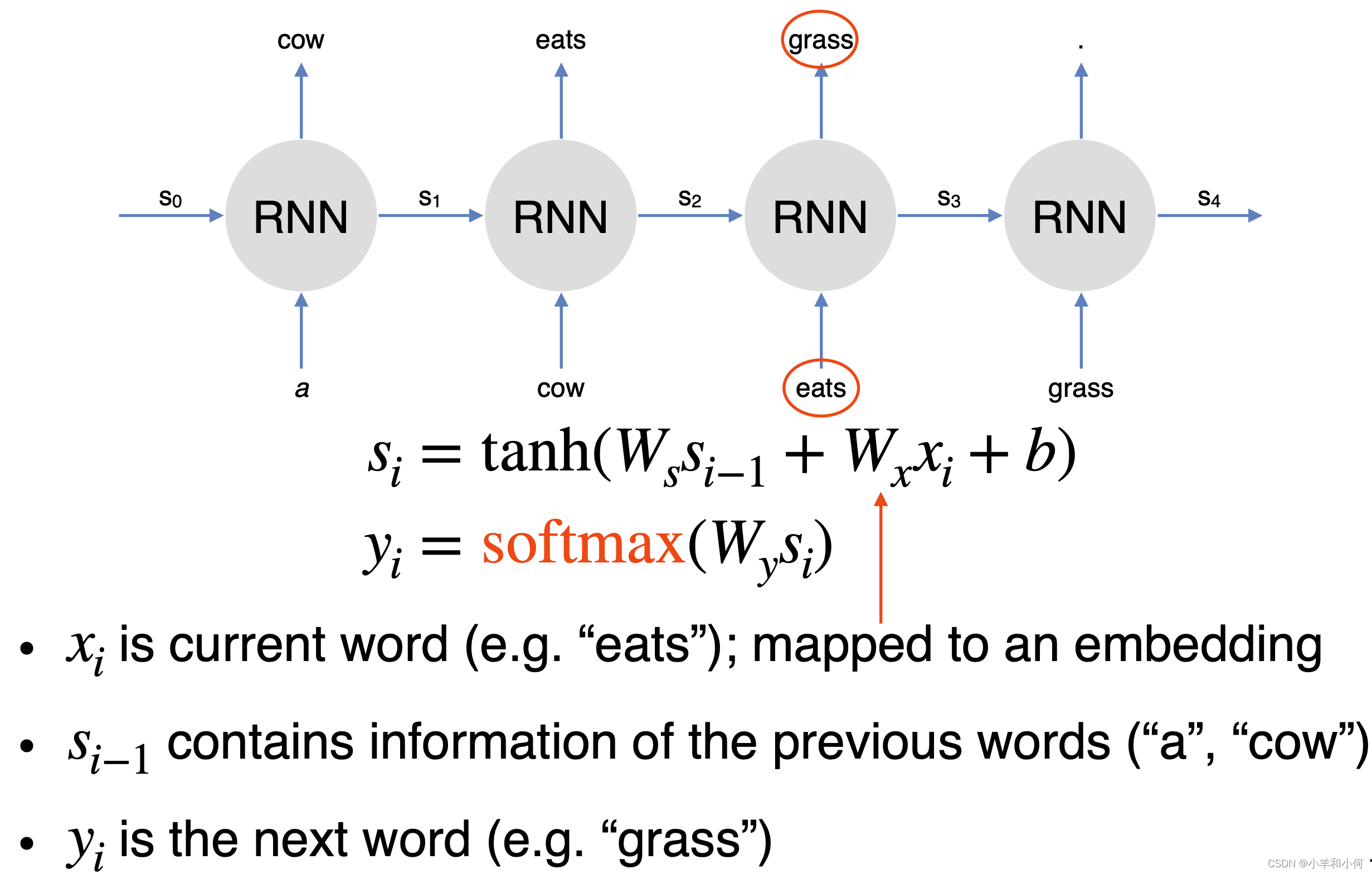

2.3 (Simple) RNN for Language Model

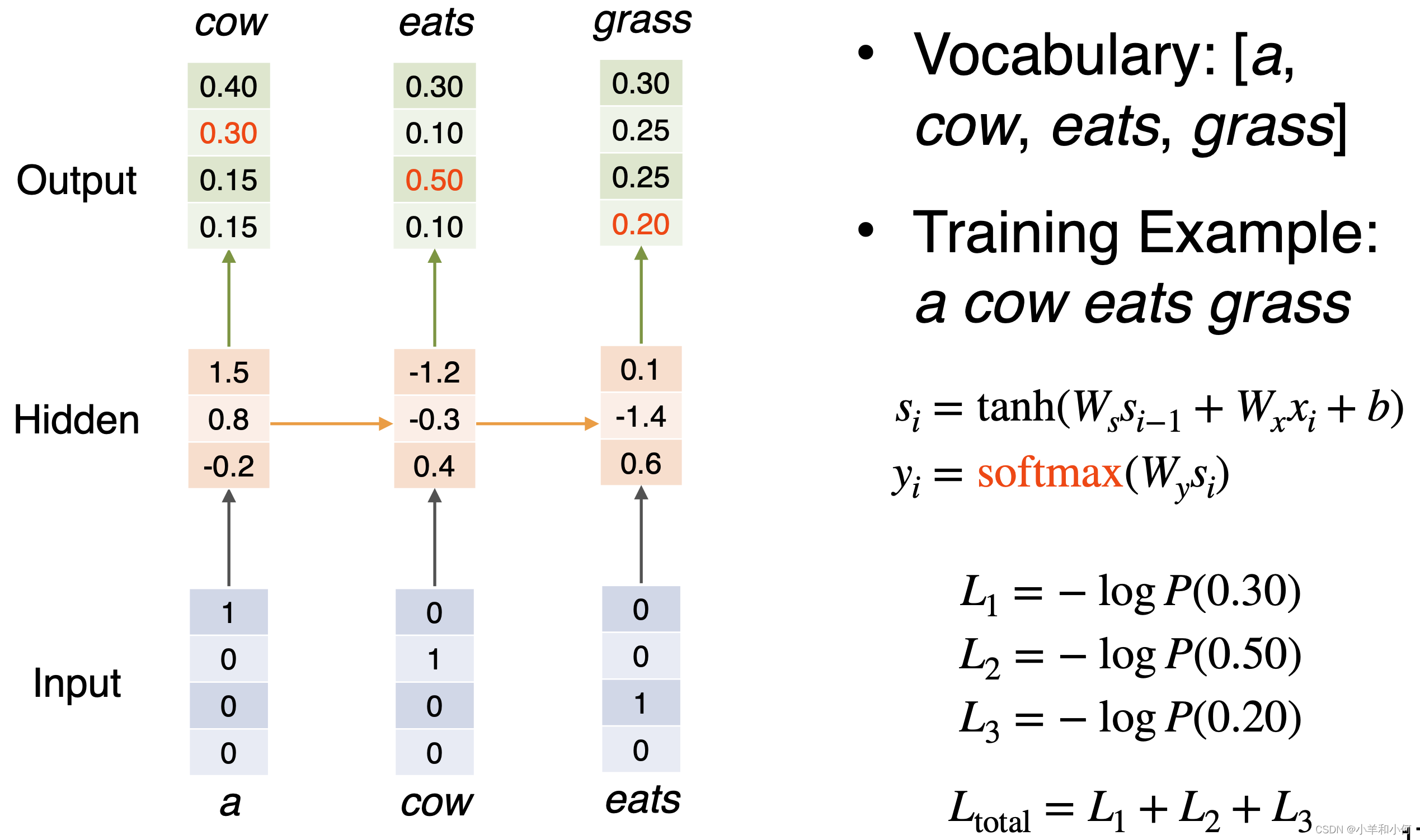

2.4 RNN Language Model: Training

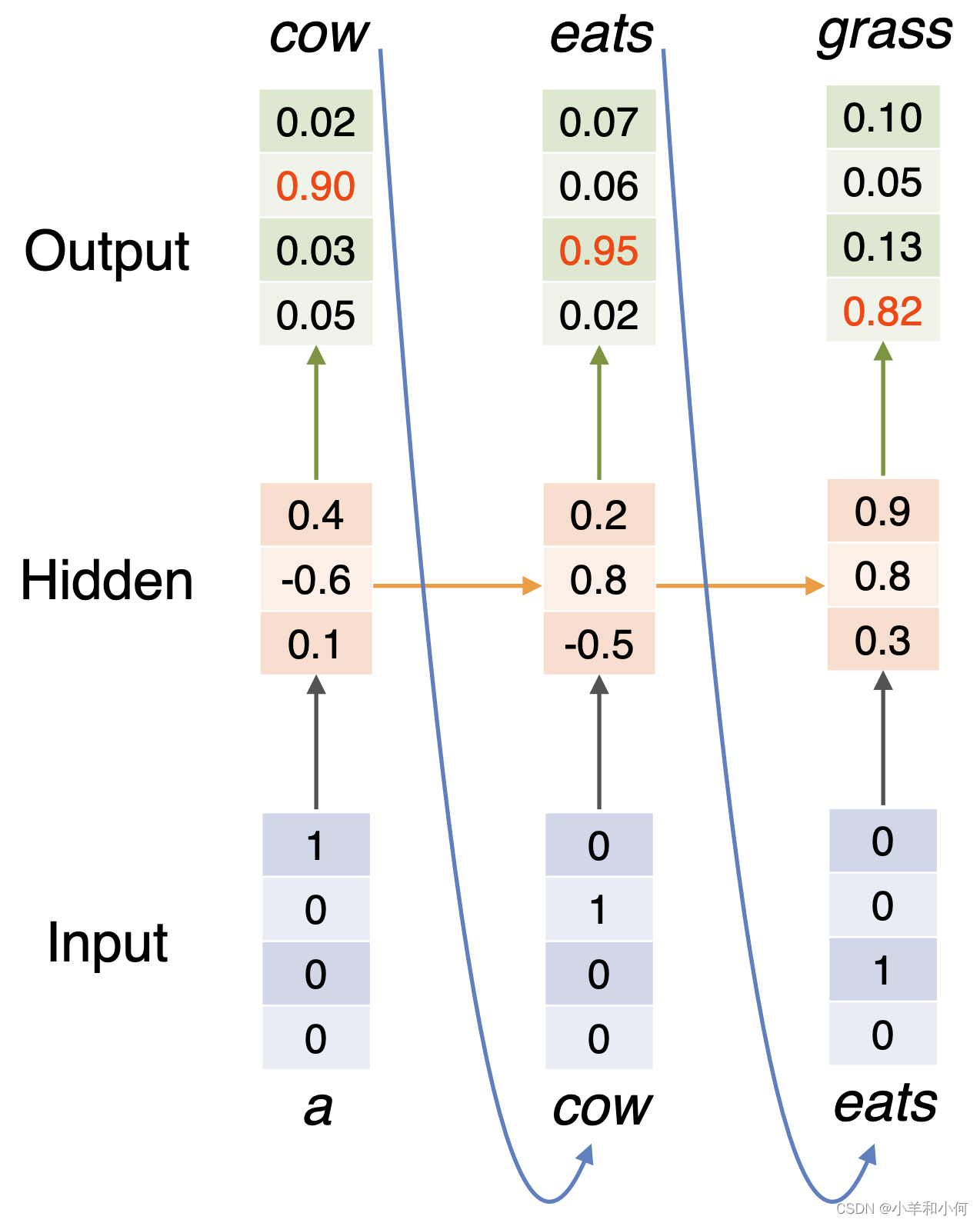

2.5 RNN Language Model: Generation

3. Long Short-term Memory Networks

3.1 Language Model… Solved?

In principle, an RNN can model unbounded context

But can it actually capture long-range dependencies in practice?

No… due to “vanishing gradients”

Gradients from later steps diminish quickly as they are backpropagated to earlier steps

Earlier inputs do not get much update
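The effect can be illustrated numerically. The sketch below assumes a tanh simple RNN with small random recurrent weights (the scale `0.05`, the hidden size, and the random stand-in for the input term are all assumptions for illustration) and tracks the norm of the Jacobian of the current state with respect to the initial state as the sequence grows.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden = 16
# Small recurrent weights (assumption); with large weights the same product
# can explode instead of vanish.
W_s = rng.normal(scale=0.05, size=(hidden, hidden))

s = np.zeros(hidden)
jacobian_product = np.eye(hidden)  # d s_0 / d s_0
norms = []
for t in range(30):
    input_term = rng.normal(size=hidden)  # stands in for W_x @ x_t + b
    s = np.tanh(W_s @ s + input_term)
    # Jacobian of one step: d s_t / d s_{t-1} = diag(1 - s_t^2) @ W_s
    step_jacobian = (1.0 - s ** 2)[:, None] * W_s
    # Chain rule: accumulate d s_t / d s_0
    jacobian_product = step_jacobian @ jacobian_product
    norms.append(np.linalg.norm(jacobian_product))
```

Under these assumptions the entries of `norms` shrink roughly geometrically, so a loss at step 30 barely influences what the network learns about the earliest inputs.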

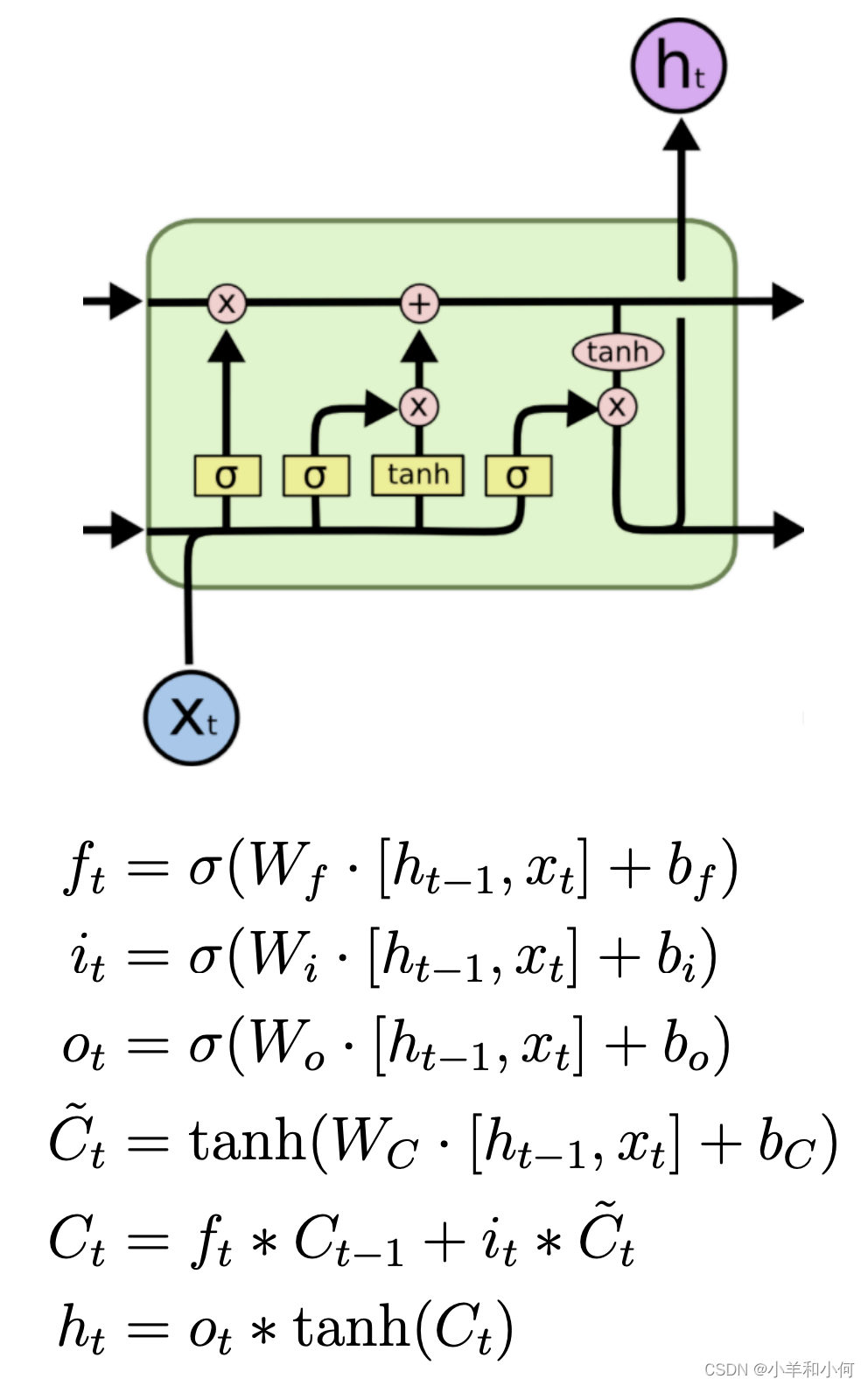

3.2 Long Short-term Memory (LSTM)

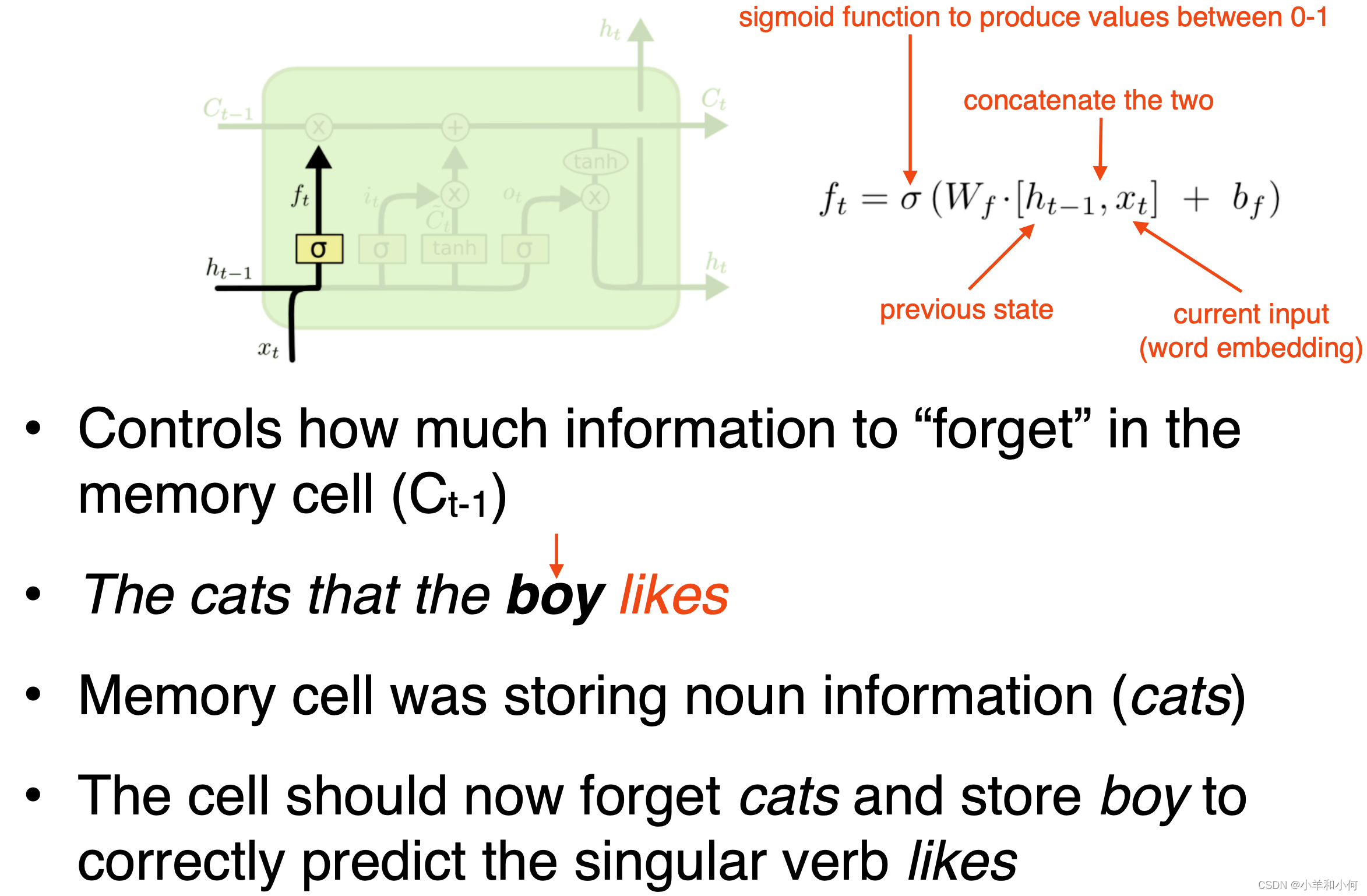

LSTM was introduced to address the vanishing gradient problem

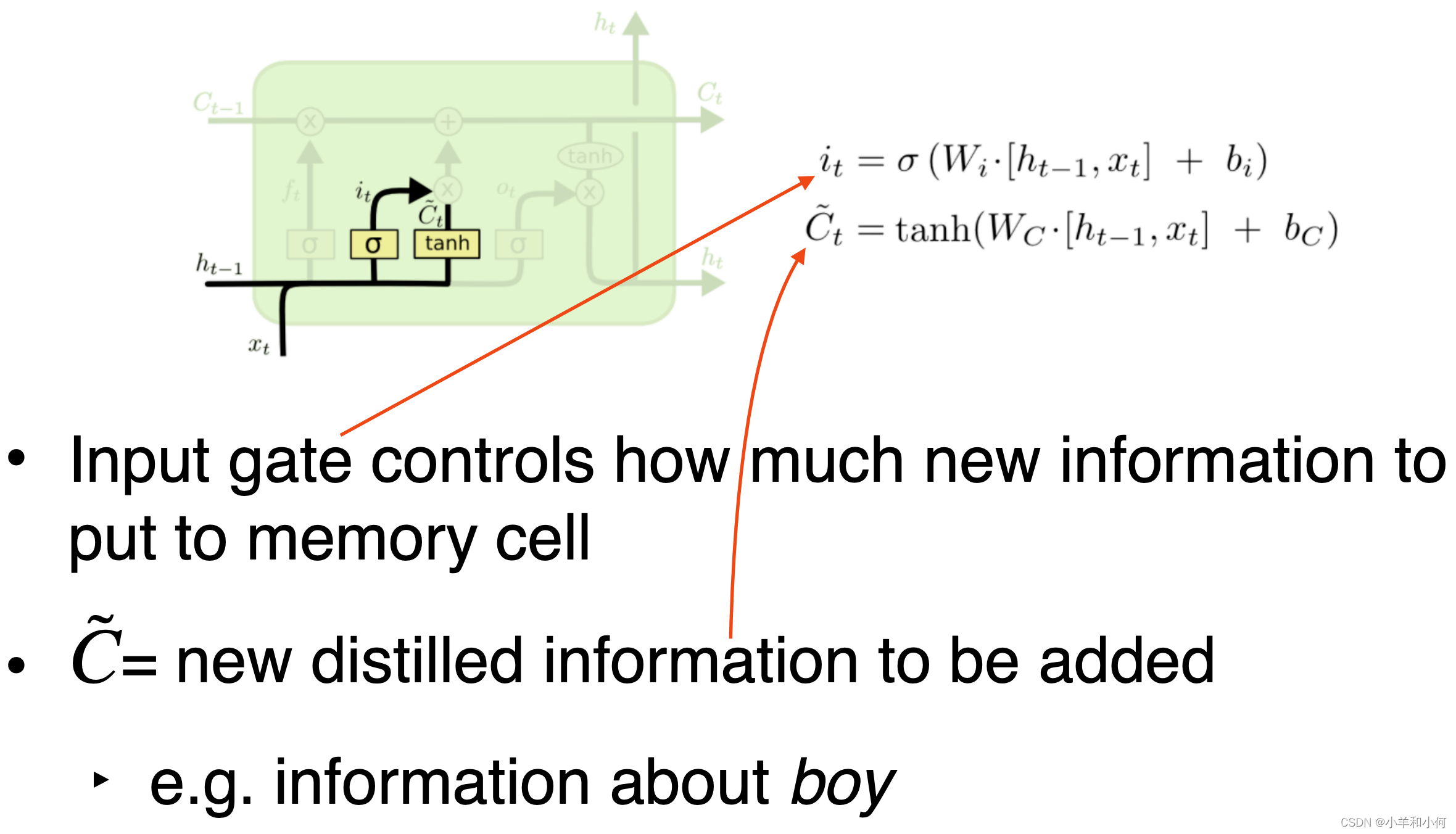

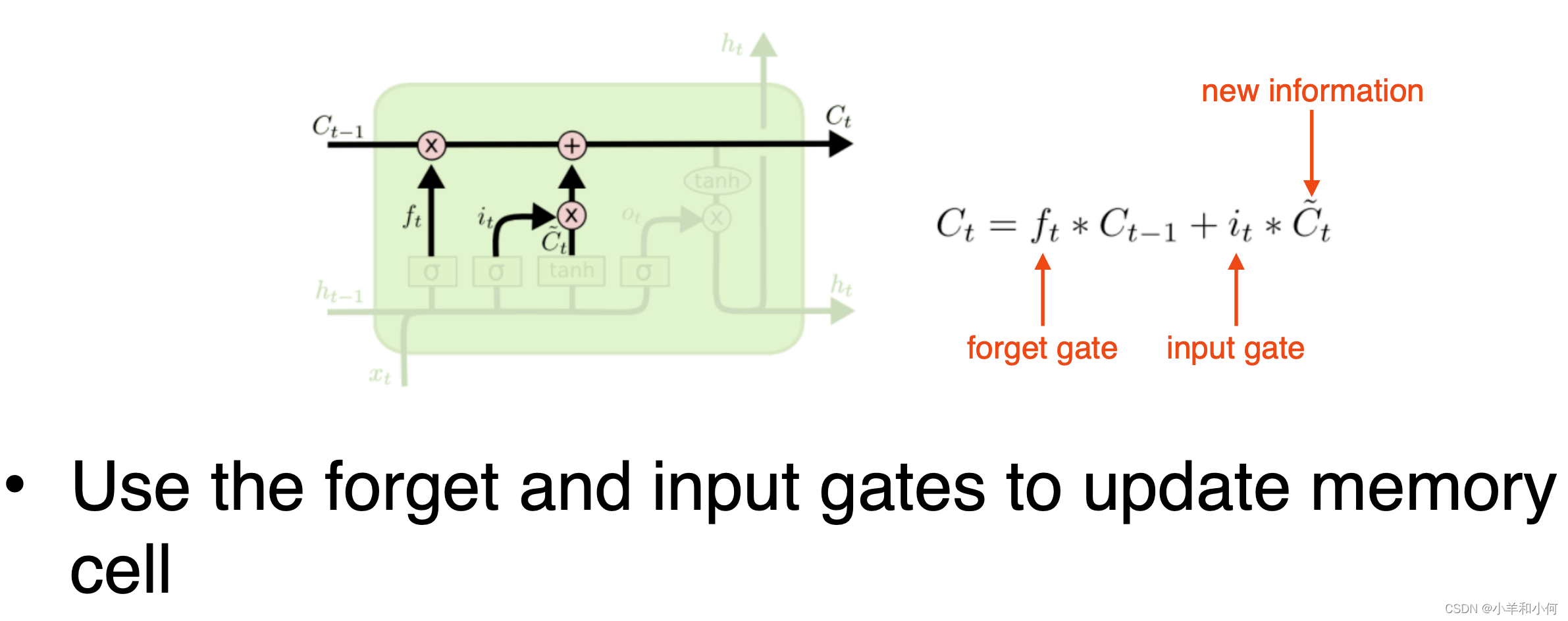

Core idea: have "memory cells" that preserve gradients across time

Access to the memory cells is controlled by "gates"

For each input, a gate decides:

- how much of the new input should be written to the memory cell

- and how much of the current memory cell's content should be forgotten

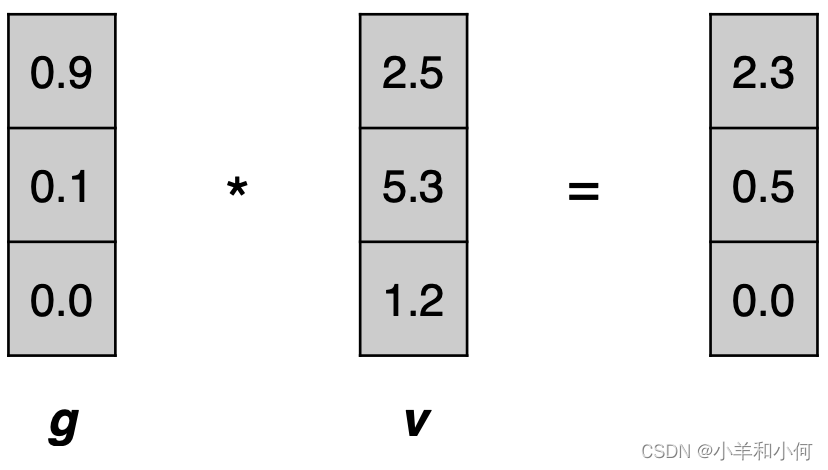

3.3 Gating Vector

A gate g is a vector

- each element has a value between 0 and 1

g is multiplied component-wise with a vector v, to determine how much of the information in v to keep

Use the sigmoid function to produce g:

- values between 0 and 1
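A small sketch of gating, with made-up numbers: the pre-activation vector `z` and the vector `v` below are hypothetical values chosen so that one component is almost fully kept, one is halved, and one is almost fully suppressed.

```python
import numpy as np

def sigmoid(z):
    """Squash each element into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

v = np.array([2.0, -1.0, 0.5])    # information to be gated
z = np.array([10.0, 0.0, -10.0])  # hypothetical gate pre-activations
g = sigmoid(z)                    # gate: close to 1, exactly 0.5, close to 0
kept = g * v                      # component-wise multiplication
```

In an LSTM the pre-activations are not hand-picked like this; they are computed from the current input and the previous state with learned weights.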

3.4 Simple RNN vs. LSTM

3.5 LSTM: Forget Gate

3.6 LSTM: Input Gate

3.7 LSTM: Update Memory Cell

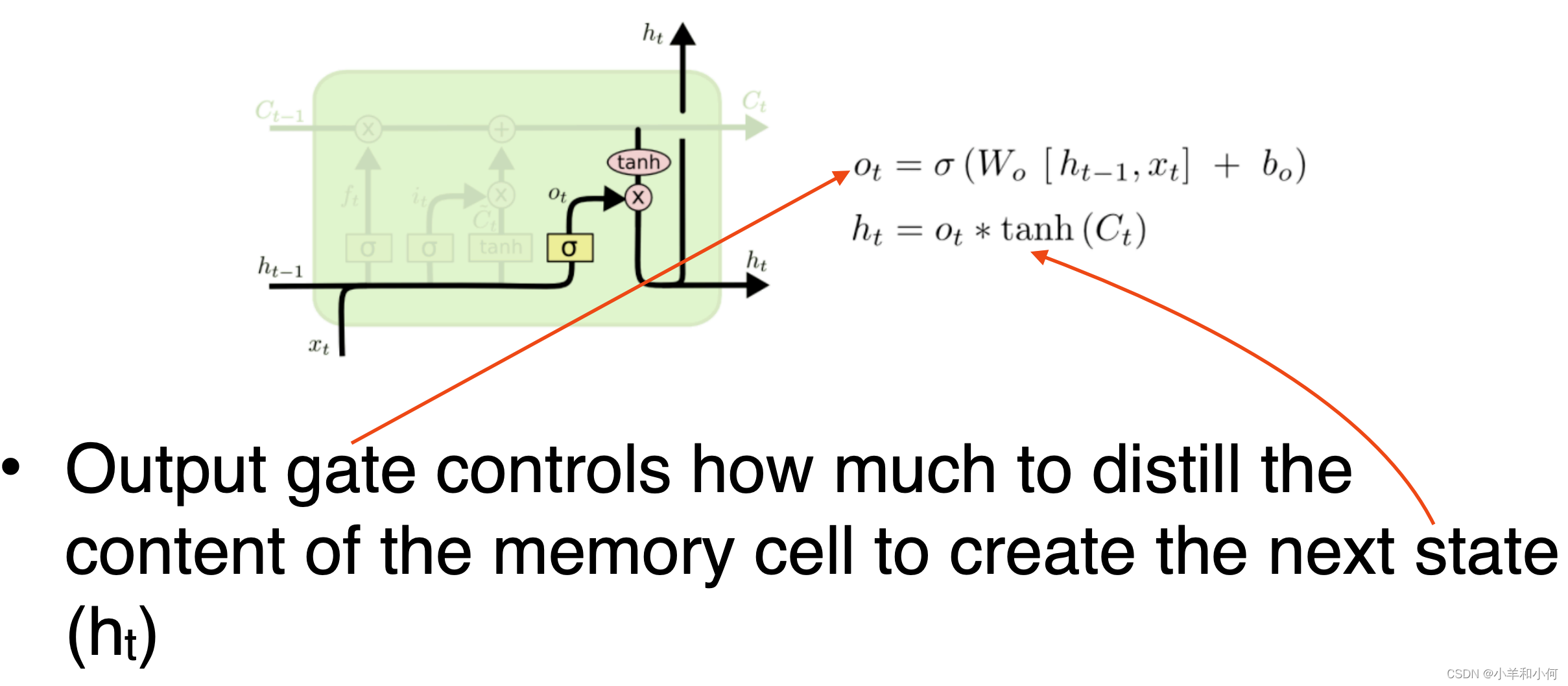

3.8 LSTM: Output Gate

3.9 LSTM: Summary
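The forget gate, input gate, memory cell update, and output gate can be put together in one step function. This is a sketch of the commonly used LSTM formulation, not necessarily the exact notation of the notes; the names `lstm_step`, `params`, `W_f`, `W_i`, `W_o`, `W_c`, and the dimensions are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step: returns the new hidden state h and memory cell c."""
    z = np.concatenate([h_prev, x])                        # previous state + input
    f = sigmoid(params["W_f"] @ z + params["b_f"])         # forget gate
    i = sigmoid(params["W_i"] @ z + params["b_i"])         # input gate
    o = sigmoid(params["W_o"] @ z + params["b_o"])         # output gate
    c_tilde = np.tanh(params["W_c"] @ z + params["b_c"])   # candidate content
    c = f * c_prev + i * c_tilde                           # update memory cell
    h = o * np.tanh(c)                                     # gated output
    return h, c

rng = np.random.default_rng(0)
hidden, emb = 4, 3  # hypothetical sizes
params = {}
for name in "fioc":
    params[f"W_{name}"] = rng.normal(scale=0.5, size=(hidden, hidden + emb))
    params[f"b_{name}"] = np.zeros(hidden)

h, c = np.zeros(hidden), np.zeros(hidden)
for x in rng.normal(size=(5, emb)):
    h, c = lstm_step(x, h, c, params)
```

The memory cell update is additive: when the forget gate is near 1 and the input gate is near 0, the cell content passes through a step almost unchanged, which is how the cell can preserve information (and gradients) over many steps.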

4. Applications

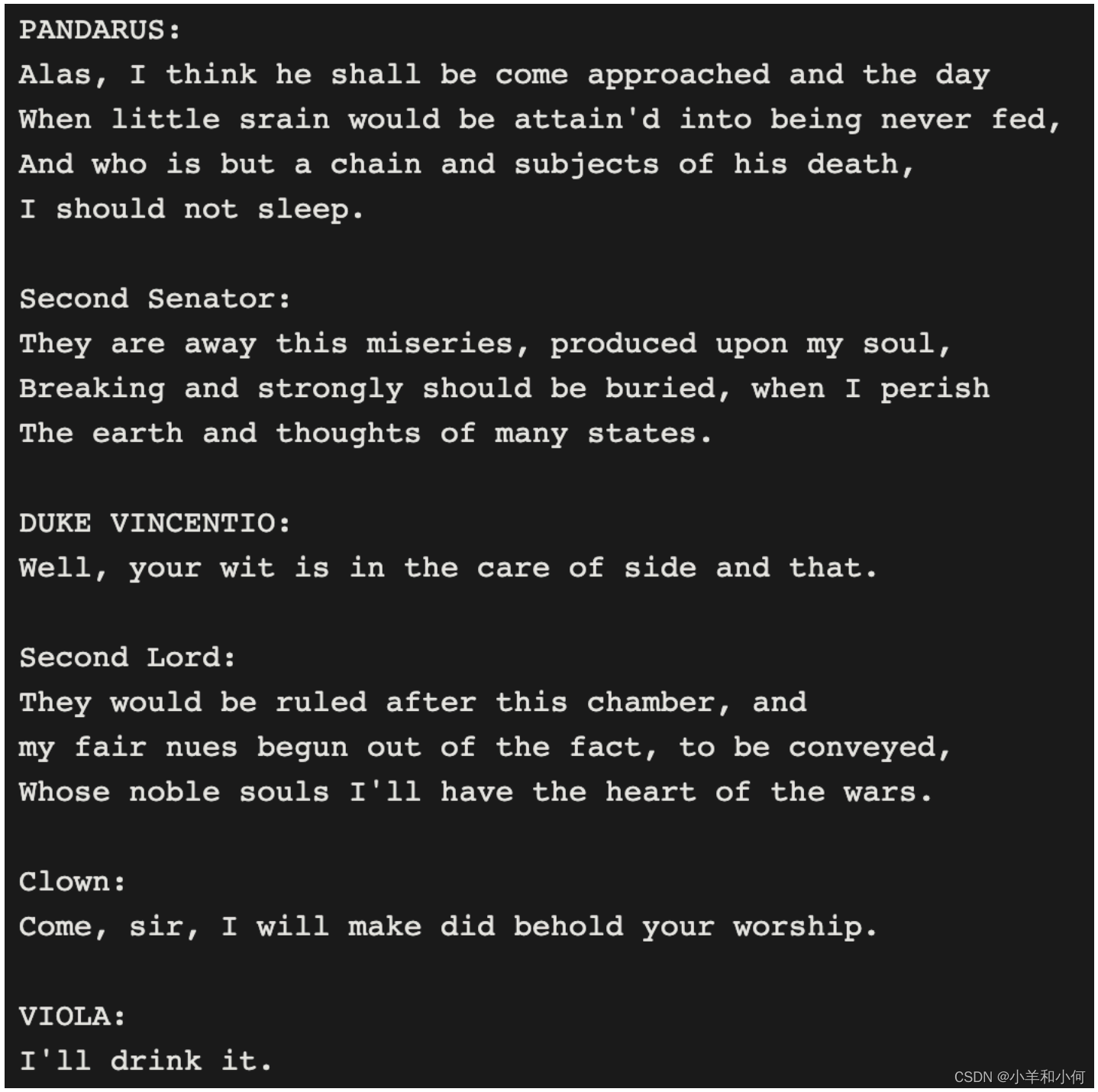

4.1 Shakespeare Generator

Training data = all works of Shakespeare

Model: character RNN, hidden dimension = 512
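Generation with a character-level model amounts to repeatedly sampling the next character and feeding it back in as the next input. The sketch below assumes a simple tanh RNN with one-hot character inputs and random, untrained weights, so its output is gibberish; the function `sample_text`, the tiny alphabet, and the weight scales are hypothetical, and only the hidden dimension of 512 comes from the notes.

```python
import numpy as np

def sample_text(params, chars, start, length, rng):
    """Sample `length` characters after `start`, one at a time."""
    W_s, W_x, W_o = params
    index = {ch: i for i, ch in enumerate(chars)}
    s = np.zeros(W_s.shape[0])
    out = [start]
    for _ in range(length):
        x = np.zeros(len(chars))
        x[index[out[-1]]] = 1.0            # one-hot encoding of the last character
        s = np.tanh(W_s @ s + W_x @ x)     # update the state
        logits = W_o @ s
        probs = np.exp(logits - logits.max())
        probs /= probs.sum()               # softmax over the alphabet
        out.append(chars[rng.choice(len(chars), p=probs)])
    return "".join(out)

rng = np.random.default_rng(0)
chars = list("abcdefgh ")  # toy alphabet (assumption)
hidden = 512
params = (
    rng.normal(scale=0.01, size=(hidden, hidden)),
    rng.normal(scale=0.01, size=(hidden, len(chars))),
    rng.normal(scale=0.01, size=(len(chars), hidden)),
)
text = sample_text(params, chars, "a", 20, rng)
```

With trained weights, the same loop is what produces the sampled text; only the parameters change.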

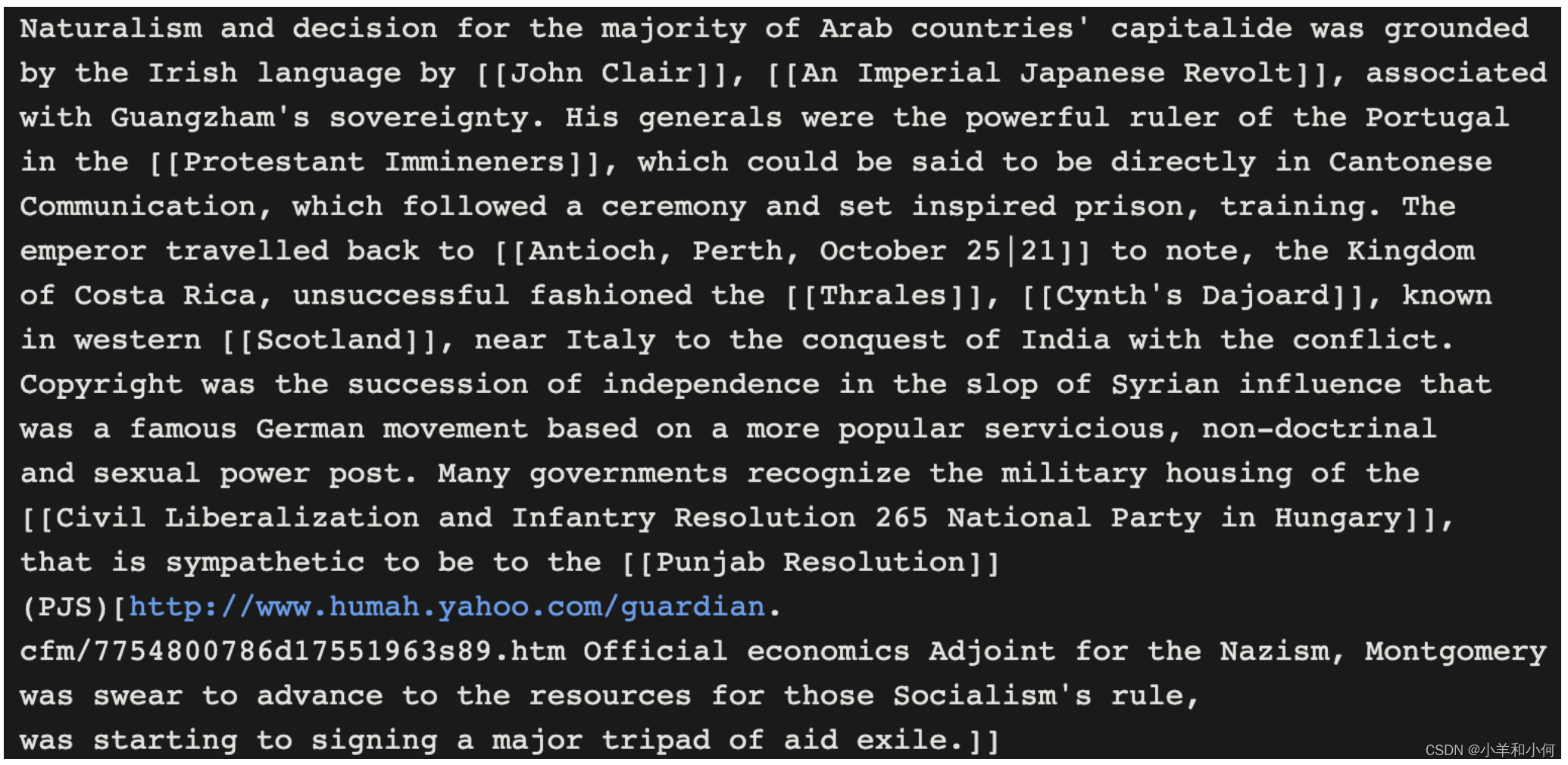

4.2 Wikipedia Generator

Training data = 100MB of Wikipedia raw data

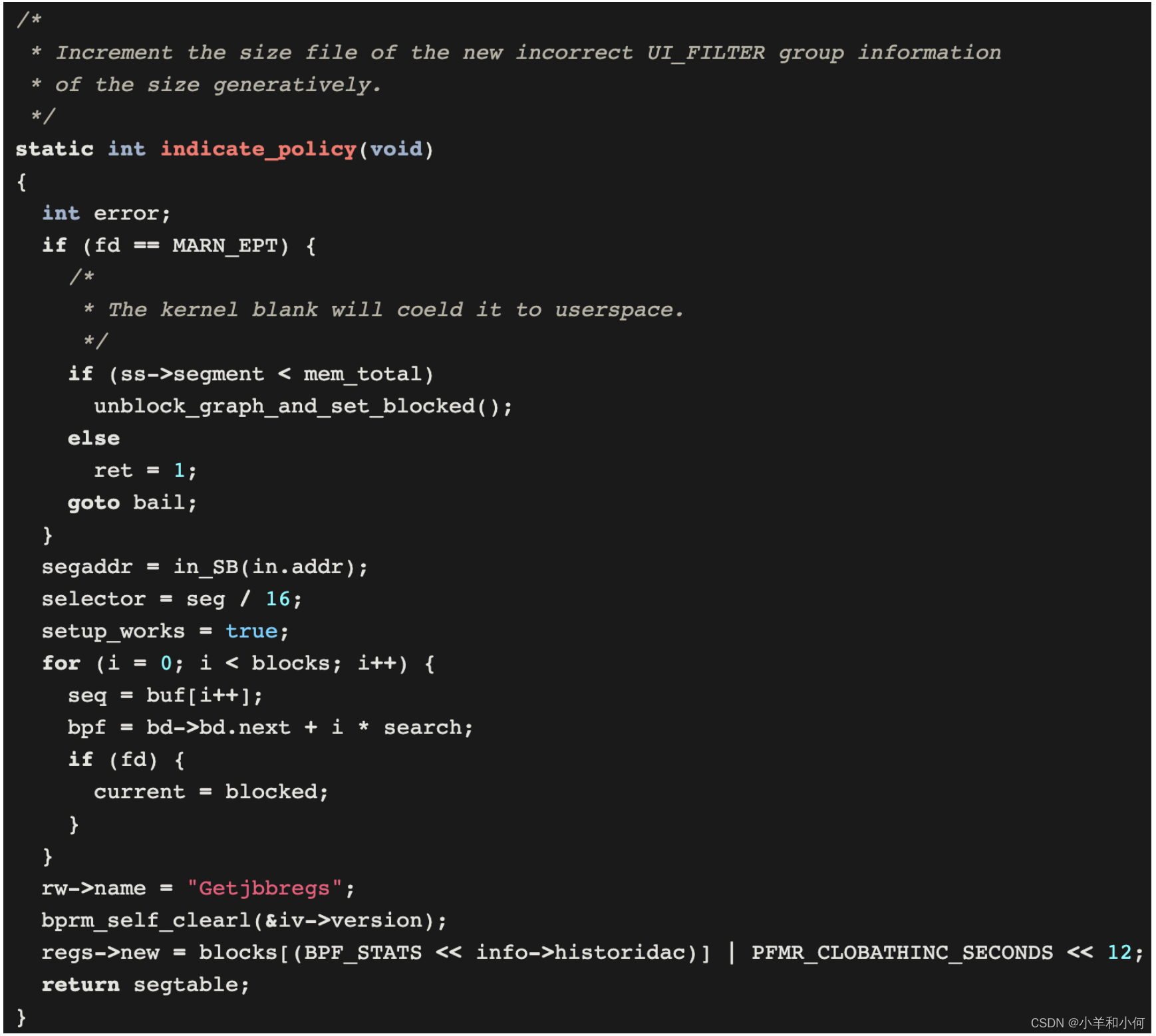

4.3 Code Generator

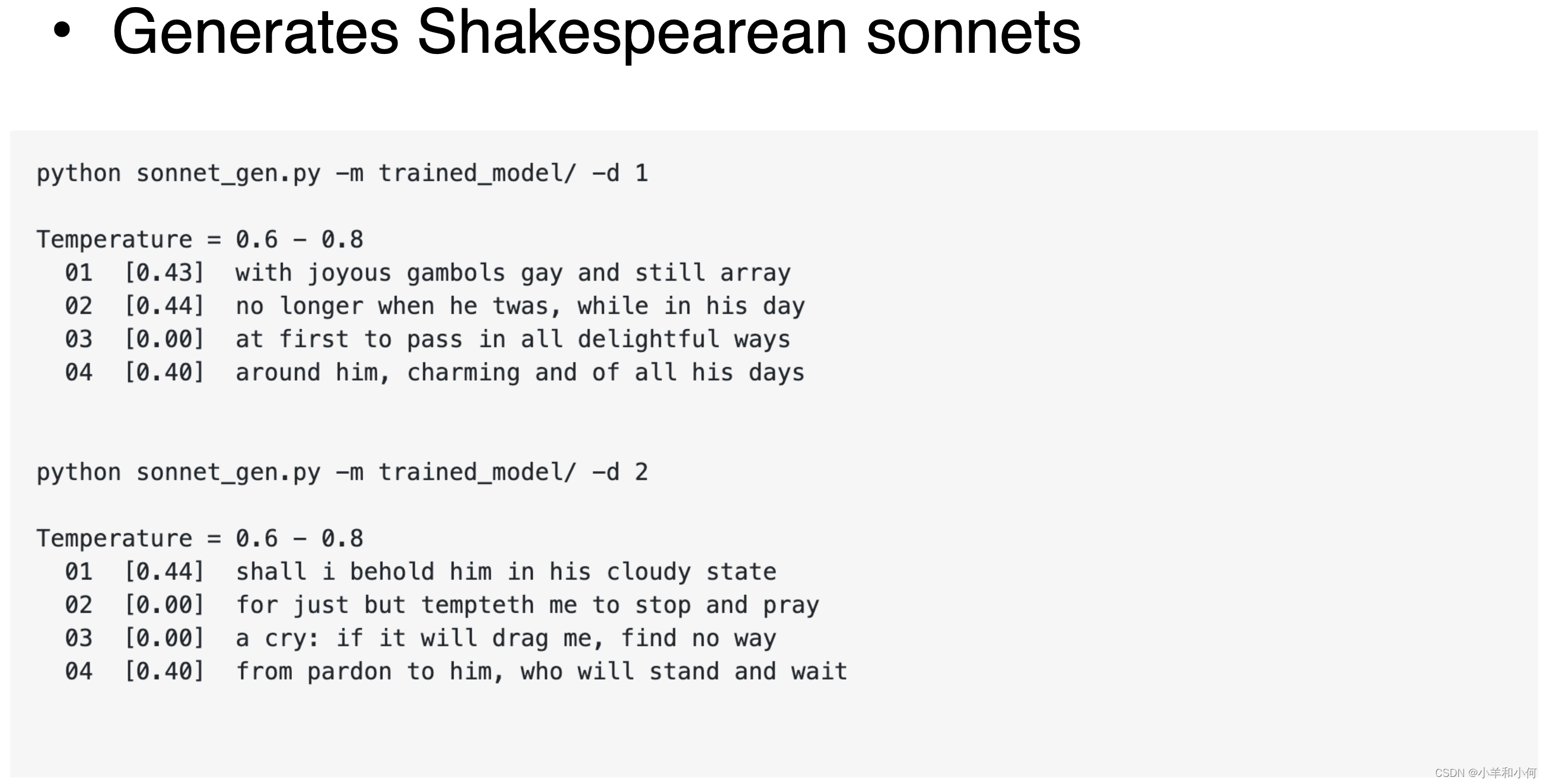

4.4 Deep-Speare

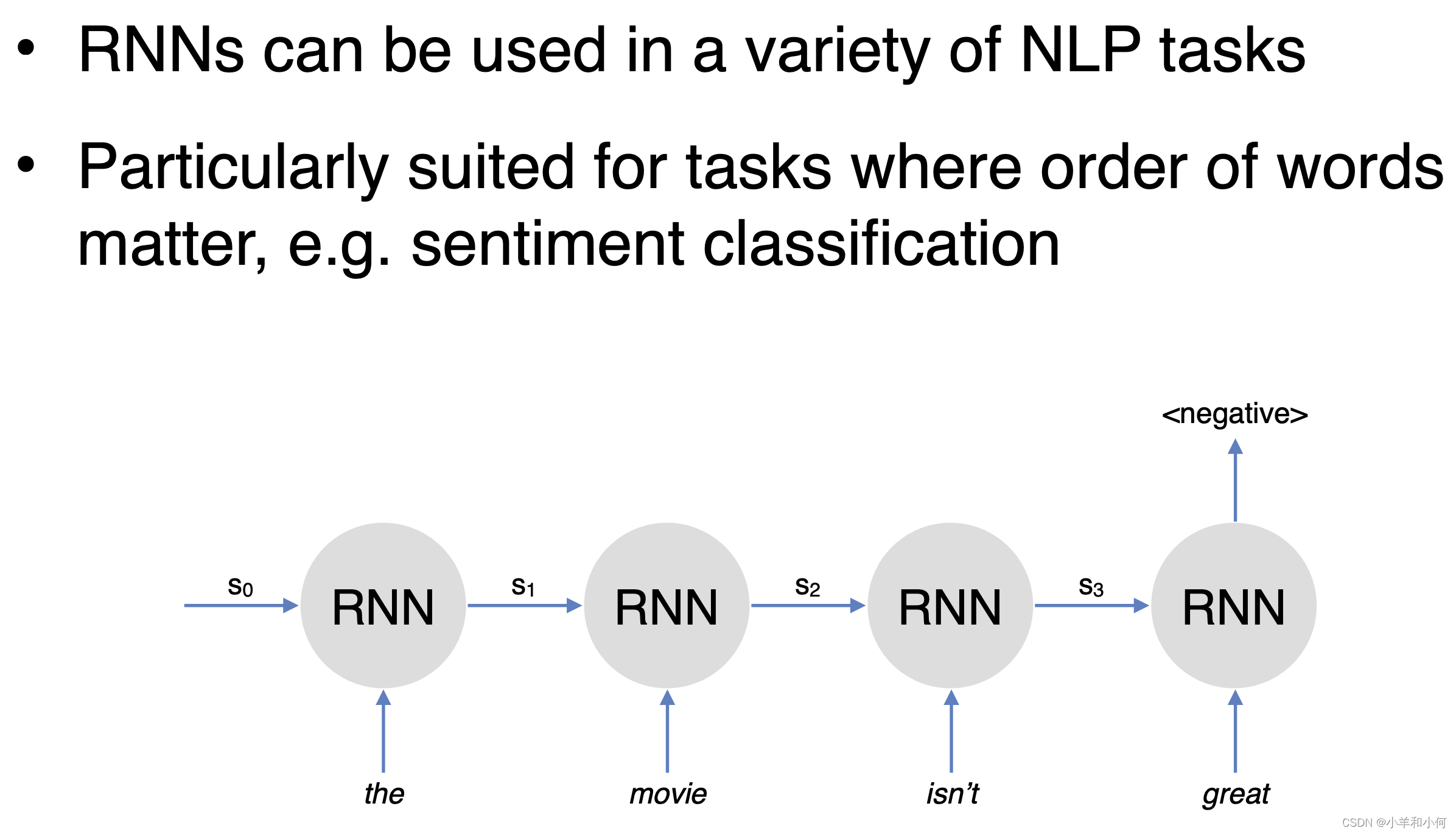

4.5 Text Classification

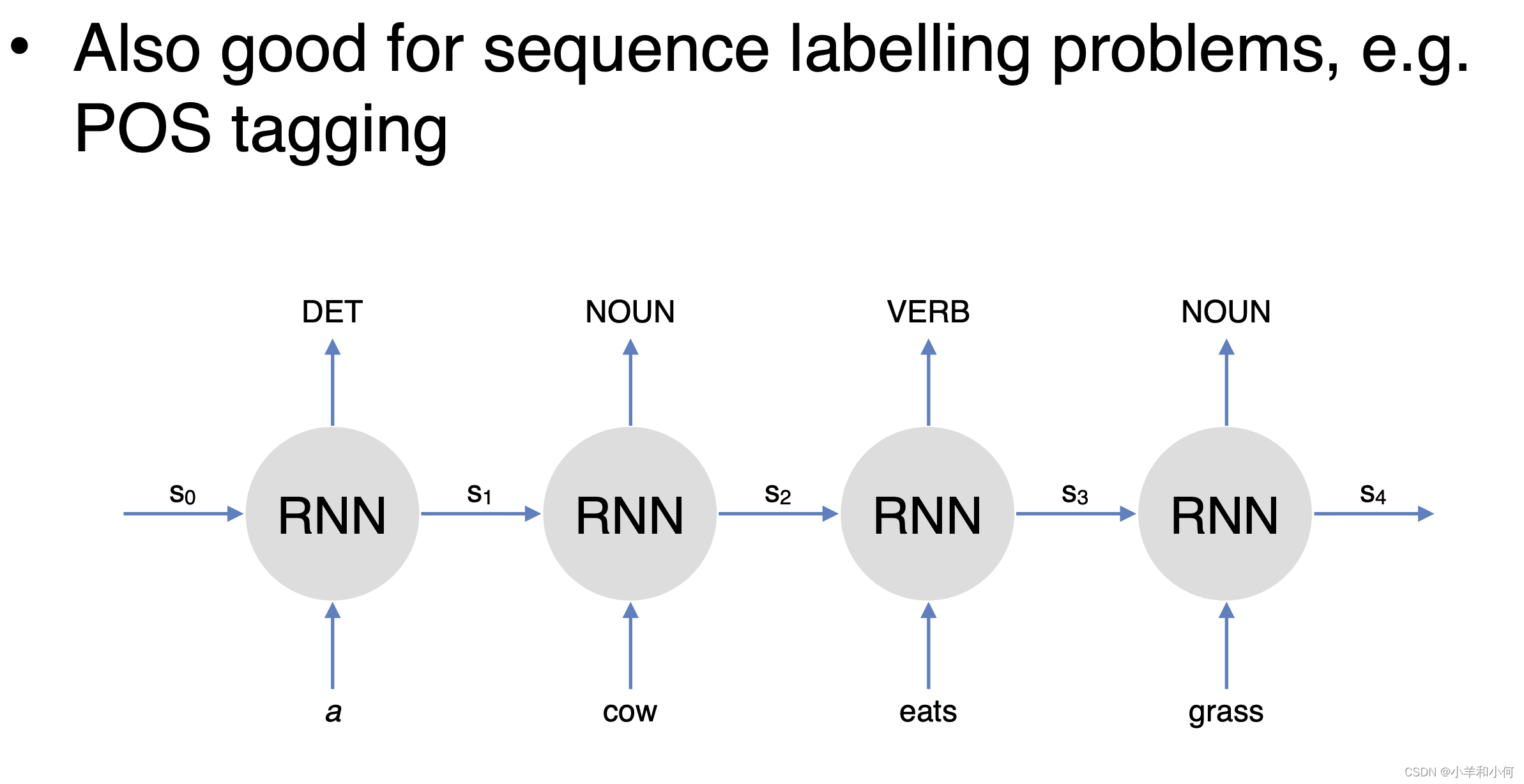

4.6 Sequence Labeling

4.7 Variants

4.8 Multi-layer LSTM

4.9 Bidirectional LSTM

5. Final Words

Pros

Has the ability to capture long-range contexts

Just like feed-forward networks: flexible

Cons

Slower than feed-forward networks due to sequential processing

In practice, doesn't capture long-range dependencies very well (evident when generating very long text)

In practice, also doesn't stack well (multi-layer LSTM)

Less popular nowadays due to the emergence of more advanced architectures