Softmax backpropagation: a Python implementation

Concepts

Derivatives for backpropagation

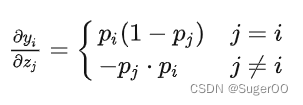

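As the figure shows, every softmax output depends on the inputs of all neurons, because all of them appear in the shared denominator. So during backpropagation each output has to be differentiated with respect to every input, not only its own.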

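There are two cases to consider:

- the input we differentiate with respect to appears in the numerator ($z_j$ with $j = i$)

- the input appears only in the denominator ($z_j$ with $j \neq i$)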

$$p_i = \mathrm{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{N} e^{z_j}}$$

For example, with $N = 3$ and $i = 1$:

$$p_1 = \frac{e^{z_1}}{e^{z_1} + e^{z_2} + e^{z_3}}$$
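For concreteness, here is a minimal plain-Python sketch of the definition with $N = 3$. The input values are arbitrary, and the helper name `softmax` is hypothetical (it is not the `my_softmax` used in the code section).

```python
import math


def softmax(z):
    """Hypothetical helper: p_i = e^{z_i} / sum_j e^{z_j}."""
    exps = [math.exp(v) for v in z]
    total = sum(exps)  # the shared denominator
    return [e / total for e in exps]


z = [1.0, 2.0, 3.0]  # arbitrary example inputs z_1, z_2, z_3
p = softmax(z)
print([round(v, 4) for v in p])
print(sum(p))
```

The outputs are positive, preserve the ordering of the inputs, and sum to 1 up to floating-point rounding.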

When the input appears in the numerator ($j = i$), apply the quotient rule and use $\partial e^{z_1} / \partial z_1 = e^{z_1}$:

$$\begin{aligned}
\frac{\partial p_1}{\partial z_1} &= \frac{\partial}{\partial z_1}\left(\frac{e^{z_1}}{e^{z_1}+e^{z_2}+e^{z_3}}\right) \\
&= \frac{e^{z_1}(e^{z_1}+e^{z_2}+e^{z_3}) - e^{z_1} \cdot e^{z_1}}{(e^{z_1}+e^{z_2}+e^{z_3})^2} \\
&= p_1 - p_1^2 \\
&= p_1(1 - p_1)
\end{aligned}$$

In general, $\dfrac{\partial p_i}{\partial z_i} = p_i(1 - p_i)$.
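A quick way to sanity-check the numerator case is a central finite difference: nudge $z_i$ up and down by a small $h$ and compare the change in $p_i$ with $p_i(1 - p_i)$. This is a minimal sketch assuming the step size `h = 1e-6` and arbitrary inputs; `softmax` and `numeric_diag` are hypothetical helpers.

```python
import math


def softmax(z):
    # p_i = e^{z_i} / sum_j e^{z_j}
    exps = [math.exp(v) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def numeric_diag(z, i, h=1e-6):
    # Central difference of p_i with respect to z_i
    up = list(z)
    down = list(z)
    up[i] += h
    down[i] -= h
    return (softmax(up)[i] - softmax(down)[i]) / (2 * h)


z = [1.0, 2.0, 3.0]  # arbitrary example inputs
p = softmax(z)
for i in range(len(z)):
    analytic = p[i] * (1 - p[i])  # derived formula p_i(1 - p_i)
    print(i, analytic, numeric_diag(z, i))
```

The two columns should agree to roughly eight or more decimal places; the remaining gap comes from the finite step size and rounding.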

When the input appears only in the denominator ($j \neq i$; in the example, $z_2$ or $z_3$, which are symmetric and give results of the same form), the numerator's derivative is 0:

$$\begin{aligned}
\frac{\partial p_1}{\partial z_2} &= \frac{\partial}{\partial z_2}\left(\frac{e^{z_1}}{e^{z_1}+e^{z_2}+e^{z_3}}\right) \\
&= \frac{0 - e^{z_1} \cdot e^{z_2}}{(e^{z_1}+e^{z_2}+e^{z_3})^2} \\
&= -p_2 \cdot p_1
\end{aligned}$$

In general, for $j \neq i$, $\dfrac{\partial p_i}{\partial z_j} = -p_j \cdot p_i$.
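The two cases combine into one expression, $\dfrac{\partial p_i}{\partial z_j} = p_i(\delta_{ij} - p_j)$, where $\delta_{ij}$ is 1 when $i = j$ and 0 otherwise. The plain-Python sketch below (hypothetical helper names, step size `h = 1e-6` assumed) builds the full Jacobian from this expression and compares every entry, including the off-diagonal $-p_j p_i$ terms, against central finite differences.

```python
import math


def softmax(z):
    # p_i = e^{z_i} / sum_j e^{z_j}
    exps = [math.exp(v) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def analytic_jacobian(p):
    # J[i][j] = dp_i/dz_j = p_i * (delta_ij - p_j)
    n = len(p)
    return [[p[i] * ((1.0 if i == j else 0.0) - p[j]) for j in range(n)]
            for i in range(n)]


def numeric_jacobian(z, h=1e-6):
    # Column j: central difference of all outputs with respect to z_j
    n = len(z)
    jac = [[0.0] * n for _ in range(n)]
    for j in range(n):
        up = list(z)
        down = list(z)
        up[j] += h
        down[j] -= h
        p_up, p_down = softmax(up), softmax(down)
        for i in range(n):
            jac[i][j] = (p_up[i] - p_down[i]) / (2 * h)
    return jac


z = [1.0, 2.0, 3.0]  # arbitrary example inputs
J = analytic_jacobian(softmax(z))
J_num = numeric_jacobian(z)
max_err = max(abs(J[i][j] - J_num[i][j]) for i in range(3) for j in range(3))
print(max_err)
```

Two properties are visible in `J`: it is symmetric ($-p_j p_i = -p_i p_j$), and each row sums to zero, because the outputs always sum to 1 and so cannot all move in the same direction.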

Code

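```python
import math

import torch


def my_softmax(features):
    # Sum of exponentials over all inputs (the softmax denominator)
    values = features.detach().tolist()
    _sum = 0.0
    for x in values:
        _sum += math.e ** x
    # Each output is e^{z_i} divided by the shared denominator
    return torch.tensor([math.e ** x / _sum for x in values])


def my_softmax_grad(outputs):
    # Build the Jacobian row by row: grad[i][j] = d p_i / d z_j
    p = outputs.tolist()
    n = len(p)
    grad = []
    for i in range(n):
        temp = []
        for j in range(n):
            if i == j:
                # Input in the numerator: p_i * (1 - p_i)
                temp.append(p[i] * (1 - p[i]))
            else:
                # Input only in the denominator: -p_j * p_i
                temp.append(-p[j] * p[i])
        grad.append(torch.tensor(temp))
    return grad


if __name__ == '__main__':
    features = torch.randn(10)
    features.requires_grad_()

    # Compare the hand-written softmax with PyTorch's
    torch_softmax = torch.nn.functional.softmax
    p1 = torch_softmax(features, dim=0)
    p2 = my_softmax(features)
    print(torch.allclose(p1, p2))

    n = len(p1)
    p2_grad = my_softmax_grad(p2)
    for i in range(n):
        # Gradient of the i-th output w.r.t. all inputs = row i of the Jacobian
        p1_grad = torch.autograd.grad(p1[i], features, retain_graph=True)
        print(torch.allclose(p1_grad[0], p2_grad[i]))
```

References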
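In an actual backward pass the Jacobian built by `my_softmax_grad` is usually not materialized. Given the upstream gradient $g_i = \partial L / \partial p_i$, the chain rule gives $\partial L / \partial z_j = \sum_i g_i \, p_i(\delta_{ij} - p_j) = p_j \left(g_j - \sum_i g_i p_i\right)$. The plain-Python sketch below (hypothetical helper names, arbitrary example values for `z` and `g`) computes this shortcut and checks it against multiplying by the explicit Jacobian.

```python
import math


def softmax(z):
    # p_i = e^{z_i} / sum_j e^{z_j}
    exps = [math.exp(v) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_backward(p, g):
    # dL/dz_j = p_j * (g_j - sum_i g_i * p_i); the Jacobian is never built
    dot = sum(gi * pi for gi, pi in zip(g, p))
    return [pj * (gj - dot) for pj, gj in zip(p, g)]


def softmax_backward_explicit(p, g):
    # Same result via the full Jacobian J[i][j] = p_i * (delta_ij - p_j)
    n = len(p)
    return [sum(g[i] * p[i] * ((1.0 if i == j else 0.0) - p[j]) for i in range(n))
            for j in range(n)]


z = [0.5, -1.0, 2.0, 0.1]  # arbitrary inputs
g = [0.3, -0.2, 1.0, 0.0]  # arbitrary upstream gradient dL/dp
p = softmax(z)
fast = softmax_backward(p, g)
slow = softmax_backward_explicit(p, g)
print(fast)
print(max(abs(a - b) for a, b in zip(fast, slow)))
```

The shortcut needs $O(N)$ work per input vector instead of the $O(N^2)$ needed to build and multiply the Jacobian, which matters when $N$ is a large vocabulary or class count.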

https://zhuanlan.zhihu.com/p/105722023

https://www.cnblogs.com/gzyatcnblogs/articles/15937870.html