Flink Cluster Setup

- Prerequisite: set up a Hadoop 2.7.5 cluster:

https://blog.csdn.net/QYHuiiQ/article/details/123055389

- Cluster role assignment

| Hostname | IP | Role |
| --- | --- | --- |
| hadoop01 | 192.168.126.132 | JobManager |
| hadoop02 | 192.168.126.133 | TaskManager |
| hadoop03 | 192.168.126.134 | TaskManager |

- Download Flink

https://archive.apache.org/dist/flink/flink-1.13.0/

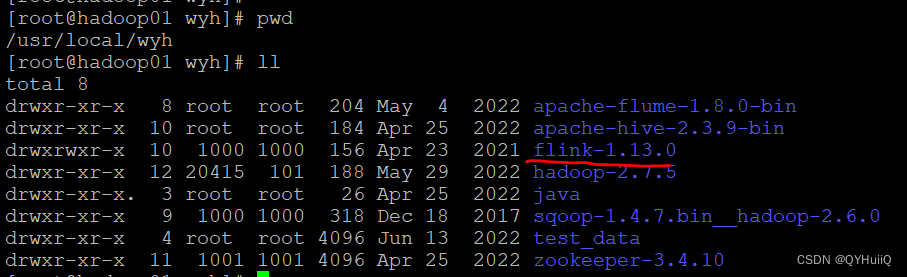

Upload the archive to the server and extract it:

[root@hadoop01 wyh]# tar -zxvf flink-1.13.0-bin-scala_2.12.tgz

- Configure the JobManager node

[root@hadoop01 conf]# pwd

/usr/local/wyh/flink-1.13.0/conf

[root@hadoop01 conf]# vi flink-conf.yaml

# Set the following option to the JobManager's hostname

jobmanager.rpc.address: hadoop01

- Configure the TaskManager nodes

[root@hadoop01 conf]# pwd

/usr/local/wyh/flink-1.13.0/conf

[root@hadoop01 conf]# vi workers

[root@hadoop01 conf]# cat workers

hadoop02

hadoop03
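The two manual edits above (the JobManager address in flink-conf.yaml and the host list in workers) could also be scripted. The following is only a minimal sketch: `configure_flink` is a hypothetical helper name, not something shipped with Flink, and it assumes the conf directory layout shown in this post.

```shell
# Hypothetical helper (not part of Flink): sets jobmanager.rpc.address
# and writes the workers file in the given conf directory.
configure_flink() {
  local conf_dir="$1" jobmanager="$2"
  shift 2   # the remaining arguments are the TaskManager hostnames
  local conf_file="$conf_dir/flink-conf.yaml"

  # Replace the key if it already exists, otherwise append it.
  if grep -q '^jobmanager\.rpc\.address:' "$conf_file" 2>/dev/null; then
    sed "s/^jobmanager\.rpc\.address:.*/jobmanager.rpc.address: $jobmanager/" \
      "$conf_file" > "$conf_file.tmp" && mv "$conf_file.tmp" "$conf_file"
  else
    printf 'jobmanager.rpc.address: %s\n' "$jobmanager" >> "$conf_file"
  fi

  # The workers file lists one TaskManager hostname per line.
  printf '%s\n' "$@" > "$conf_dir/workers"
}
```

For the cluster in this post it would be called as `configure_flink /usr/local/wyh/flink-1.13.0/conf hadoop01 hadoop02 hadoop03`.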

- Distribute the Flink directory from hadoop01 to hadoop02 and hadoop03

[root@hadoop01 wyh]# scp -r flink-1.13.0/ hadoop02:$PWD

[root@hadoop01 wyh]# scp -r flink-1.13.0/ hadoop03:$PWD
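With more than a couple of TaskManagers, repeating the scp command by hand gets tedious. As a rough sketch, the copy can be driven by the workers file instead. `distribute_flink` and the `DRY_RUN` variable are hypothetical names introduced here for illustration, and the sketch assumes the target path on every worker matches the path on hadoop01.

```shell
# Hypothetical helper: copies the Flink directory to every host listed
# in the workers file. With DRY_RUN=1 it only prints the scp commands.
distribute_flink() {
  local flink_dir="$1" workers_file="$2"
  local parent host
  # Copy into the same parent directory on the remote host.
  parent="$(dirname "$flink_dir")"

  # Read hosts on fd 3 so scp/ssh cannot swallow the loop's input.
  while IFS= read -r host <&3 || [ -n "$host" ]; do
    # Skip blank lines and comment lines.
    case "$host" in ''|'#'*) continue ;; esac
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $flink_dir $host:$parent"
    else
      scp -r "$flink_dir" "$host:$parent"
    fi
  done 3< "$workers_file"
}
```

Running `DRY_RUN=1 distribute_flink /usr/local/wyh/flink-1.13.0 /usr/local/wyh/flink-1.13.0/conf/workers` first is a cheap way to check the generated commands before copying anything.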

- Start the Flink cluster

[root@hadoop01 bin]# pwd

/usr/local/wyh/flink-1.13.0/bin

[root@hadoop01 bin]# ./start-cluster.sh

Starting cluster.

Starting standalonesession daemon on host hadoop01.

Starting taskexecutor daemon on host hadoop02.

Starting taskexecutor daemon on host hadoop03.

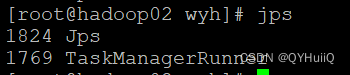

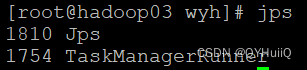

- After startup, check the processes on all three machines

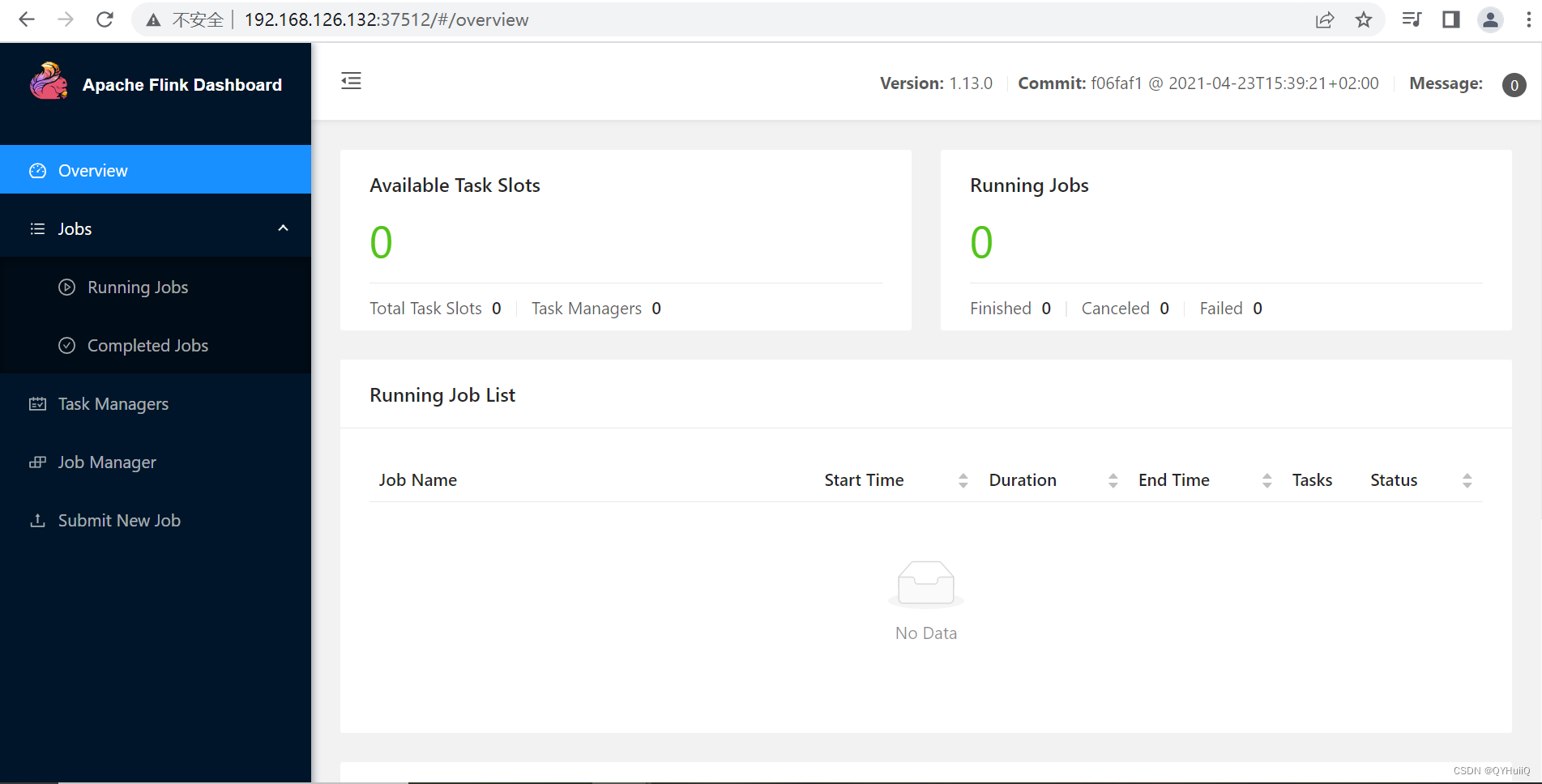

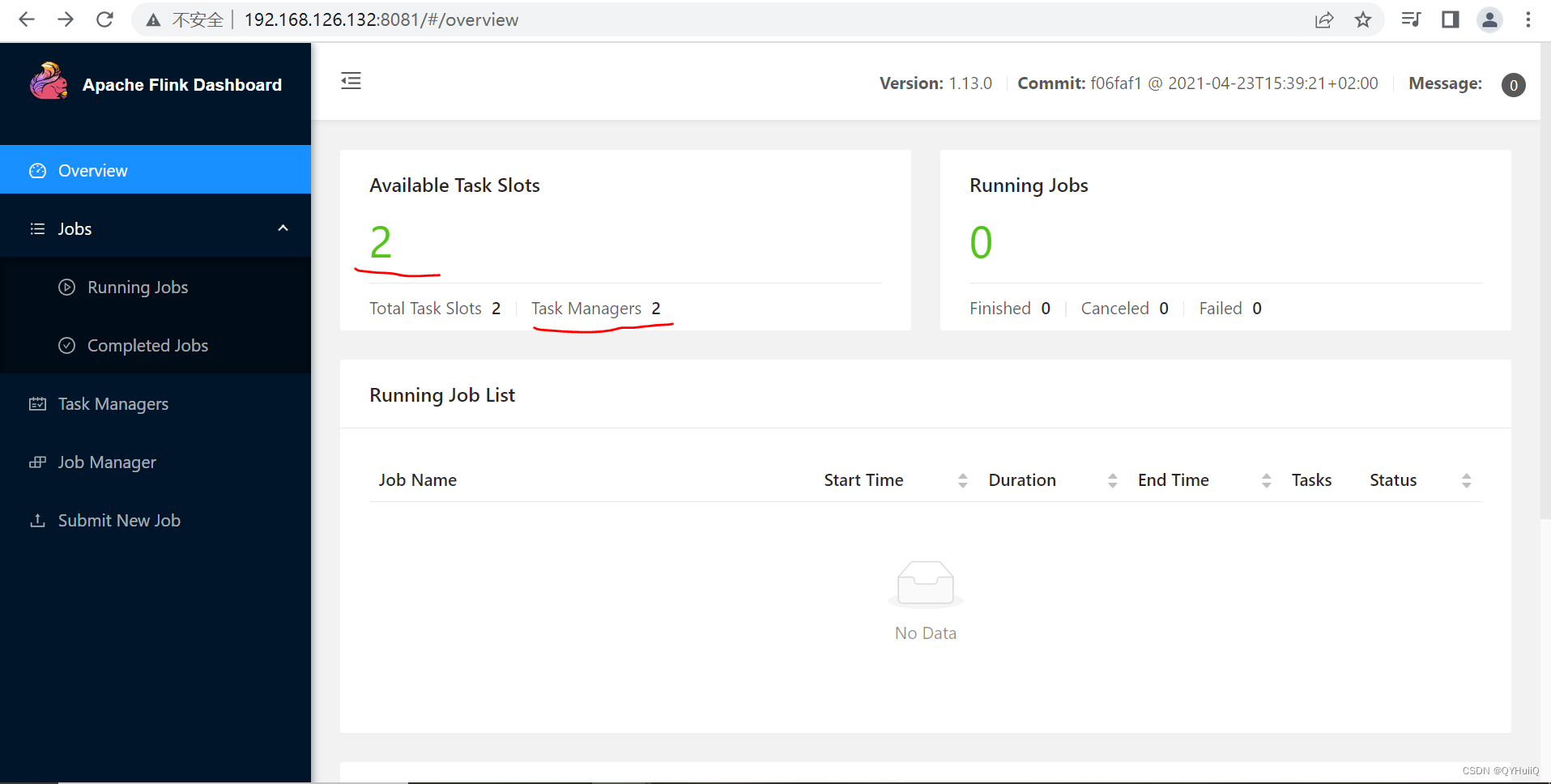
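The process check can also be done from a script by filtering `jps` output. This is a sketch under one assumption: that a Flink 1.13 standalone cluster shows `StandaloneSessionClusterEntrypoint` on the JobManager and `TaskManagerRunner` on each TaskManager. `check_flink_role` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `jps` output on stdin and succeeds if the
# JVM expected for the given role is present.
# Assumed main class names for a Flink 1.13 standalone cluster.
check_flink_role() {
  local role="$1" expected
  case "$role" in
    jobmanager)  expected='StandaloneSessionClusterEntrypoint' ;;
    taskmanager) expected='TaskManagerRunner' ;;
    *) echo "unknown role: $role" >&2; return 2 ;;
  esac
  # jps prints "<pid> <main class>" per line; compare the second column.
  awk -v name="$expected" '$2 == name { found = 1 } END { exit found ? 0 : 1 }'
}
```

On hadoop01 this would be used as `jps | check_flink_role jobmanager`, and on hadoop02 or hadoop03 as `jps | check_flink_role taskmanager`.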

Access the web UI:

http://192.168.126.132:8081/

By default each TaskManager provides one task slot, and this cluster has two TaskManagers, so there are two task slots in total.
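The slot count follows directly from the configuration: number of hosts in workers multiplied by `taskmanager.numberOfTaskSlots` in flink-conf.yaml. A small sketch of that arithmetic, where `total_slots` is a hypothetical helper and the fallback of 1 assumes the key is absent from the file:

```shell
# Hypothetical helper: total task slots =
#   (hosts in workers) x (taskmanager.numberOfTaskSlots).
total_slots() {
  local conf_dir="$1"
  local per_tm workers
  # Slots per TaskManager; assume 1 when the key is not set.
  per_tm="$(sed -n 's/^taskmanager\.numberOfTaskSlots:[[:space:]]*\([0-9][0-9]*\).*/\1/p' \
    "$conf_dir/flink-conf.yaml" | head -n 1)"
  per_tm="${per_tm:-1}"
  # Count the non-empty lines in the workers file.
  workers="$(grep -c '[^[:space:]]' "$conf_dir/workers" || true)"
  echo $(( per_tm * workers ))
}
```

Calling `total_slots /usr/local/wyh/flink-1.13.0/conf` on the setup described here should give the same number the web UI shows. Raising `taskmanager.numberOfTaskSlots` and restarting the cluster increases it accordingly.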

Stop the Flink cluster.

[root@hadoop01 bin]# pwd

/usr/local/wyh/flink-1.13.0/bin

[root@hadoop01 bin]# ./stop-cluster.sh

Next, we try starting the cluster in YARN mode, which requires Hadoop to be running first.

- Start the Hadoop cluster

[root@hadoop01 hadoop-2.7.5]# pwd

/usr/local/wyh/hadoop-2.7.5

[root@hadoop01 hadoop-2.7.5]# start-all.sh

- Start the Flink cluster in YARN mode

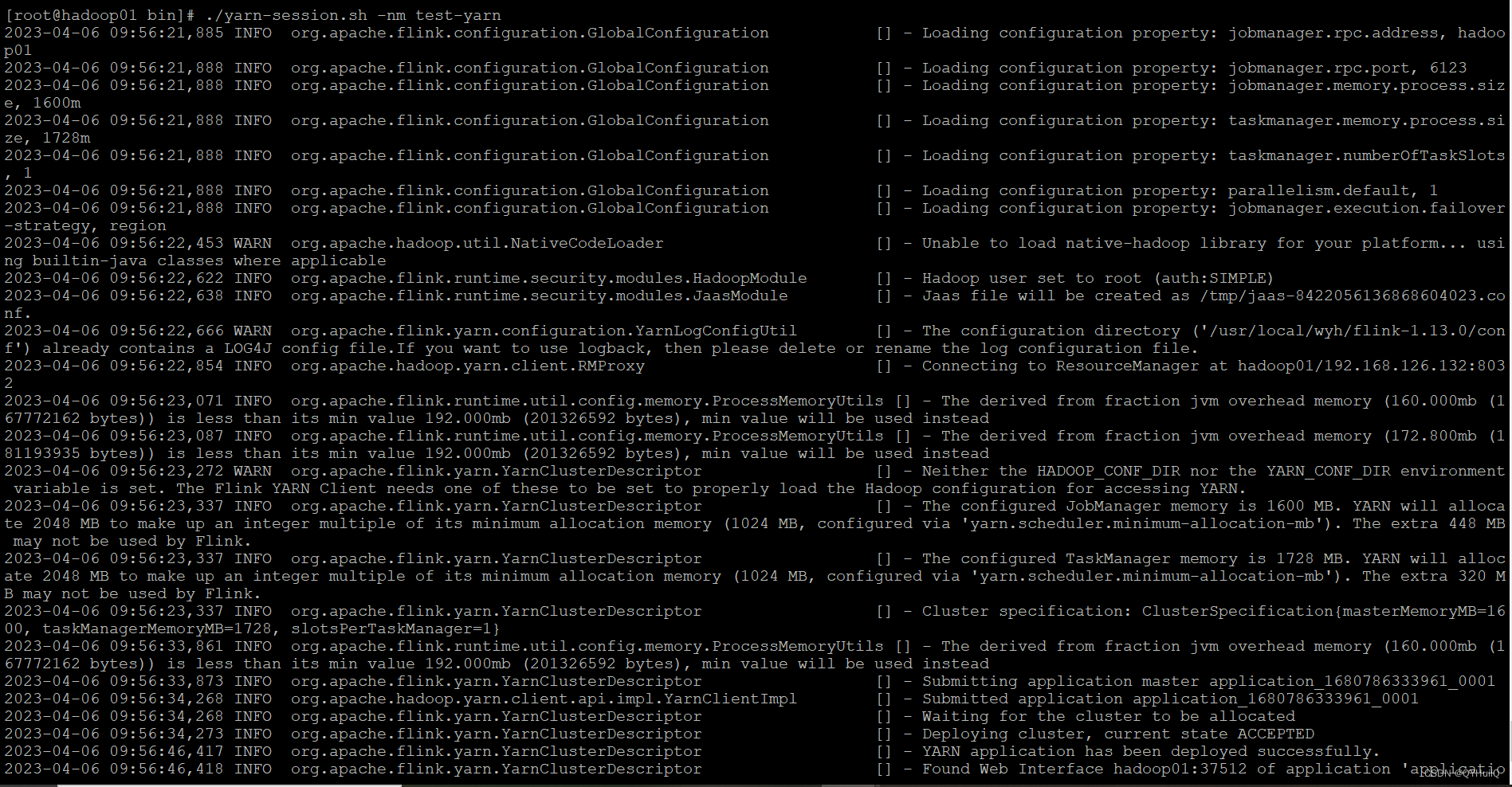

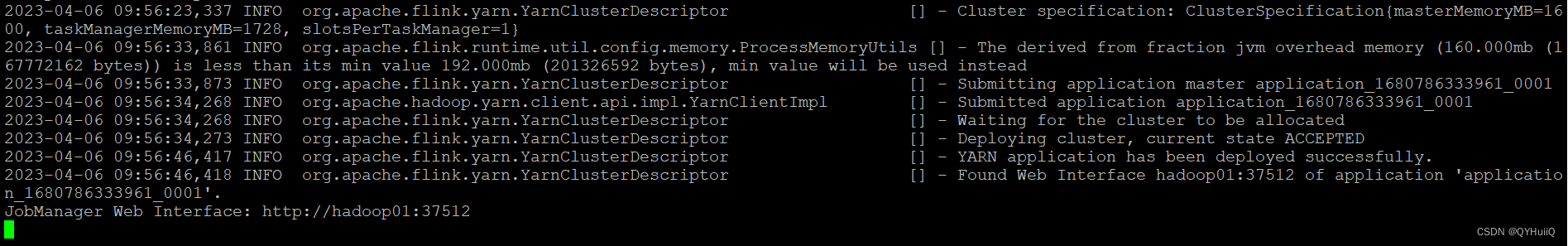

[root@hadoop01 bin]# ./yarn-session.sh -nm test-yarn

# The -nm option is followed by a custom name for the application on YARN

Startup succeeded.
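If yarn-session.sh fails because it cannot find the Hadoop classes, the usual cause is an unset `HADOOP_CLASSPATH`. The sketch below wraps the command with that check. `start_yarn_session` is a hypothetical wrapper name, and adding `-d` (detached mode) is a choice made here, not something the original command used.

```shell
# Hypothetical wrapper around yarn-session.sh: makes sure
# HADOOP_CLASSPATH is set before starting the session.
start_yarn_session() {
  local flink_home="$1" app_name="$2"

  # Flink needs the Hadoop jars on its classpath to talk to YARN.
  if [ -z "${HADOOP_CLASSPATH:-}" ]; then
    if command -v hadoop >/dev/null 2>&1; then
      HADOOP_CLASSPATH="$(hadoop classpath)"
      export HADOOP_CLASSPATH
    else
      echo "hadoop not found on PATH; set HADOOP_CLASSPATH first" >&2
      return 1
    fi
  fi

  # -nm sets the YARN application name; -d runs the session detached.
  "$flink_home/bin/yarn-session.sh" -nm "$app_name" -d
}
```

For this cluster the call would be `start_yarn_session /usr/local/wyh/flink-1.13.0 test-yarn`.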

Access the UI: