Cosine-decay learning rate combined with linear warmup: the code

The following code is taken from the official TensorFlow TPU repository.

    import numpy as np
    import tensorflow as tf

    def cosine_learning_rate_with_linear_warmup(global_step,
                                                init_learning_rate,
                                                warmup_learning_rate,
                                                warmup_steps,
                                                total_steps):
        """Creates the cosine learning rate tensor with linear warmup."""
        global_step = tf.cast(global_step, dtype=tf.float32)
        # Warmup phase: move linearly from warmup_learning_rate to init_learning_rate.
        linear_warmup = (warmup_learning_rate + global_step / warmup_steps *
                         (init_learning_rate - warmup_learning_rate))
        # Cosine phase: decay from init_learning_rate at warmup_steps to 0 at total_steps.
        cosine_learning_rate = (
            init_learning_rate * (tf.cos(
                np.pi * (global_step - warmup_steps) / (total_steps - warmup_steps))
                                  + 1.0) / 2.0)
        # Use the warmup value before warmup_steps and the cosine value afterwards.
        learning_rate = tf.where(global_step < warmup_steps,
                                 linear_warmup, cosine_learning_rate)
        return learning_rate

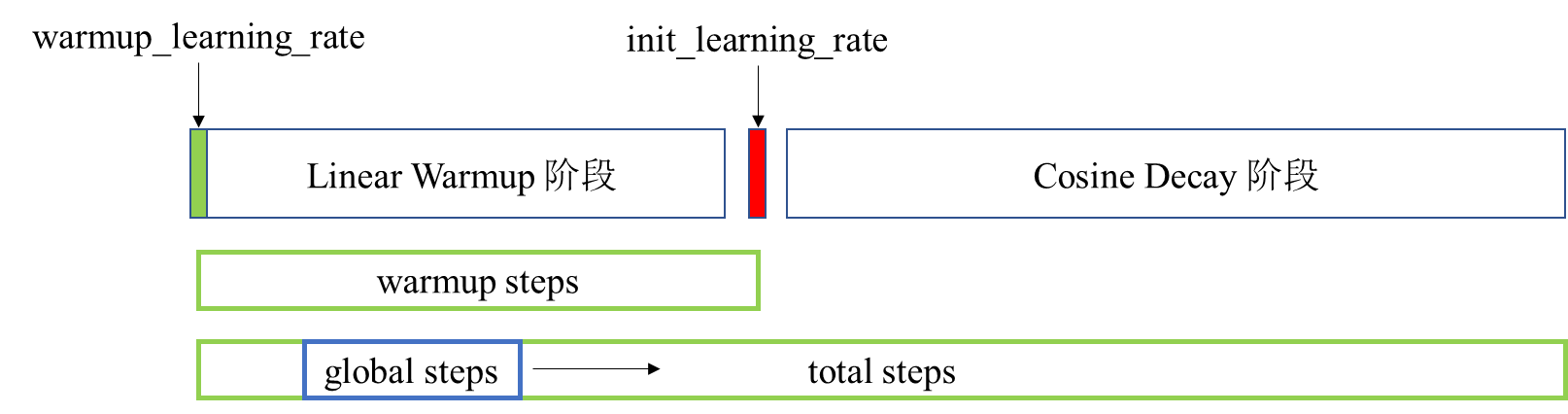

The figure above shows what the five parameters mean, and the code itself is short enough to follow at a glance.

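During the warmup phase, the learning rate moves from `warmup_learning_rate` to `init_learning_rate`. The change is linear, and it can be an increase or a decrease depending on which of the two values is larger.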
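To make the warmup phase concrete, here is a minimal plain-Python sketch of the same linear interpolation. It is not the repository's code: the function name `linear_warmup_lr` and the numeric values are assumptions chosen only for illustration.

```python
def linear_warmup_lr(step, init_learning_rate, warmup_learning_rate, warmup_steps):
    """Linearly interpolate from warmup_learning_rate to init_learning_rate."""
    fraction = step / warmup_steps  # 0.0 at step 0, 1.0 at warmup_steps
    return warmup_learning_rate + fraction * (init_learning_rate - warmup_learning_rate)


# Hypothetical settings: warm up from 0.01 to 0.1 over 100 steps.
warmup_values = [linear_warmup_lr(s, 0.1, 0.01, 100) for s in (0, 50, 100)]
print(warmup_values)  # roughly 0.01, 0.055, 0.1
```

Swapping the two rates (for example, starting at 0.1 and ending at 0.01) gives the linearly decreasing case mentioned above.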

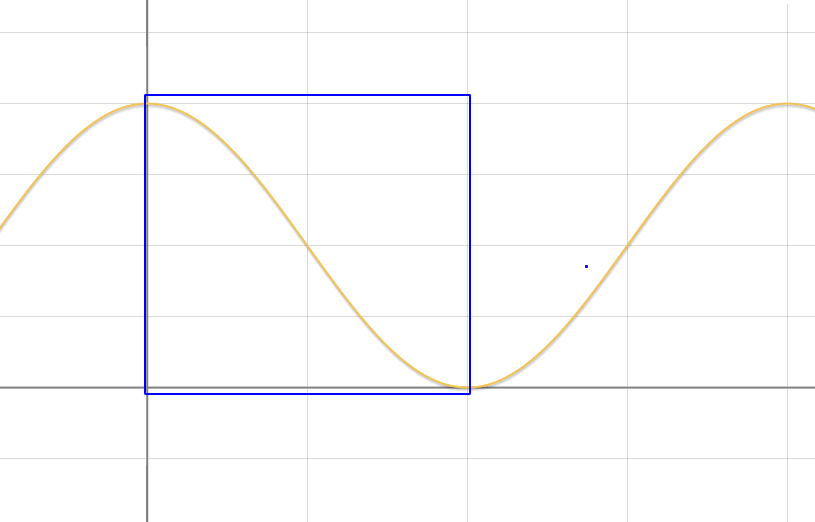

During the cosine-decay phase, the learning rate decays as follows:

$$
lr = \frac{\cos\left(\frac{gl - w}{t - w}\,\pi\right) + 1}{2} \cdot init\_learning\_rate
$$

The variables inside the $\cos$ are:

- $gl$ is `global_step`

- $w$ is `warmup_steps`

- $t$ is `total_steps`
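The formula can be checked numerically with a small plain-Python sketch. The function name `cosine_decay_lr` and the sample values are assumptions for illustration. It uses `math.cos` instead of `tf.cos`, so it works on ordinary numbers, not on tensors.

```python
import math


def cosine_decay_lr(global_step, init_learning_rate, warmup_steps, total_steps):
    """Evaluate lr = (cos((gl - w) / (t - w) * pi) + 1) / 2 * init_learning_rate."""
    progress = (global_step - warmup_steps) / (total_steps - warmup_steps)
    return init_learning_rate * (math.cos(math.pi * progress) + 1.0) / 2.0


# Hypothetical settings: w = 100, t = 1100, init_learning_rate = 0.1.
lr_start = cosine_decay_lr(100, 0.1, 100, 1100)   # gl = w: cos(0) = 1, so lr = init_learning_rate
lr_middle = cosine_decay_lr(600, 0.1, 100, 1100)  # halfway: cos(pi / 2) is about 0, so lr is about half
lr_end = cosine_decay_lr(1100, 0.1, 100, 1100)    # gl = t: cos(pi) = -1, so lr = 0
print(lr_start, lr_middle, lr_end)
```

At `global_step = warmup_steps` the cosine phase starts at `init_learning_rate`, which is also where the warmup phase ends, so the two pieces join without a jump.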

The decay curve is the part inside the blue box in the figure below:

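The learning rate falls slowly at first, then faster, then slowly again, approaching zero as `global_step` approaches `total_steps`.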
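The shape of the curve can be seen by sampling the whole schedule at evenly spaced steps and comparing how much the learning rate drops between samples. The sketch below is a plain-Python restatement of the schedule, with a hypothetical function name `lr_schedule` and illustrative values, not the TensorFlow implementation.

```python
import math


def lr_schedule(step, init_lr, warmup_lr, warmup_steps, total_steps):
    """Linear warmup before warmup_steps, cosine decay afterwards."""
    if step < warmup_steps:
        return warmup_lr + step / warmup_steps * (init_lr - warmup_lr)
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    return init_lr * (math.cos(math.pi * progress) + 1.0) / 2.0


# Hypothetical settings, chosen only for illustration.
init_lr, warmup_lr, warmup_steps, total_steps = 0.1, 0.01, 100, 1100

# Five evenly spaced points across the cosine phase.
sample_steps = [100, 350, 600, 850, 1100]
rates = [lr_schedule(s, init_lr, warmup_lr, warmup_steps, total_steps)
         for s in sample_steps]

# Drop in learning rate over each quarter of the cosine phase.
drops = [rates[i] - rates[i + 1] for i in range(len(rates) - 1)]
print(rates)
print(drops)
```

With these values the first and last quarters each lose less learning rate than the two middle quarters, which matches the slow, fast, slow pattern described above.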