Elasticsearch (Heima)

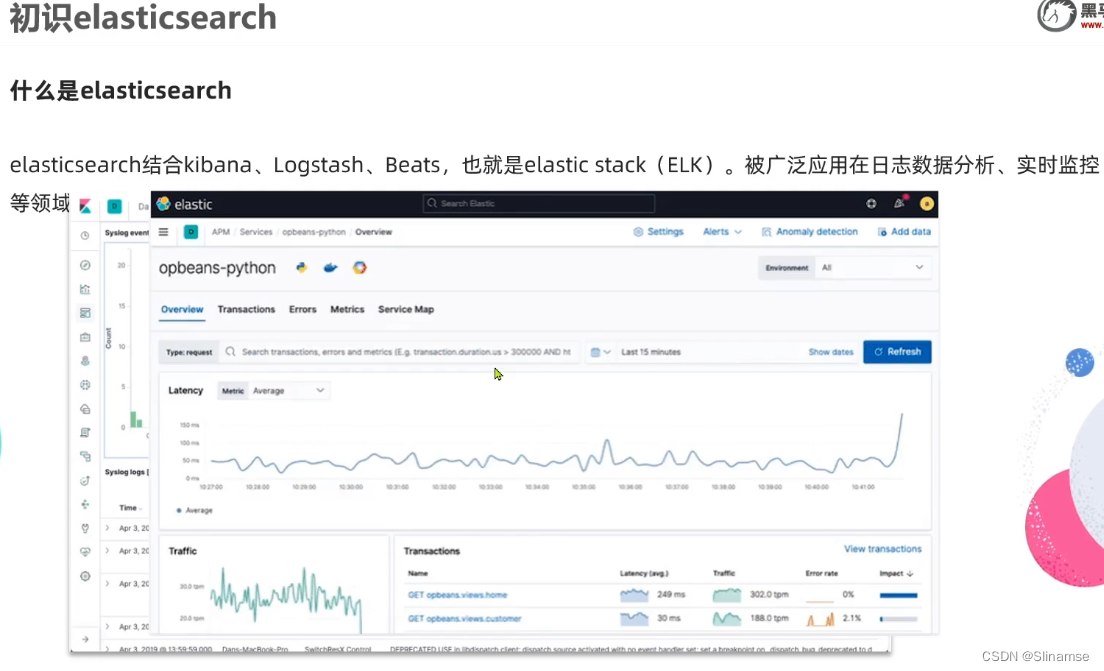

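Getting started with Elasticsearch

Installing Elasticsearch

1. Deploying a single-node ES

1.1. Create a network

We will also deploy a Kibana container later, so the ES and Kibana containers need to be able to reach each other. Create a network first:

    docker network create es-net

1.2. Load the image

We use the 7.12.1 image of Elasticsearch here. The image is very large, close to 1 GB, so pulling it yourself is not recommended.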

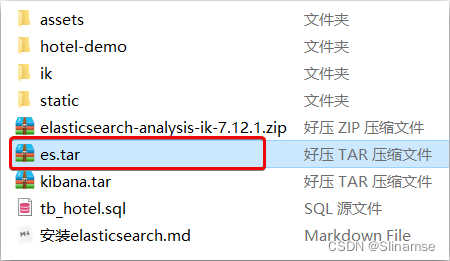

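The course materials provide the image as a tar package:

Upload it to the virtual machine, then load it with:

    # Load the image
    docker load -i es.tar

The Kibana tar package needs to be loaded the same way.

1.3. Run

Run the following docker command to deploy a single-node ES:

    docker run -d \
      --name es \
      -e "ES_JAVA_OPTS=-Xms512m -Xmx512m" \
      -e "discovery.type=single-node" \
      -v es-data:/usr/share/elasticsearch/data \
      -v es-plugins:/usr/share/elasticsearch/plugins \
      --privileged \
      --network es-net \
      -p 9200:9200 \
      -p 9300:9300 \
      elasticsearch:7.12.1

Command options explained (the list also covers a few optional settings that the command above does not use):

- -e "cluster.name=es-docker-cluster": sets the cluster name
- -e "http.host=0.0.0.0": the listen address, which allows access from outside the host
- -e "ES_JAVA_OPTS=-Xms512m -Xmx512m": JVM heap size
- -e "discovery.type=single-node": single-node (non-cluster) mode
- -v es-data:/usr/share/elasticsearch/data: mounts a volume bound to the ES data directory
- -v es-logs:/usr/share/elasticsearch/logs: mounts a volume bound to the ES log directory
- -v es-plugins:/usr/share/elasticsearch/plugins: mounts a volume bound to the ES plugin directory
- --privileged: grants access to the volumes
- --network es-net: joins the network named es-net
- -p 9200:9200: port mapping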

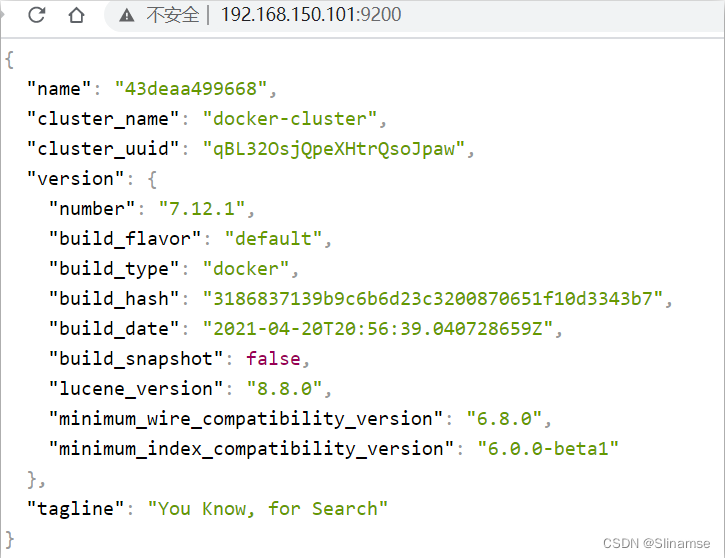

Open http://192.168.150.101:9200 in a browser to see the response from Elasticsearch:

2. Deploying Kibana

Kibana provides a visual interface for Elasticsearch, which is handy while learning.

2.1. Deploy

Run the following docker command to deploy Kibana:

    docker run -d \
      --name kibana \
      -e ELASTICSEARCH_HOSTS=http://es:9200 \
      --network=es-net \
      -p 5601:5601 \
      kibana:7.12.1

- --network es-net: joins the network named es-net, the same network as Elasticsearch
- -e ELASTICSEARCH_HOSTS=http://es:9200: sets the Elasticsearch address; because Kibana is on the same network as Elasticsearch, it can reach it directly by container name
- -p 5601:5601: port mapping

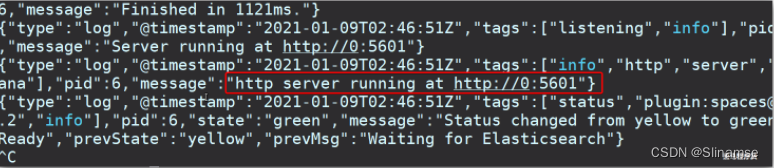

Kibana usually starts slowly, so wait a while. You can follow its logs with:

    docker logs -f kibana

When the following log output appears, startup has succeeded:

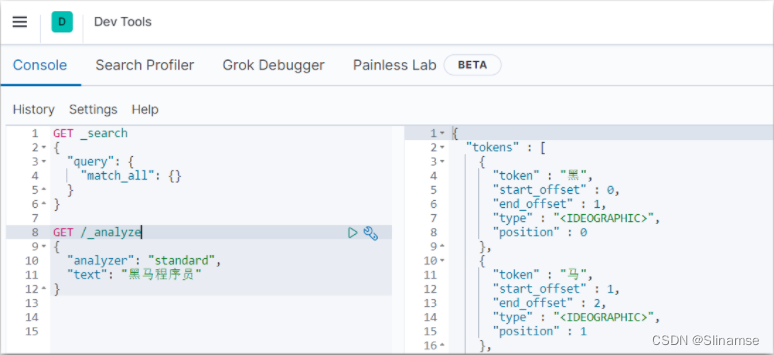

2.2. DevTools

Kibana provides a DevTools console:

In this console you can write DSL to operate on Elasticsearch, with auto-completion for DSL statements.

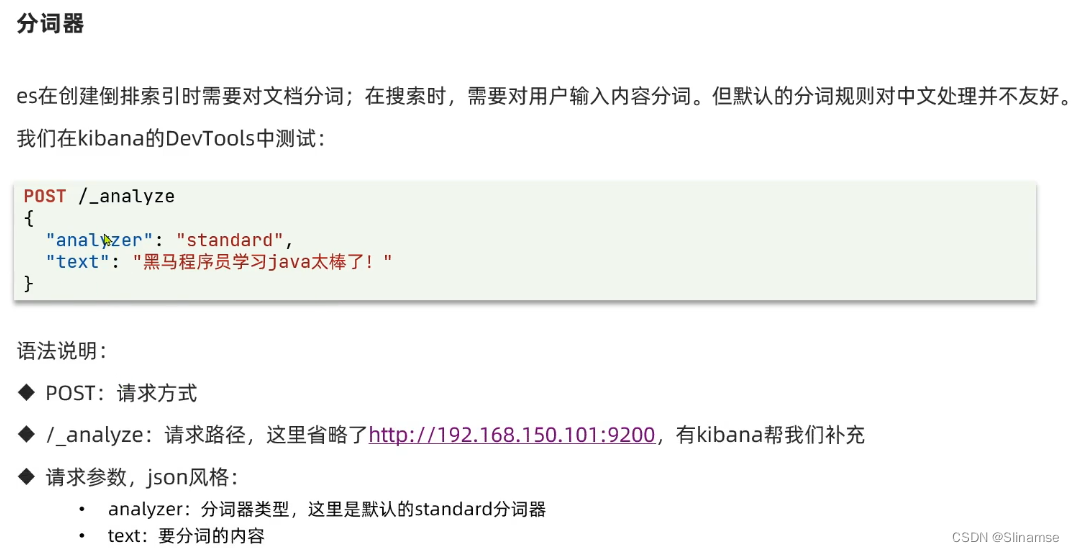

3. Installing the IK analyzer

3.1. Install the IK plugin online (slower)

    # Enter the container (it was started with --name es)
    docker exec -it es /bin/bash

    # Download and install online
    ./bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.12.1/elasticsearch-analysis-ik-7.12.1.zip

    # Exit
    exit

    # Restart the container
    docker restart es

3.2. Install the IK plugin offline (recommended)

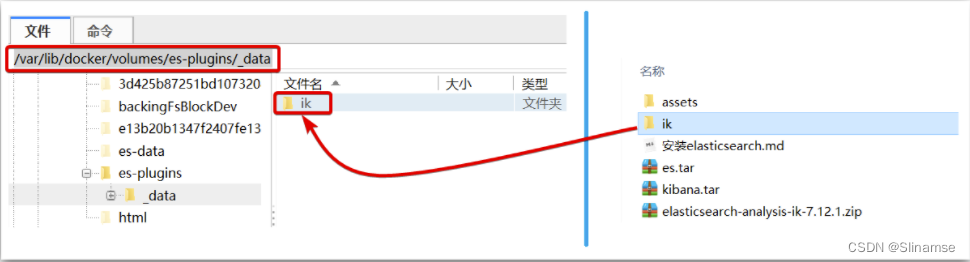

1) Inspect the volume directory

Installing a plugin requires knowing where the Elasticsearch plugins directory is. Because we mounted it as a volume, inspect the volume with:

    docker volume inspect es-plugins

Output:

    [
      {
        "CreatedAt": "2022-05-06T10:06:34+08:00",
        "Driver": "local",
        "Labels": null,
        "Mountpoint": "/var/lib/docker/volumes/es-plugins/_data",
        "Name": "es-plugins",
        "Options": null,
        "Scope": "local"
      }
    ]

This shows that the plugins directory is mounted at /var/lib/docker/volumes/es-plugins/_data.

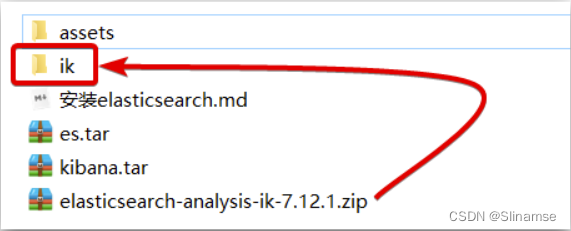

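2) Unpack the analyzer package

Unpack the IK analyzer from the course materials and rename the folder to ik.

3) Upload it to the plugin volume of the es container

That is, into /var/lib/docker/volumes/es-plugins/_data:

4) Restart the container

    # Restart the container
    docker restart es

    # Check the es logs
    docker logs -f es

5) Test: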

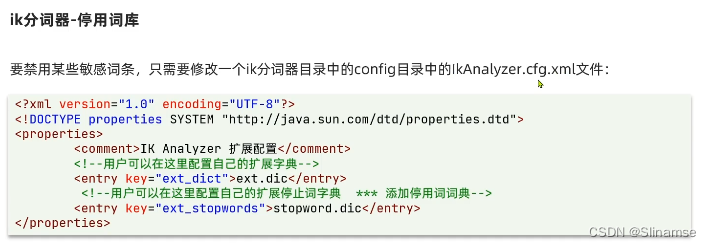

The IK analyzer has two modes:

- ik_smart: coarsest segmentation (fewest tokens)
- ik_max_word: finest segmentation (most tokens)

Test with ik_max_word:

    GET /_analyze
    {
      "analyzer": "ik_max_word",
      "text": "黑马程序员学习java太棒了"
    }

Result:

    {
      "tokens" : [
        {"token" : "黑马", "start_offset" : 0, "end_offset" : 2, "type" : "CN_WORD", "position" : 0},
        {"token" : "程序员", "start_offset" : 2, "end_offset" : 5, "type" : "CN_WORD", "position" : 1},
        {"token" : "程序", "start_offset" : 2, "end_offset" : 4, "type" : "CN_WORD", "position" : 2},
        {"token" : "员", "start_offset" : 4, "end_offset" : 5, "type" : "CN_CHAR", "position" : 3},
        {"token" : "学习", "start_offset" : 5, "end_offset" : 7, "type" : "CN_WORD", "position" : 4},
        {"token" : "java", "start_offset" : 7, "end_offset" : 11, "type" : "ENGLISH", "position" : 5},
        {"token" : "太棒了", "start_offset" : 11, "end_offset" : 14, "type" : "CN_WORD", "position" : 6},
        {"token" : "太棒", "start_offset" : 11, "end_offset" : 13, "type" : "CN_WORD", "position" : 7},
        {"token" : "了", "start_offset" : 13, "end_offset" : 14, "type" : "CN_CHAR", "position" : 8}
      ]
    }

    GET /hotel/_search
    {
      "query": {"match_all": {}},
      "sort": [
        {"score": {"order": "desc"}},
        {"price": {"order": "asc"}}
      ]
    }
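The two-level sort above (score descending, then price ascending for ties) can be pictured with a plain Java comparator. This is only a sketch of the ordering rule, not of how Elasticsearch sorts internally; the `Hotel` class and the sample values are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

class HotelSortSketch {
    // Hypothetical stand-in for a hotel document; only the sort fields are modeled.
    static class Hotel {
        final String name;
        final int score;
        final int price;

        Hotel(String name, int score, int price) {
            this.name = name;
            this.score = score;
            this.price = price;
        }
    }

    // Mirrors "sort": [{"score": "desc"}, {"price": "asc"}]:
    // the second key only matters when the first key is equal.
    static List<Hotel> sort(List<Hotel> hotels) {
        List<Hotel> copy = new ArrayList<>(hotels);
        copy.sort(Comparator.comparingInt((Hotel h) -> h.score).reversed()
                .thenComparingInt(h -> h.price));
        return copy;
    }

    static List<String> names(List<Hotel> hotels) {
        List<String> out = new ArrayList<>();
        for (Hotel h : hotels) {
            out.add(h.name);
        }
        return out;
    }

    public static void main(String[] args) {
        List<Hotel> hotels = Arrays.asList(
                new Hotel("A", 45, 300),
                new Hotel("B", 47, 500),
                new Hotel("C", 47, 200));
        System.out.println(names(sort(hotels))); // prints [C, B, A]
    }
}
```

B and C tie on score, so the cheaper C comes first; A has the lowest score and comes last.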

    GET /hotel/_search
    {
      "query": {"match_all": {}},
      "sort": [
        {
          "_geo_distance": {
            "location": "31.034661,121.612282",
            "order": "asc",
            "unit": "km"
          }
        }
      ]
    }
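To get a feel for the value the `_geo_distance` sort orders by, the sketch below computes a great-circle distance in kilometres with the haversine formula. It is an illustration only: it is not Elasticsearch's own implementation, and the mean Earth radius of 6371 km is an assumption of this sketch.

```java
class GeoDistanceSketch {
    // Assumed mean Earth radius; real implementations may use a different model.
    static final double EARTH_RADIUS_KM = 6371.0;

    // Great-circle distance between two (lat, lon) points given in degrees.
    static double haversineKm(double lat1, double lon1, double lat2, double lon2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLon = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                * Math.sin(dLon / 2) * Math.sin(dLon / 2);
        return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(a));
    }

    public static void main(String[] args) {
        // The query point from the DSL above and a made-up hotel location.
        double km = haversineKm(31.034661, 121.612282, 31.2304, 121.4737);
        System.out.println("distance in km: " + km);
    }
}
```

With an ascending sort, hotels with the smallest such distance to the query point come first, and the distance is returned as the hit's sort value.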

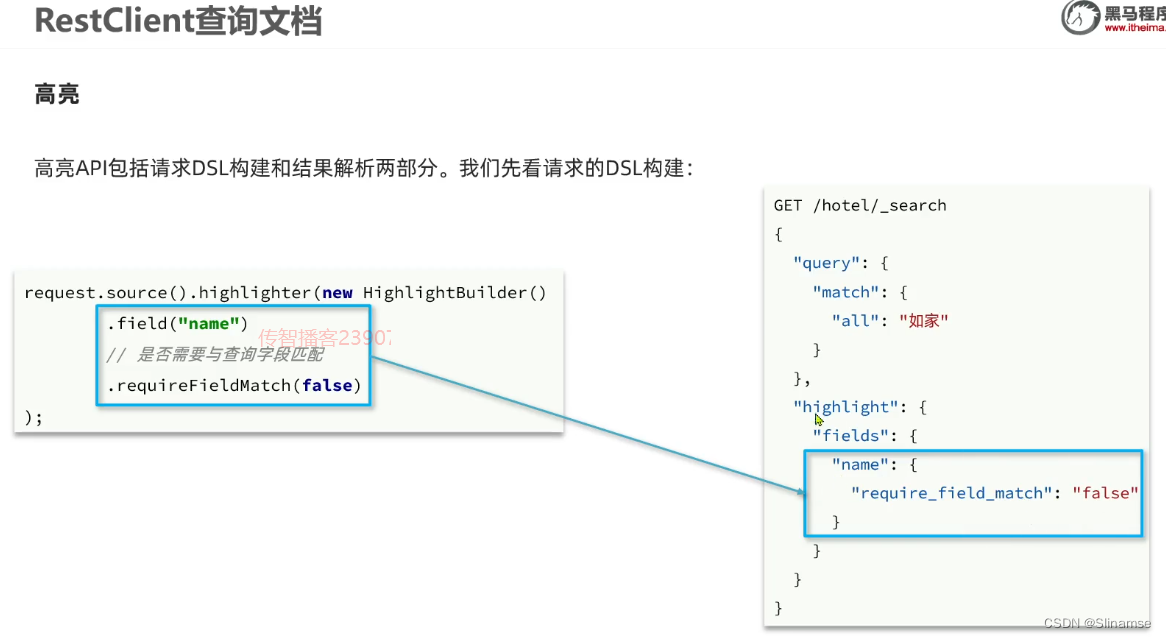

    # Highlight query. By default, the field being searched must match the highlighted field
    GET /hotel/_search
    {
      "query": {"match": {"all": "如家"}},
      "highlight": {
        "fields": {
          "name": {"require_field_match": "false"}
        }
      }
    }

controller

    @RestController
    @RequestMapping("/hotel")
    public class HotelController {

        @Autowired
        private IHotelService hotelService;

        @PostMapping("/list")
        public PageResult list(@RequestBody RequestParams params) throws IOException {
            System.out.println(params);
            PageResult pageResult = hotelService.search(params);
            return pageResult;
        }
    }

service

@Service

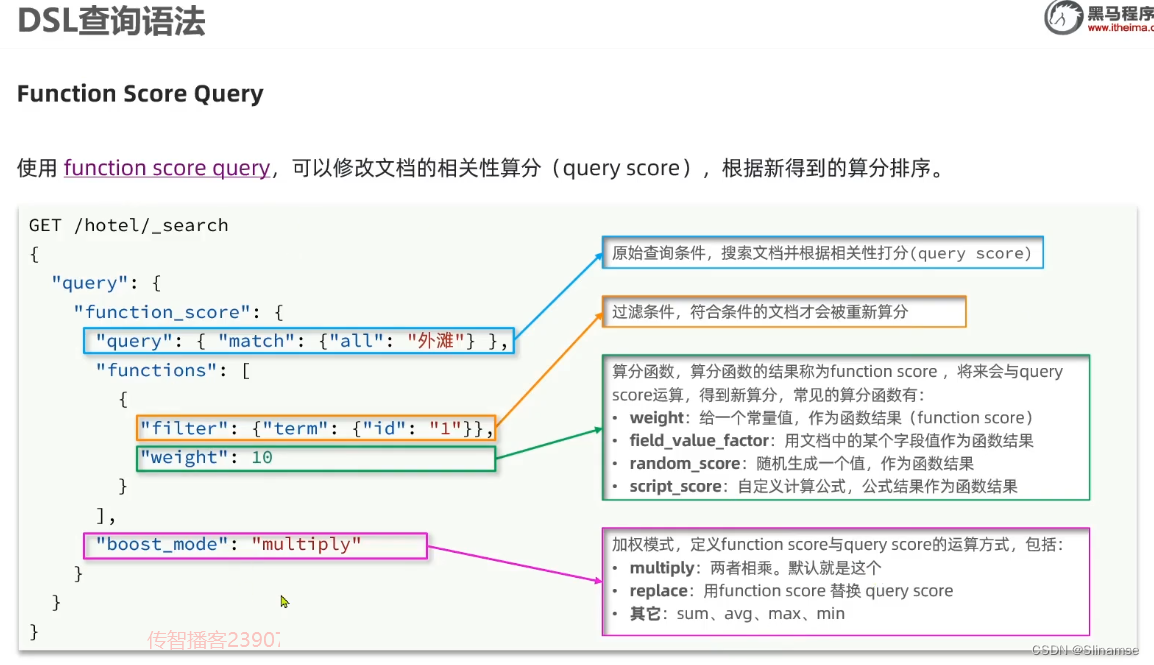

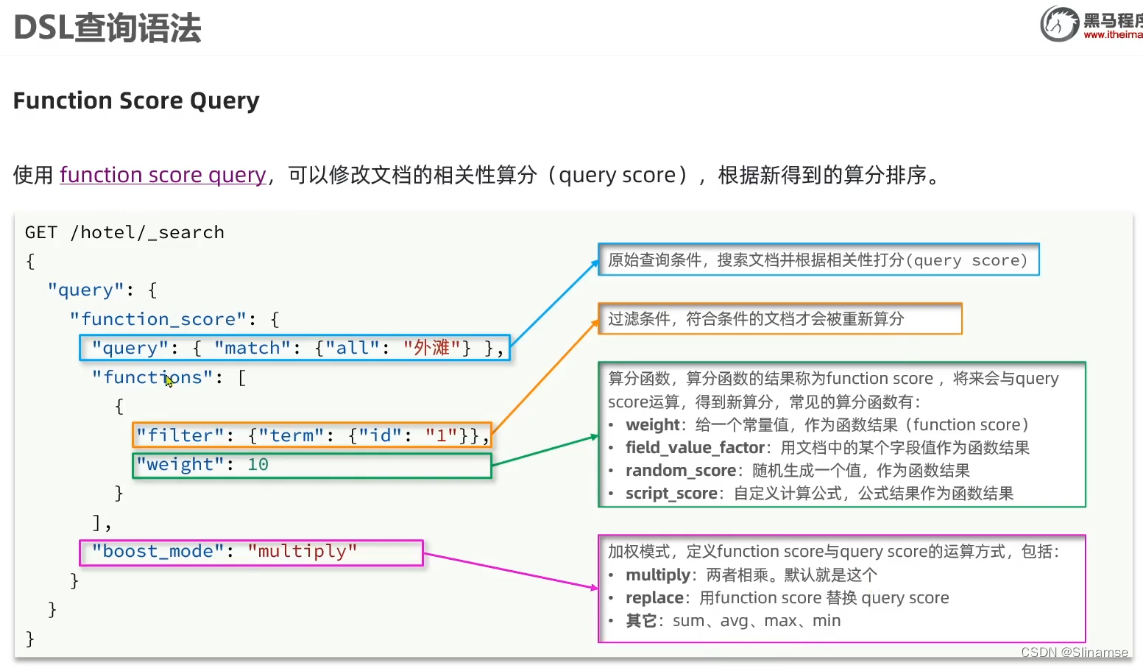

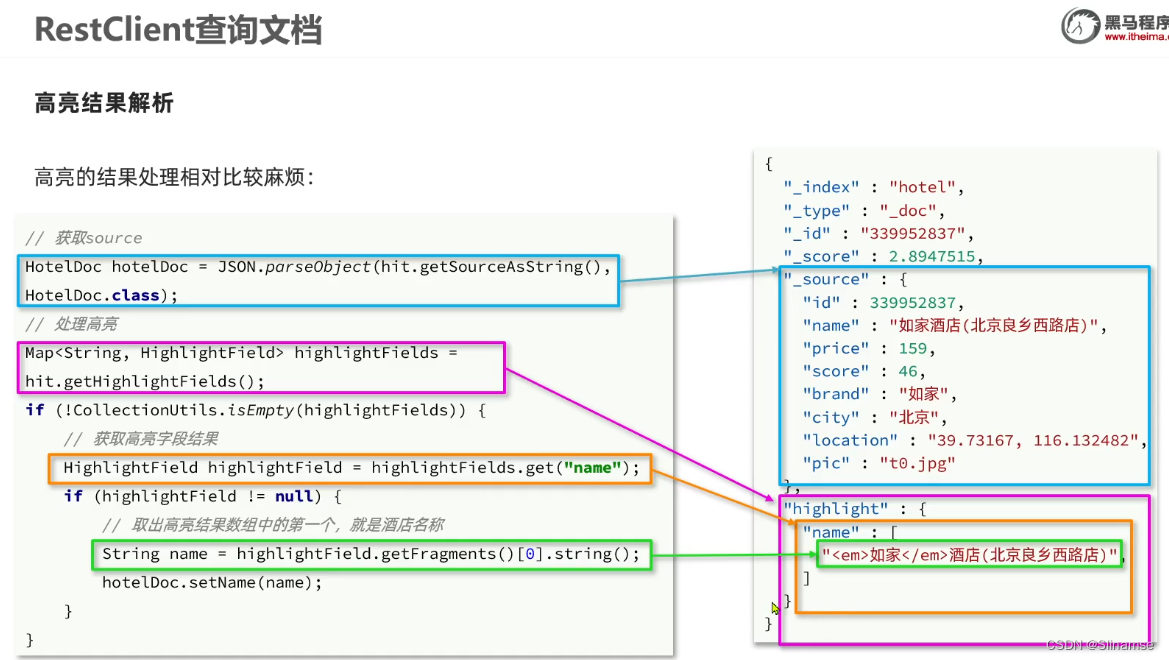

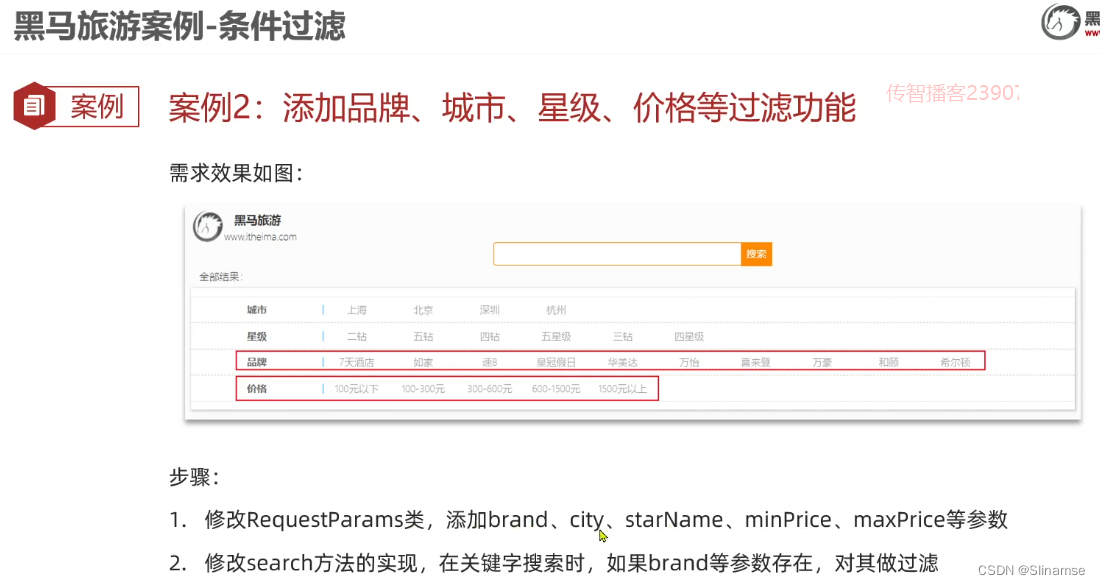

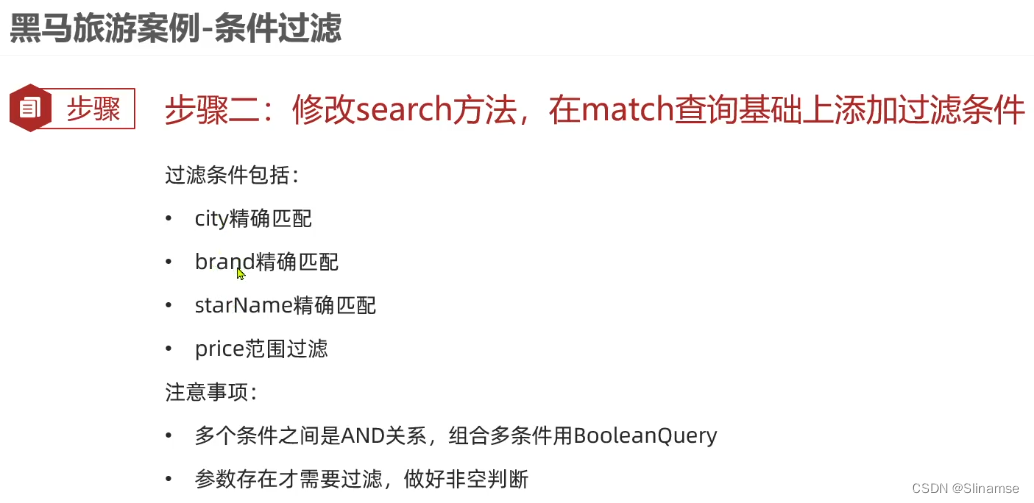

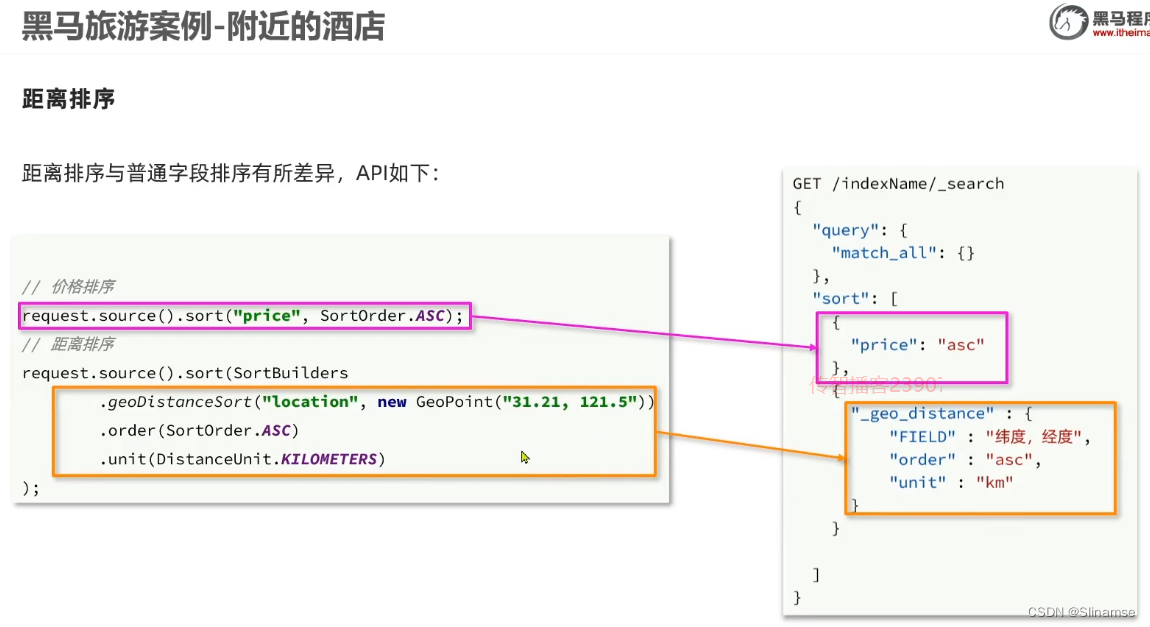

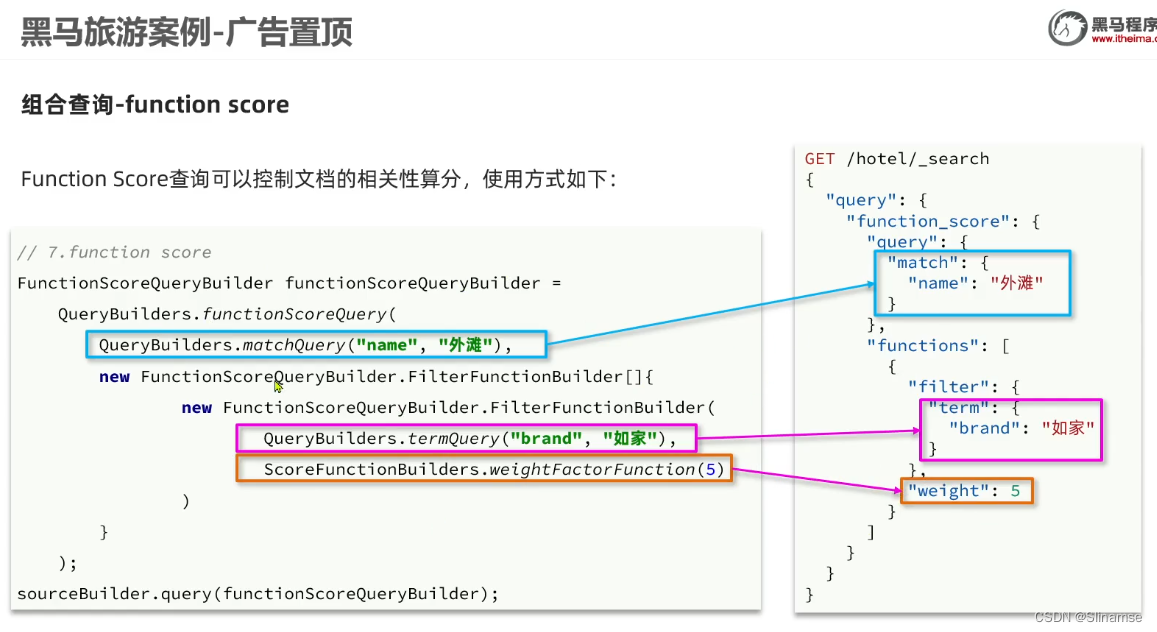

public class HotelService extends ServiceImpl<HotelMapper, Hotel> implements IHotelService {@Autowiredprivate RestHighLevelClient client;@Overridepublic PageResult search(RequestParams params) throws IOException {//连接elasticsearchthis.client = new RestHighLevelClient(RestClient.builder(HttpHost.create("http://192.168.136.150:9200")));//1.准备ResquestSearchRequest request = new SearchRequest("hotel");//2.组织DSL参数buidBasicQuery(params, request);//地理位置排序String location = params.getLocation();if(!StringUtils.isEmpty(location)){request.source().sort(SortBuilders.geoDistanceSort("location",new GeoPoint(location)).order(SortOrder.ASC).unit(DistanceUnit.KILOMETERS));}String sortBy = params.getSortBy();if(!sortBy.equals("default")){request.source().sort(sortBy);}//分页Integer page = params.getPage();Integer size = params.getSize();if(page != null&& size !=null){request.source().from((page-1)*size).size(size);}//地理位置//3.发送请求SearchResponse response = client.search(request, RequestOptions.DEFAULT);System.out.println(response);//4.解析结果PageResult pageResult = handleResponse(response);//关闭连接this.client.close();return pageResult;}private void buidBasicQuery(RequestParams params, SearchRequest request) {//过滤条件BoolQueryBuilder boolQuery = QueryBuilders.boolQuery();String key = params.getKey();if(!StringUtils.isEmpty(key)){boolQuery.must(QueryBuilders.matchQuery("all",key));}else{boolQuery.must(QueryBuilders.matchAllQuery());}//品牌String brand = params.getBrand();if(!StringUtils.isEmpty(brand)){boolQuery.must(QueryBuilders.termQuery("brand",brand));}//城市String city = params.getCity();if(!StringUtils.isEmpty(city)){boolQuery.must(QueryBuilders.termQuery("city",city));}//星级String starName = params.getStarName();if(!StringUtils.isEmpty(starName)){boolQuery.must(QueryBuilders.termQuery("starName",starName));}//价钱Integer minPrice = params.getMinPrice();Integer maxPrice = params.getMaxPrice();if(minPrice != null && maxPrice != 
null){boolQuery.filter(QueryBuilders.rangeQuery("price").gte(minPrice).lte(maxPrice));}//算分控制FunctionScoreQueryBuilder functionScoreQuery =QueryBuilders.functionScoreQuery(//原始查询boolQuery,//function score数组new FunctionScoreQueryBuilder.FilterFunctionBuilder[]{//其中的一个function score元素new FunctionScoreQueryBuilder.FilterFunctionBuilder(//过滤条件QueryBuilders.termQuery("isAD",true),//算分函数ScoreFunctionBuilders.weightFactorFunction(10))});request.source().query(functionScoreQuery);}//解析结果函数private PageResult handleResponse(SearchResponse response){SearchHits searchHits = response.getHits();//1.查询总条数Long total = searchHits.getTotalHits().value;//2.查询的结果数组SearchHit[] hits = searchHits.getHits();//3.遍历List<HotelDoc> hotels = new ArrayList<>();for (SearchHit hit : hits) {//获取文档sourceString json = hit.getSourceAsString();//反序列化HotelDoc hotelDoc = JSON.parseObject(json,HotelDoc.class);//获取排序值Object[] sortValues = hit.getSortValues();if(sortValues.length > 0){hotelDoc.setDistance(sortValues[0]);}//获取高亮结果Map<String, HighlightField> highlightFields = hit.getHighlightFields();if(!CollectionUtils.isEmpty(highlightFields)){//根据字段名获取高亮结果HighlightField highlightField = highlightFields.get("name");if(highlightField != null){//获取高亮值String name = highlightField.getFragments()[0].string();hotelDoc.setName(name);}}//打印hotels.add(hotelDoc);}//4.构造返回值PageResult pageResult = new PageResult(total,hotels);return pageResult;}

}pojo

    @Data
    @NoArgsConstructor
    public class HotelDoc {
        private Long id;
        private String name;
        private String address;
        private Integer price;
        private Integer score;
        private String brand;
        private String city;
        private String starName;
        private String business;
        private String location;
        private String pic;
        private Object distance;
        private Boolean isAD;

        public HotelDoc(Hotel hotel) {
            this.id = hotel.getId();
            this.name = hotel.getName();
            this.address = hotel.getAddress();
            this.price = hotel.getPrice();
            this.score = hotel.getScore();
            this.brand = hotel.getBrand();
            this.city = hotel.getCity();
            this.starName = hotel.getStarName();
            this.business = hotel.getBusiness();
            this.location = hotel.getLatitude() + ", " + hotel.getLongitude();
            this.pic = hotel.getPic();
        }
    }
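The `isAD` field is what the function score query in the service filters on: documents with `isAD = true` get a weight of 10. As a rough sketch of the effect, assuming the default settings in which the function score is multiplied with the query score and a document that matches no function gets a neutral factor of 1, the final score could be pictured like this (the class and method names are made up for illustration):

```java
class FunctionScoreSketch {
    // The weight passed to weightFactorFunction(10) in the service code.
    static final float AD_WEIGHT = 10f;

    // Assumes the default multiply behaviour: final score = query score * function score.
    // Documents that do not match the isAD filter keep their original score.
    static float finalScore(float queryScore, boolean isAD) {
        float functionScore = isAD ? AD_WEIGHT : 1f;
        return queryScore * functionScore;
    }

    public static void main(String[] args) {
        System.out.println(finalScore(2.0f, true));  // prints 20.0
        System.out.println(finalScore(2.0f, false)); // prints 2.0
    }
}
```

This is why advertised hotels float to the top of the result list when no explicit sort is requested.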

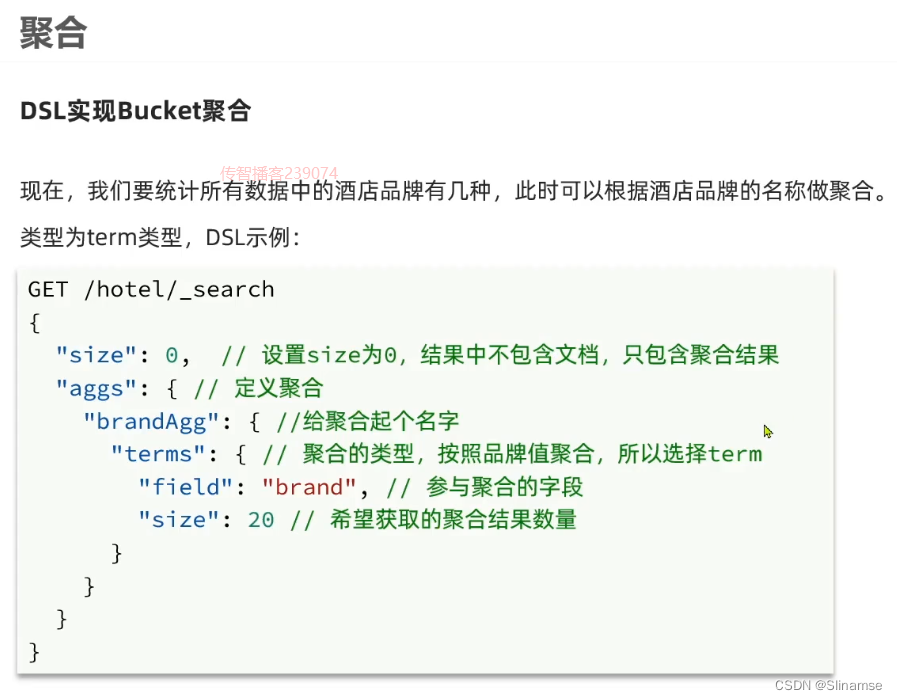

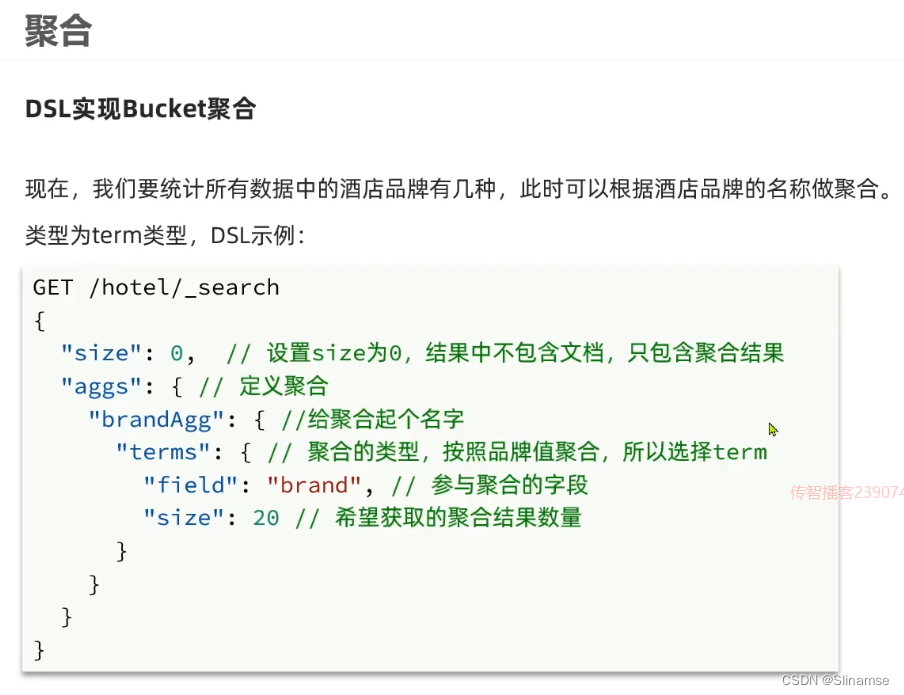

    # Metrics aggregation
    GET /hotel/_search
    {
      "size": 0,
      "aggs": {
        "brandAgg": {
          "terms": {
            "field": "brand",
            "size": 20,
            "order": {"scoreAgg.avg": "desc"}
          },
          "aggs": {
            "scoreAgg": {
              "stats": {"field": "score"}
            }
          }
        }
      }
    }
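Conceptually, the request above buckets hotels by brand, computes score statistics per bucket, and orders the buckets by average score. The following in-memory sketch shows the same idea with Java streams; the `Hotel` class and sample data are hypothetical, and only the average (not the full stats) is computed.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

class BrandAggSketch {
    // Hypothetical stand-in for a hotel document.
    static class Hotel {
        final String brand;
        final int score;

        Hotel(String brand, int score) {
            this.brand = brand;
            this.score = score;
        }
    }

    // Bucket by brand (terms), average the score per bucket (part of stats),
    // order buckets by that average descending, and keep at most `size` buckets.
    static List<String> brandsByAvgScore(List<Hotel> hotels, int size) {
        Map<String, Double> avgByBrand = hotels.stream()
                .collect(Collectors.groupingBy(
                        (Hotel h) -> h.brand,
                        Collectors.averagingInt((Hotel h) -> h.score)));
        return avgByBrand.entrySet().stream()
                .sorted(Map.Entry.<String, Double>comparingByValue().reversed())
                .limit(size)
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Hotel> hotels = Arrays.asList(
                new Hotel("A", 40), new Hotel("A", 50),
                new Hotel("B", 48), new Hotel("C", 30));
        System.out.println(brandsByAvgScore(hotels, 2)); // prints [B, A]
    }
}
```

Brand B averages 48 and brand A averages 45, so with a size of 2 the lowest-scoring brand C is dropped.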

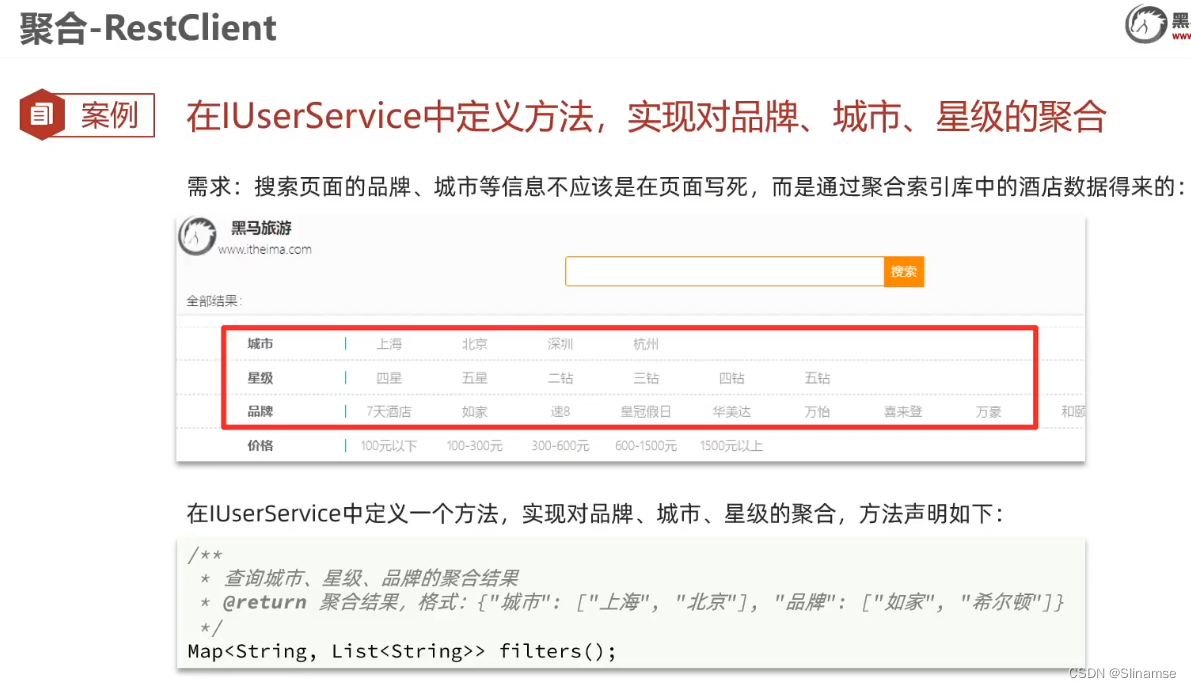

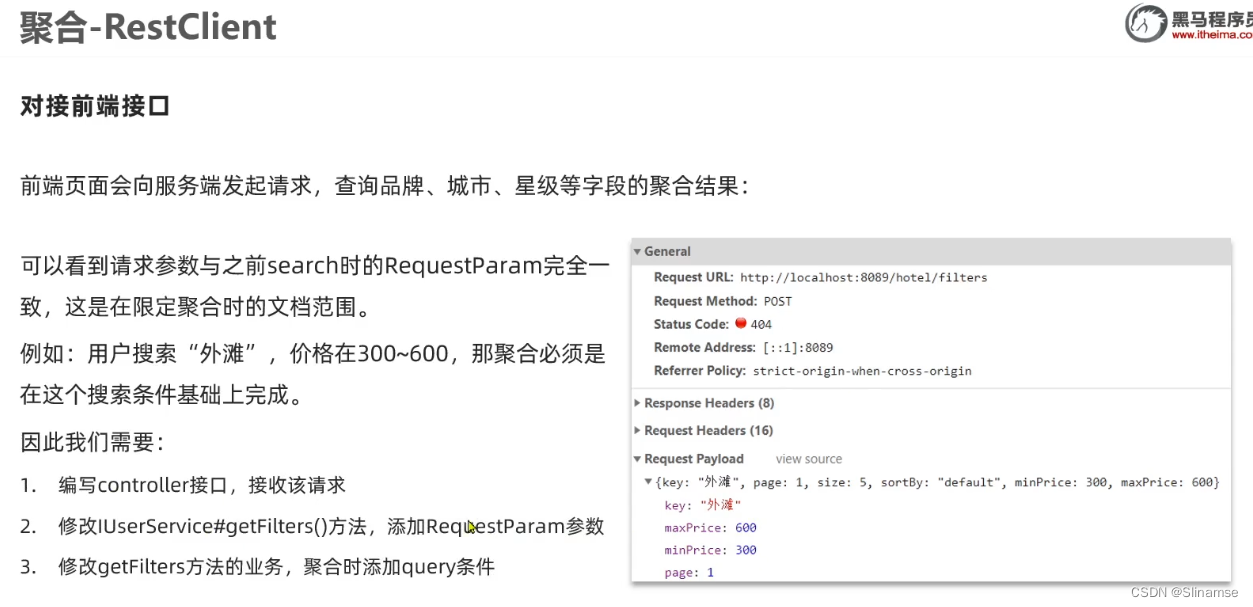

Controller

    @PostMapping("/filters")
    public Map<String, List<String>> filters(@RequestBody RequestParams params) throws IOException {
        System.out.println("filter:" + params);
        Map<String, List<String>> map = hotelService.filters(params);
        System.out.println("Map:" + map);
        return map;
    }

Service

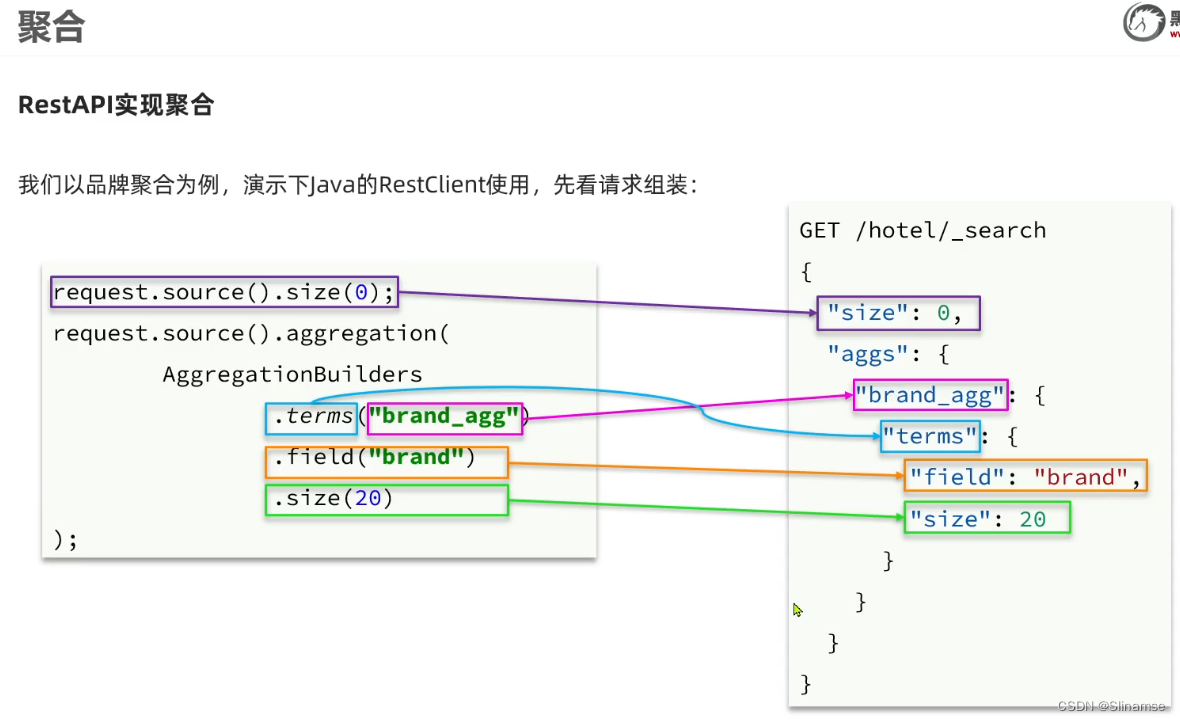

    @Override
    public Map<String, List<String>> filters(RequestParams params) throws IOException {
        // 1. Create the request
        SearchRequest request = new SearchRequest("hotel");
        // 2. Build the DSL
        // 2.1 Query
        buidBasicQuery(params, request);
        // 2.2 Set size to 0 so that no documents are returned
        request.source().size(0);
        // 2.3 Aggregations
        buildAggregation(request);
        // 3. Send the request
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 4. Parse the result
        Map<String, List<String>> map = new HashMap<>();
        // 4.1 Get the aggregation result for cities
        List<String> cityList = getAggByName(response, "cityAgg");
        map.put("城市", cityList);
        // 4.2 Get the aggregation result for star ratings
        List<String> starList = getAggByName(response, "starAgg");
        map.put("星级", starList);
        // 4.3 Get the aggregation result for brands
        List<String> brandList = getAggByName(response, "brandAgg");
        map.put("品牌", brandList);
        return map;
    }

    private void buildAggregation(SearchRequest request) {
        // Aggregate by city
        request.source().aggregation(AggregationBuilders.terms("cityAgg").field("city").size(100));
        // Aggregate by star rating
        request.source().aggregation(AggregationBuilders.terms("starAgg").field("starName").size(100));
        // Aggregate by brand
        request.source().aggregation(AggregationBuilders.terms("brandAgg").field("brand").size(100));
    }

    private List<String> getAggByName(SearchResponse response, String aggName) {
        Aggregations aggregations = response.getAggregations();
        Terms terms = aggregations.get(aggName);
        // Get the buckets
        List<? extends Terms.Bucket> buckets = terms.getBuckets();
        // Iterate and collect each bucket key
        List<String> list = new ArrayList<>();
        for (Terms.Bucket bucket : buckets) {
            String key = bucket.getKeyAsString();
            list.add(key);
        }
        return list;
    }

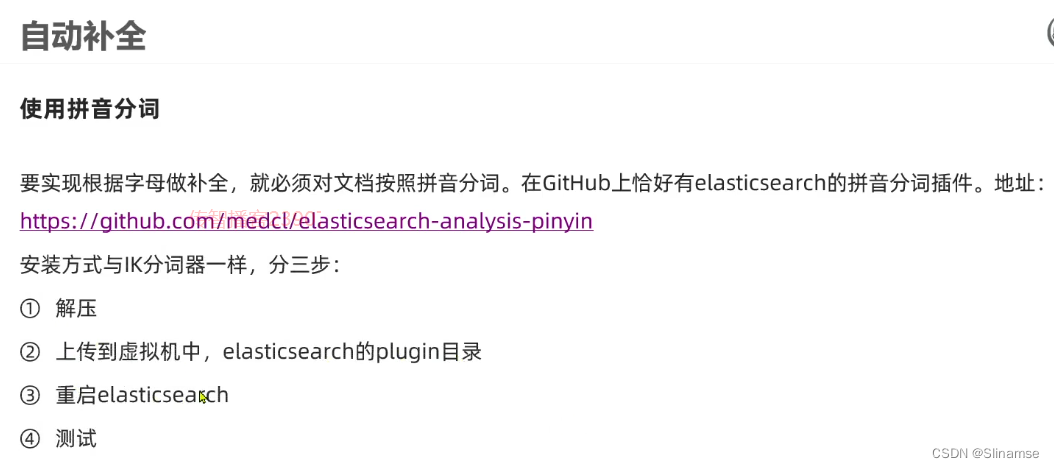

    POST /_analyze
    {
      "text": ["如家酒店还不错"],
      "analyzer": "pinyin"
    }

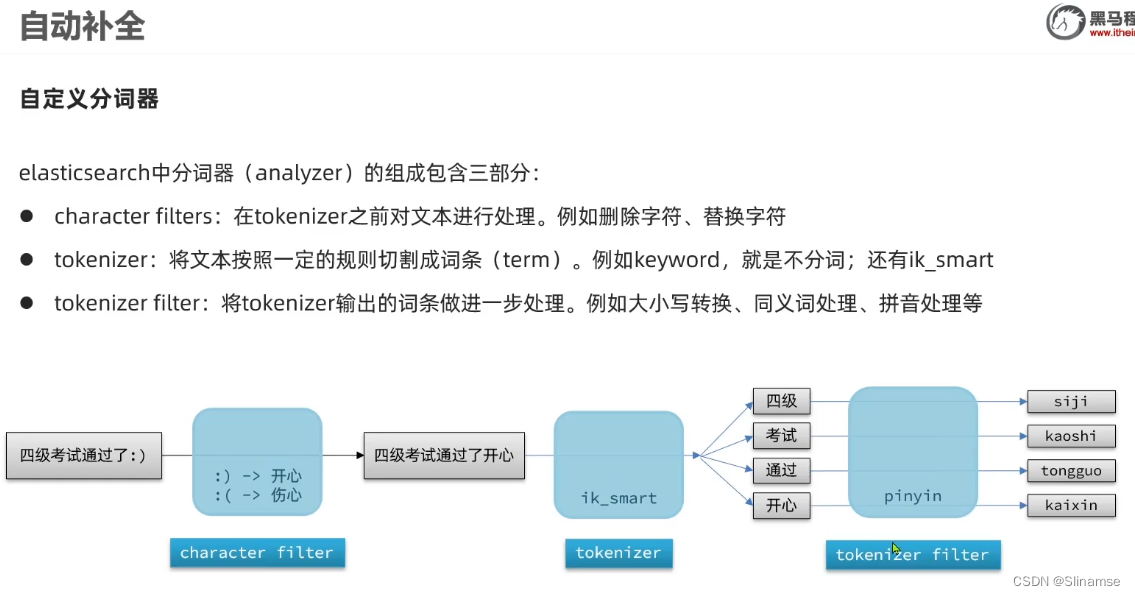

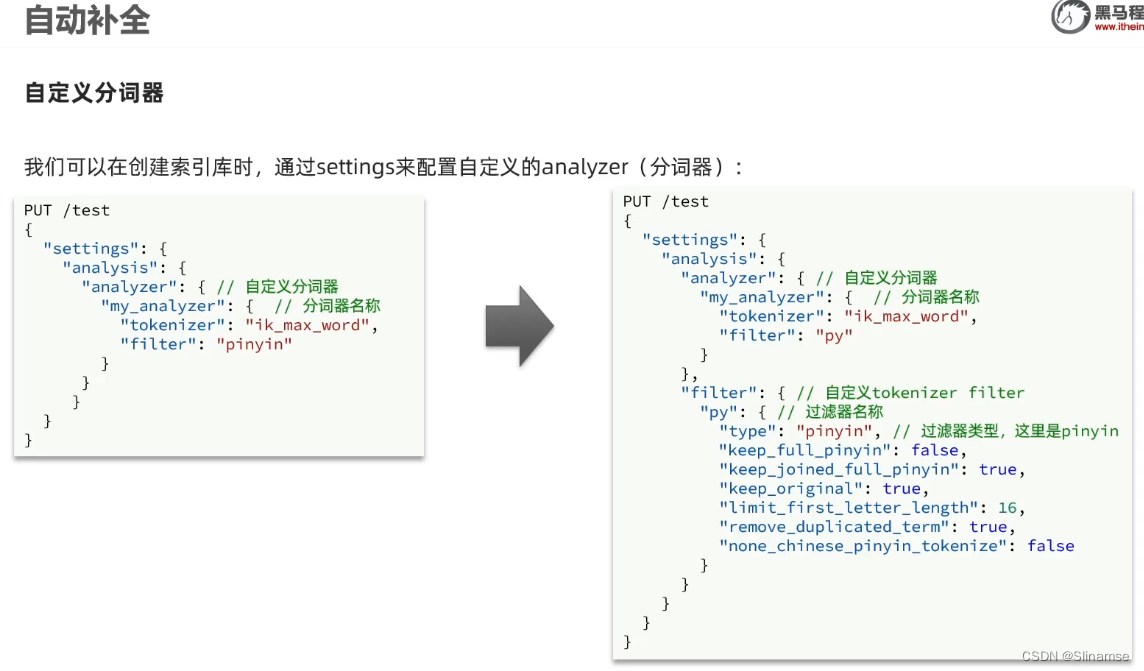

    # Custom pinyin analyzer
    PUT /test
    {
      "settings": {
        "analysis": {
          "analyzer": {
            "my_analyzer": {
              "tokenizer": "ik_max_word",
              "filter": "py"
            }
          },
          "filter": {
            "py": {
              "type": "pinyin",
              "keep_full_pinyin": false,
              "keep_joined_full_pinyin": true,
              "keep_original": true,
              "limit_first_letter_length": 16,
              "remove_duplicated_term": true,
              "none_chinese_pinyin_tokenize": false
            }
          }
        }
      },
      "mappings": {
        "properties": {
          "name": {
            "type": "text",
            "analyzer": "my_analyzer"
          }
        }
      }
    }

    # Test the custom analyzer (the test index must exist first)
    POST /test/_analyze
    {
      "text": ["如家酒店还不错"],
      "analyzer": "my_analyzer"
    }

    POST /test/_doc/1
    {
      "id": 1,
      "name": "狮子"
    }

    POST /test/_doc/2
    {
      "id": 2,
      "name": "虱子"
    }

    GET /test/_search
    {
      "query": {"match": {"name": "shizi"}}
    }
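The two documents above are homophones, so with the pinyin filter both produce the same pinyin tokens. The toy inverted index below sketches why this matters: if the search text is also run through the pinyin analyzer, a search for one word matches the other as well, whereas analyzing the search text without pinyin matches only the intended document. The exact tokens shown are an assumption based on the filter settings (original term, joined full pinyin, first letters), not output captured from Elasticsearch.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class PinyinSearchSketch {
    static final String LION = "\u72ee\u5b50";  // 狮子, document 1
    static final String LOUSE = "\u8671\u5b50"; // 虱子, document 2

    // token -> ids of the documents that contain the token
    static final Map<String, Set<Integer>> INDEX = new HashMap<>();

    static {
        // Assumed index-time tokens: original term, joined pinyin, first letters.
        index(1, LION, "shizi", "sz");
        index(2, LOUSE, "shizi", "sz");
    }

    static void index(int docId, String... tokens) {
        for (String token : tokens) {
            INDEX.computeIfAbsent(token, k -> new TreeSet<>()).add(docId);
        }
    }

    // A match query hits every document containing at least one query token.
    static Set<Integer> search(String... queryTokens) {
        Set<Integer> hits = new TreeSet<>();
        for (String token : queryTokens) {
            hits.addAll(INDEX.getOrDefault(token, Collections.emptySet()));
        }
        return hits;
    }

    public static void main(String[] args) {
        // Search text analyzed WITH pinyin: the homophone also matches.
        System.out.println(search(LION, "shizi", "sz")); // prints [1, 2]
        // Search text analyzed WITHOUT pinyin: only the intended document matches.
        System.out.println(search(LION)); // prints [1]
    }
}
```

This is the motivation for giving a field a separate `search_analyzer` such as `ik_smart`, so that pinyin tokens are produced when indexing but not when a user searches with Chinese text.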

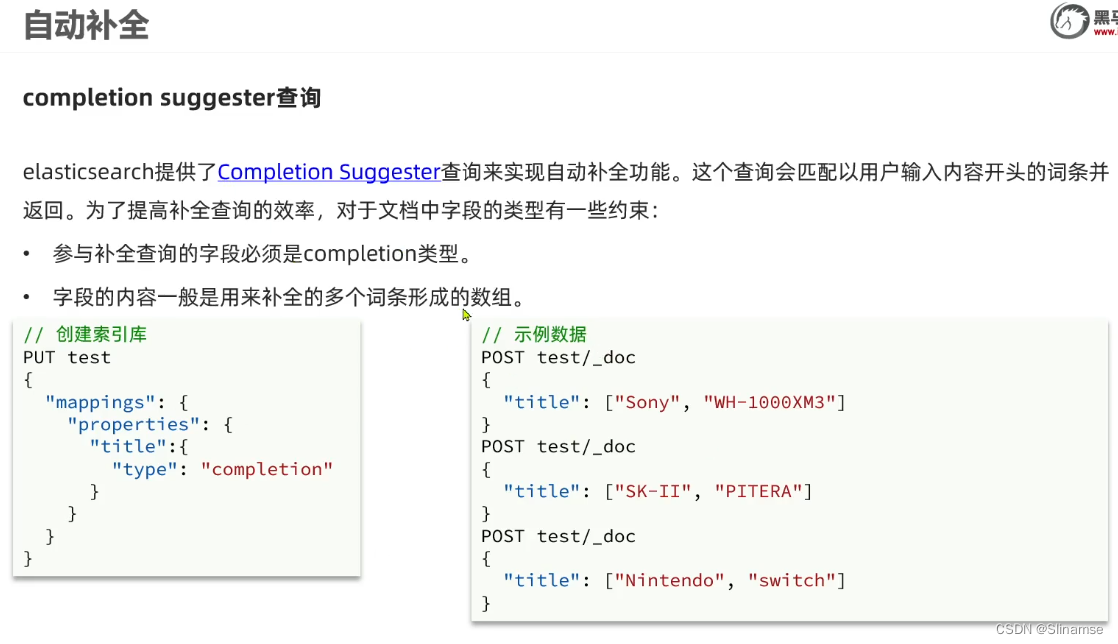

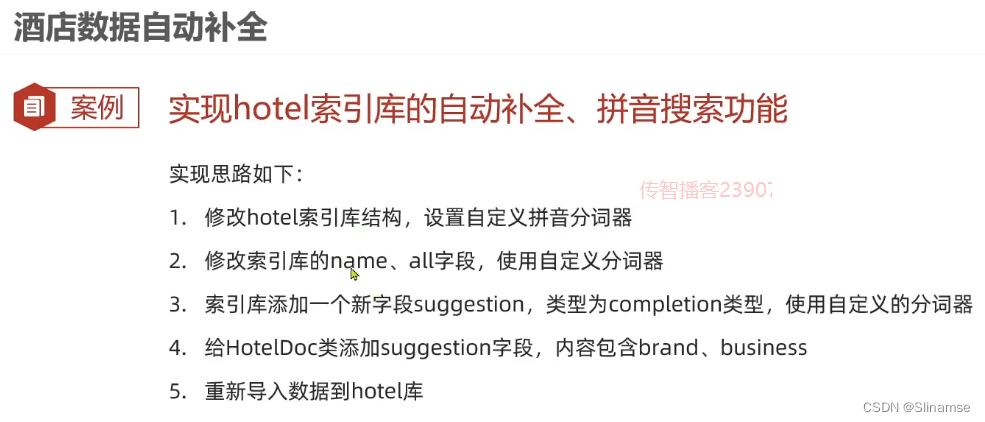

// 酒店数据索引库

PUT /hotel

{"settings": {"analysis": {"analyzer": {"text_anlyzer": {"tokenizer": "ik_max_word","filter": "py"},"completion_analyzer": {"tokenizer": "keyword","filter": "py"}},"filter": {"py": {"type": "pinyin","keep_full_pinyin": false,"keep_joined_full_pinyin": true,"keep_original": true,"limit_first_letter_length": 16,"remove_duplicated_term": true,"none_chinese_pinyin_tokenize": false}}}},"mappings": {"properties": {"id":{"type": "keyword"},"name":{"type": "text","analyzer": "text_anlyzer","search_analyzer": "ik_smart","copy_to": "all"},"address":{"type": "keyword","index": false},"price":{"type": "integer"},"score":{"type": "integer"},"brand":{"type": "keyword","copy_to": "all"},"city":{"type": "keyword"},"starName":{"type": "keyword"},"business":{"type": "keyword","copy_to": "all"},"location":{"type": "geo_point"},"pic":{"type": "keyword","index": false},"all":{"type": "text","analyzer": "text_anlyzer","search_analyzer": "ik_smart"},"suggestion":{"type": "completion","analyzer": "completion_analyzer"}}}

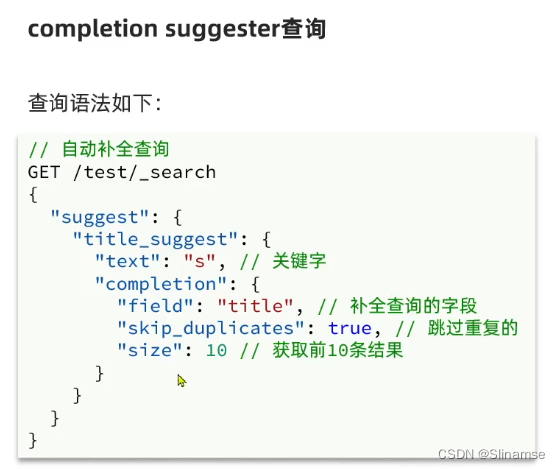

}GET /hotel/_search

{"suggest": {"title_suggest": {"text": "地", "completion": {"field": "suggestion", "skip_duplicates": true, "size": 10 }}}

}
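The completion suggester used above returns entries whose suggestion input starts with the typed text, optionally skipping duplicates and capping the result count. A minimal in-memory sketch of that behaviour follows. It is only an illustration: the real suggester matches analyzed tokens (here produced by `completion_analyzer`) and ranks by weight, whereas this sketch compares plain strings and sorts alphabetically purely to make the output deterministic. The sample inputs are made up.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class CompletionSketch {
    // Prefix match, drop duplicates (like skip_duplicates), cap at `size`.
    static List<String> suggest(List<String> inputs, String prefix, int size) {
        return inputs.stream()
                .filter(s -> s.startsWith(prefix))
                .distinct()
                .sorted() // assumption for determinism; not how Elasticsearch ranks
                .limit(size)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> inputs = Arrays.asList("hilton", "hanting", "hilton", "home inn", "7 days");
        System.out.println(suggest(inputs, "h", 10)); // prints [hanting, hilton, home inn]
        System.out.println(suggest(inputs, "hi", 10)); // prints [hilton]
    }
}
```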

Controller


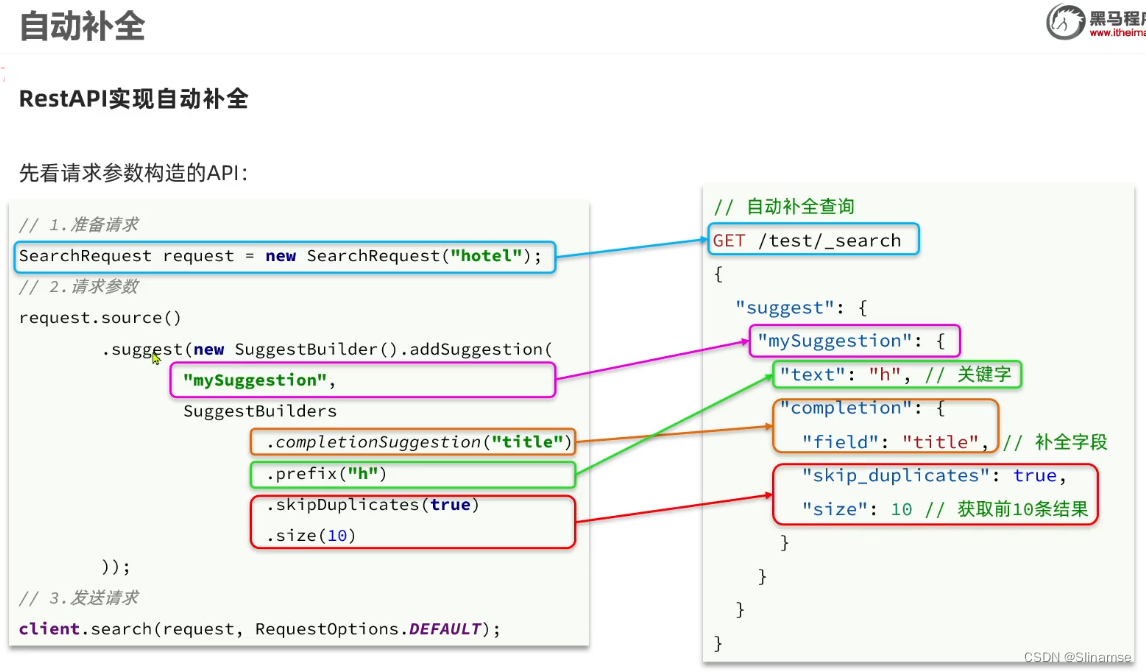

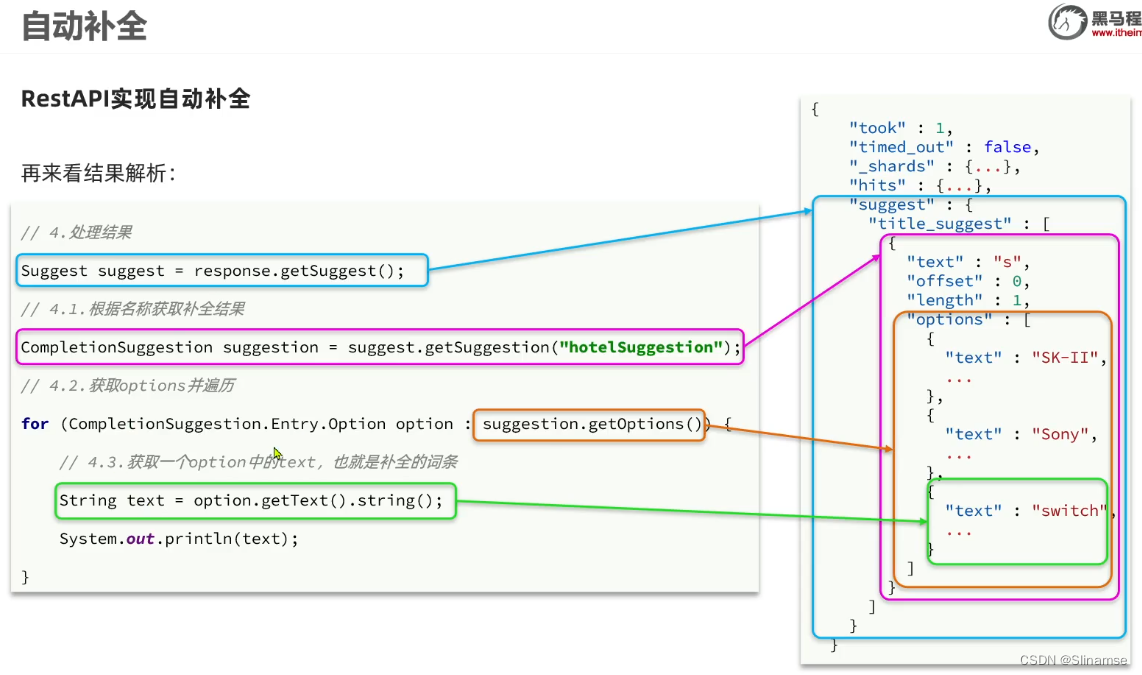

    @GetMapping("/suggestion")
    public List<String> suggestion(String key) throws IOException {
        System.out.println("suggestion endpoint called: " + key);
        List<String> list = hotelService.suggestion(key);
        return list;
    }

Service

    @Override
    public List<String> suggestion(String key) throws IOException {
        // 1. Create the request
        SearchRequest request = new SearchRequest("hotel");
        // 2. Build the DSL
        request.source().suggest(new SuggestBuilder().addSuggestion(
                "hotelSuggestion",
                SuggestBuilders.completionSuggestion("suggestion")
                        .prefix(key)
                        .skipDuplicates(true)
                        .size(100)));
        // 3. Send the request
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 4. Parse the response
        Suggest suggest = response.getSuggest();
        // Get the completion result by name
        CompletionSuggestion suggestion = suggest.getSuggestion("hotelSuggestion");
        // Iterate over the options
        List<String> list = new ArrayList<>();
        for (CompletionSuggestion.Entry.Option option : suggestion.getOptions()) {
            // The text of an option is the completed term
            String text = option.getText().string();
            list.add(text);
        }
        return list;
    }

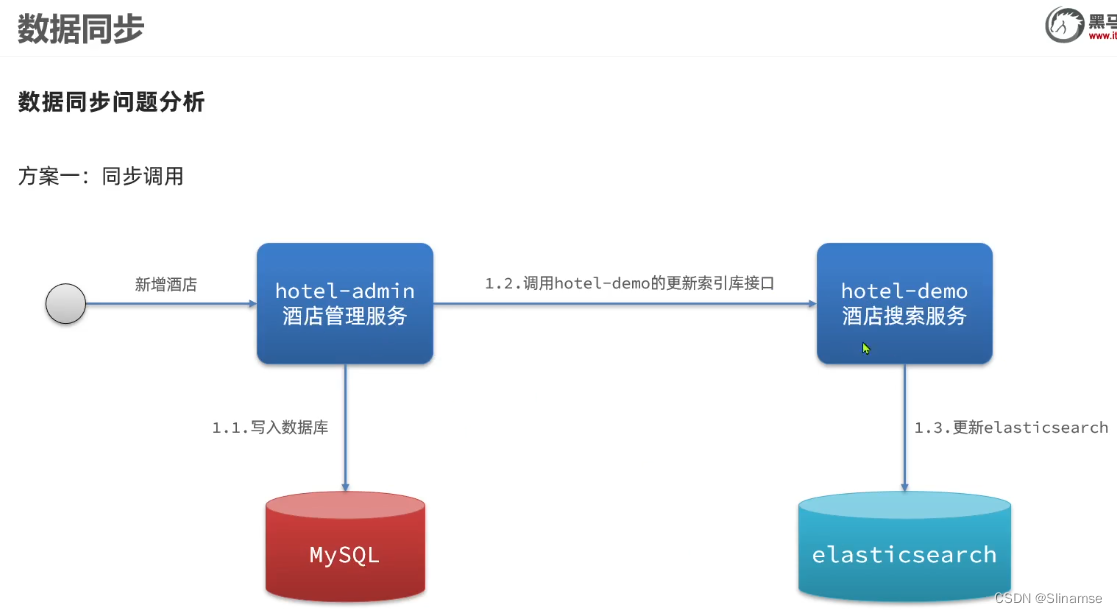

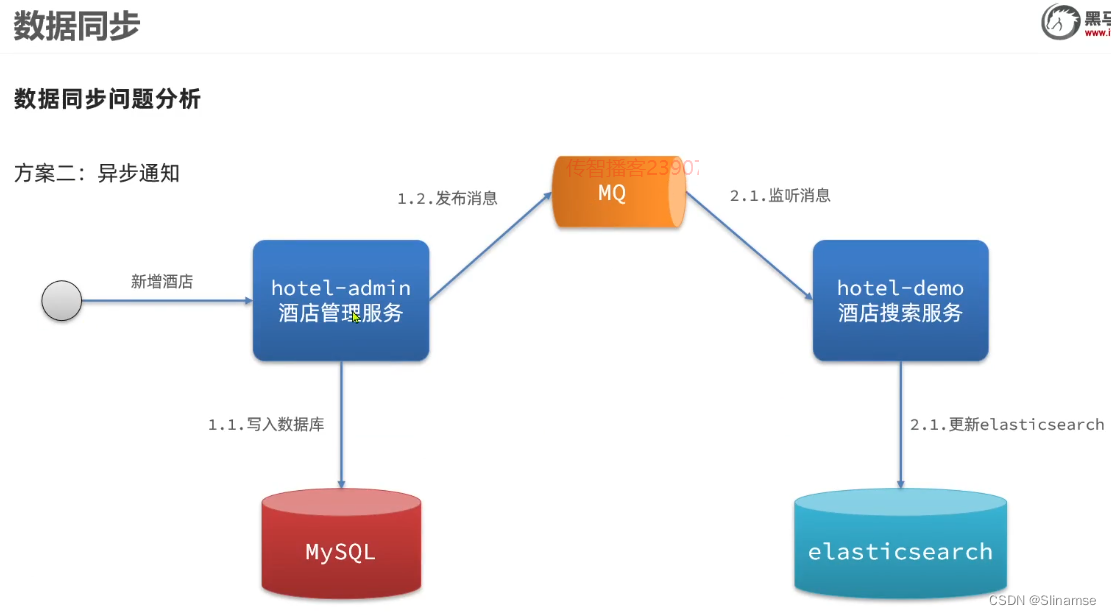

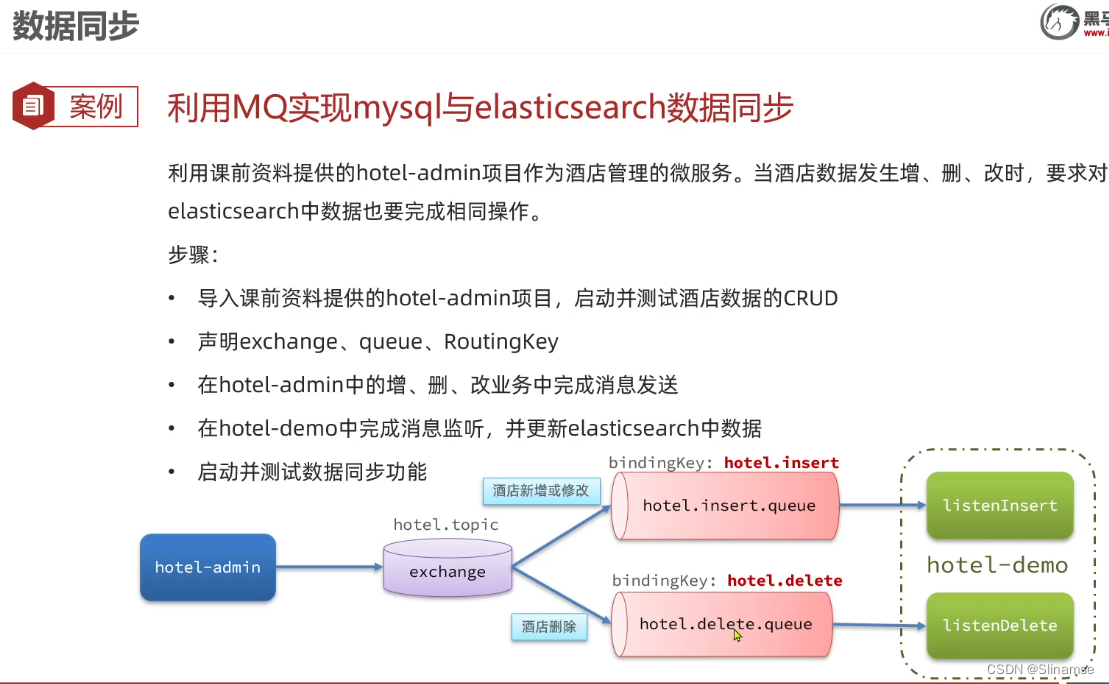

Sending MQ messages

    @PostMapping
    public void saveHotel(@RequestBody Hotel hotel) {
        hotelService.save(hotel);
        rabbitTemplate.convertAndSend(MqConstants.HOTEL_EXCHANGE, MqConstants.HOTEL_INSERT_KEY, hotel.getId());
    }

    @PutMapping()
    public void updateById(@RequestBody Hotel hotel) {
        if (hotel.getId() == null) {
            throw new InvalidParameterException("id must not be null");
        }
        hotelService.updateById(hotel);
        rabbitTemplate.convertAndSend(MqConstants.HOTEL_EXCHANGE, MqConstants.HOTEL_INSERT_KEY, hotel.getId());
    }

    @DeleteMapping("/{id}")
    public void deleteById(@PathVariable("id") Long id) {
        hotelService.removeById(id);
        rabbitTemplate.convertAndSend(MqConstants.HOTEL_EXCHANGE, MqConstants.HOTEL_DELETE_KEY, id);
    }

Listening for MQ messages

    public class MqConstants {
        /* Exchange */
        public final static String HOTEL_EXCHANGE = "hotel.topic";
        /* Queue for insert and update events */
        public final static String HOTEL_INSERT_QUEUE = "hotel.insert.queue";
        /* Queue for delete events */
        public final static String HOTEL_DELETE_QUEUE = "hotel.delete.queue";
        /* Routing key for insert or update */
        public final static String HOTEL_INSERT_KEY = "hotel.insert";
        /* Routing key for delete */
        public final static String HOTEL_DELETE_KEY = "hotel.delete";
    }

    @Configuration
    public class MqConfig {

        // Declare the exchange
        @Bean
        public TopicExchange topicExchange() {
            return new TopicExchange(MqConstants.HOTEL_EXCHANGE, true, false);
        }

        // Declare the queues
        @Bean
        public Queue insertQueue() {
            return new Queue(MqConstants.HOTEL_INSERT_QUEUE, true);
        }

        @Bean
        public Queue deleteQueue() {
            return new Queue(MqConstants.HOTEL_DELETE_QUEUE, true);
        }

        // Bind the queues to the exchange
        @Bean
        public Binding insertQueueBanding() {
            return BindingBuilder.bind(insertQueue()).to(topicExchange()).with(MqConstants.HOTEL_INSERT_KEY);
        }

        @Bean
        public Binding deleteQueueBanding() {
            return BindingBuilder.bind(deleteQueue()).to(topicExchange()).with(MqConstants.HOTEL_DELETE_KEY);
        }
    }
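The notes show the sending side and the queue declarations but not the consumer. As a rough sketch of what a consumer of the two queues might do, the class below keeps the search index in step with the database using in-memory maps as stand-ins for both. Everything here is hypothetical (class name, method names, the maps); in a Spring AMQP application the two handlers would typically be `@RabbitListener` methods that call Elasticsearch instead of a map.

```java
import java.util.HashMap;
import java.util.Map;

class HotelSyncSketch {
    // Stand-in for the relational database (id -> hotel name).
    final Map<Long, String> database = new HashMap<>();
    // Stand-in for the hotel index in Elasticsearch.
    final Map<Long, String> index = new HashMap<>();

    // Handler for messages with routing key hotel.insert.
    // Inserts and updates share this key, so the handler re-reads the row
    // and overwrites whatever the index currently holds for that id.
    void onInsertOrUpdate(Long id) {
        String hotel = database.get(id);
        if (hotel != null) {
            index.put(id, hotel);
        }
    }

    // Handler for messages with routing key hotel.delete.
    void onDelete(Long id) {
        index.remove(id);
    }

    public static void main(String[] args) {
        HotelSyncSketch sync = new HotelSyncSketch();
        sync.database.put(1L, "Hotel One");
        sync.onInsertOrUpdate(1L);
        System.out.println(sync.index); // prints {1=Hotel One}
        sync.database.remove(1L);
        sync.onDelete(1L);
        System.out.println(sync.index); // prints {}
    }
}
```

Sending only the id in the message, as the controller does, keeps messages small and lets the consumer always index the latest state of the row.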

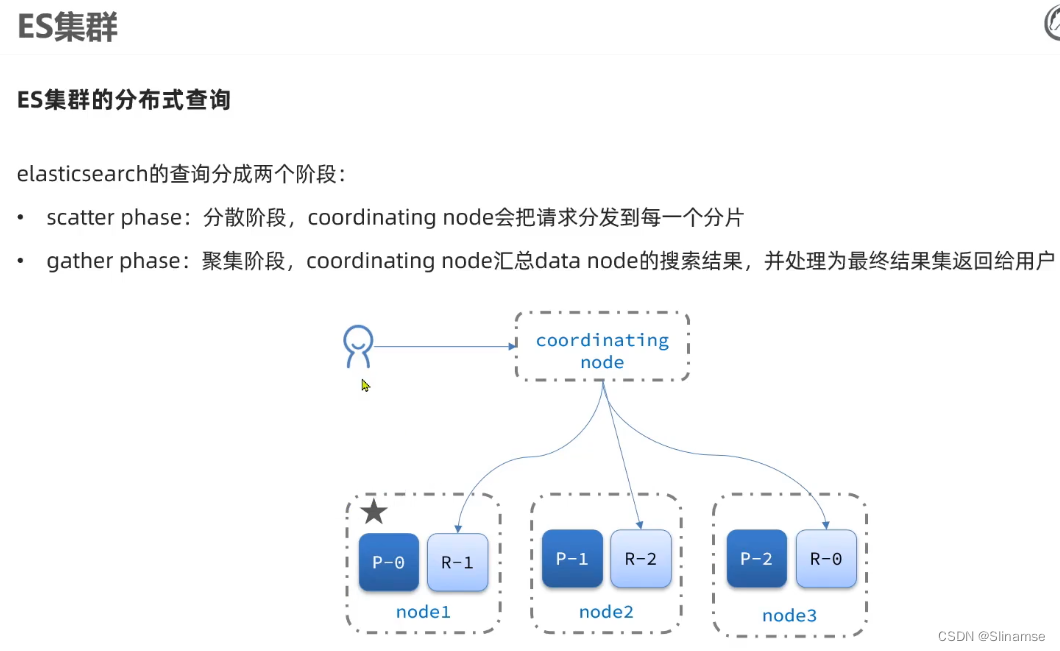

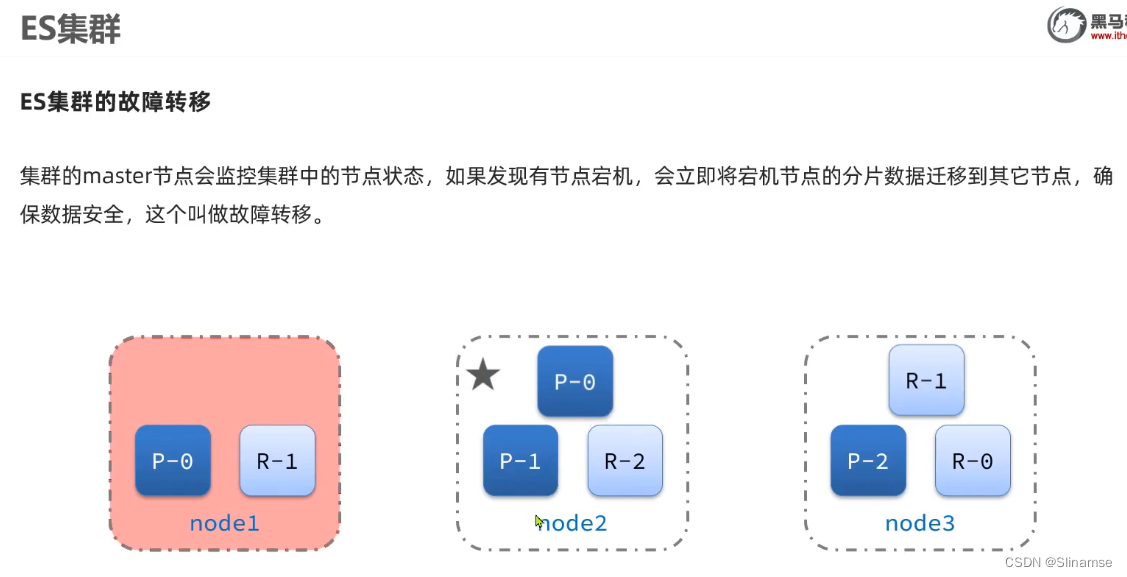

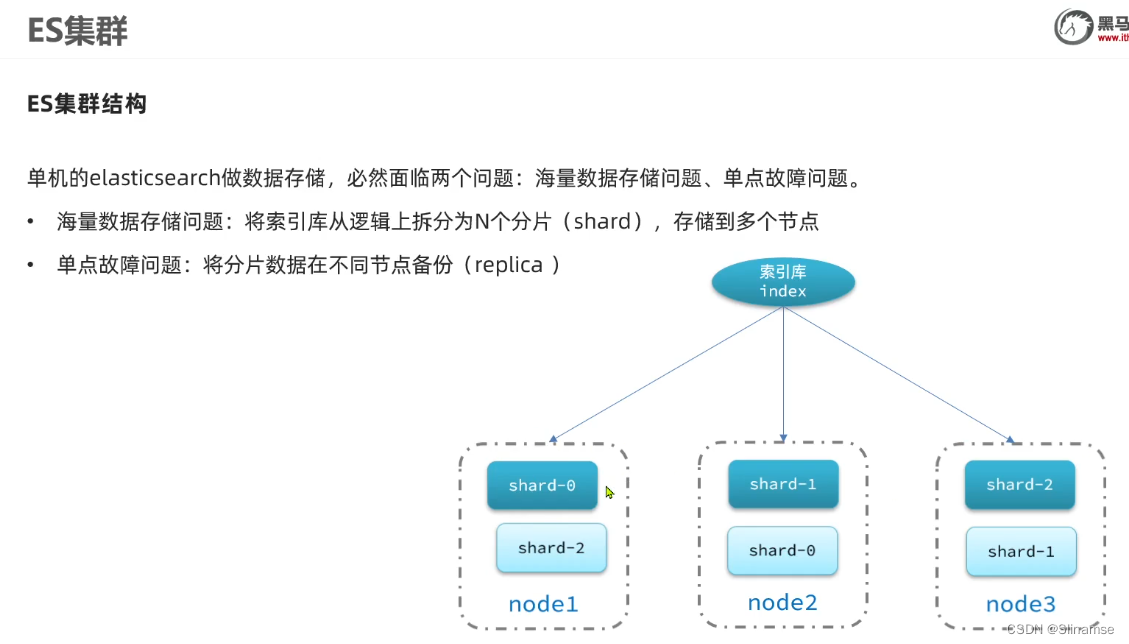

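Deploying an ES cluster

We will run multiple ES instances as docker containers on a single machine to simulate an ES cluster. In production, however, deploy only one ES instance per server node.

An ES cluster can be deployed directly with docker-compose, but this requires your Linux virtual machine to have at least 4 GB of memory.

Creating the ES cluster

First write a docker-compose file with the following content: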

    version: '2.2'
    services:
      es01:
        image: elasticsearch:7.12.1
        container_name: es01
        environment:
          - node.name=es01
          - cluster.name=es-docker-cluster
          - discovery.seed_hosts=es02,es03
          - cluster.initial_master_nodes=es01,es02,es03
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
        volumes:
          - data01:/usr/share/elasticsearch/data
        ports:
          - 9200:9200
        networks:
          - elastic
      es02:
        image: elasticsearch:7.12.1
        container_name: es02
        environment:
          - node.name=es02
          - cluster.name=es-docker-cluster
          - discovery.seed_hosts=es01,es03
          - cluster.initial_master_nodes=es01,es02,es03
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
        volumes:
          - data02:/usr/share/elasticsearch/data
        ports:
          - 9201:9200
        networks:
          - elastic
      es03:
        image: elasticsearch:7.12.1
        container_name: es03
        environment:
          - node.name=es03
          - cluster.name=es-docker-cluster
          - discovery.seed_hosts=es01,es02
          - cluster.initial_master_nodes=es01,es02,es03
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
        volumes:
          - data03:/usr/share/elasticsearch/data
        networks:
          - elastic
        ports:
          - 9202:9200
    volumes:
      data01:
        driver: local
      data02:
        driver: local
      data03:
        driver: local
    networks:
      elastic:
        driver: bridge

Running ES requires changing a Linux kernel setting. Edit the /etc/sysctl.conf file:

    vi /etc/sysctl.conf

Add the following line:

    vm.max_map_count=262144

Then run the following command to apply the setting:

    sysctl -p

Start the cluster with docker-compose:

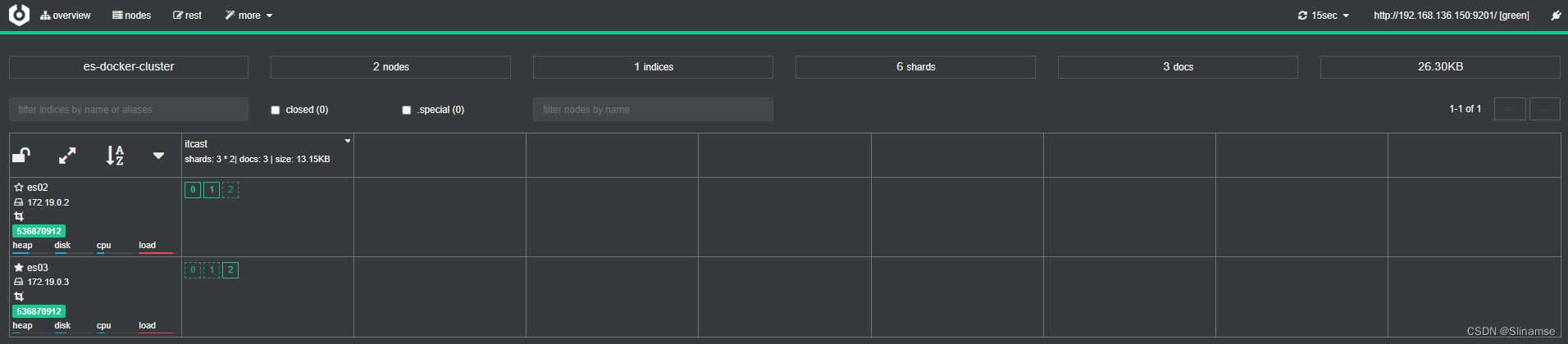

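    docker-compose up -d

4.2. Monitoring cluster status

Kibana can monitor an ES cluster, but newer versions depend on the x-pack feature of ES, and the configuration is fairly complex.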

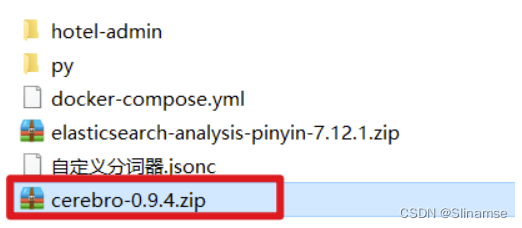

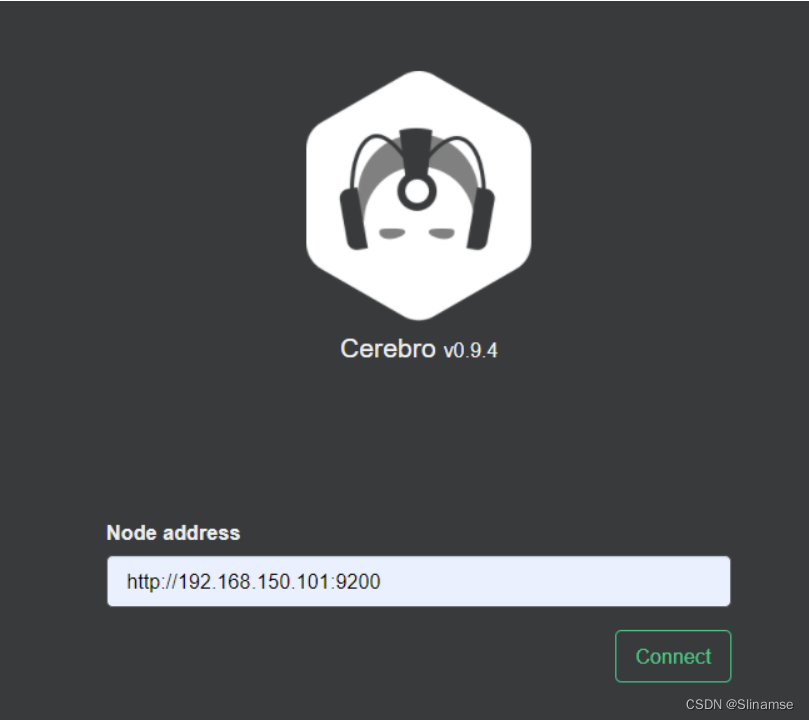

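We recommend cerebro for monitoring the ES cluster status. Official site: GitHub, lmenezes/cerebro

The course materials already include the installation package:

Just unpack it and it is ready to use.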

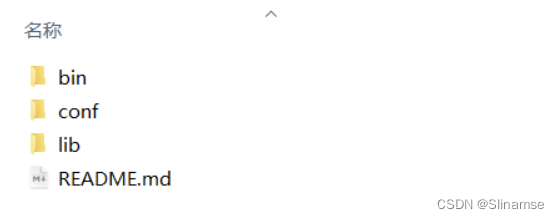

The unpacked directory looks like this:

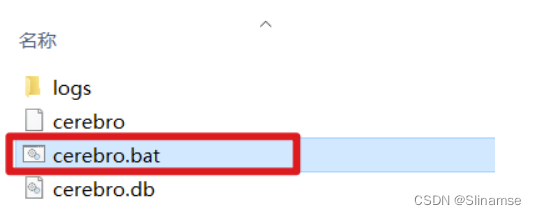

Go into the bin directory:

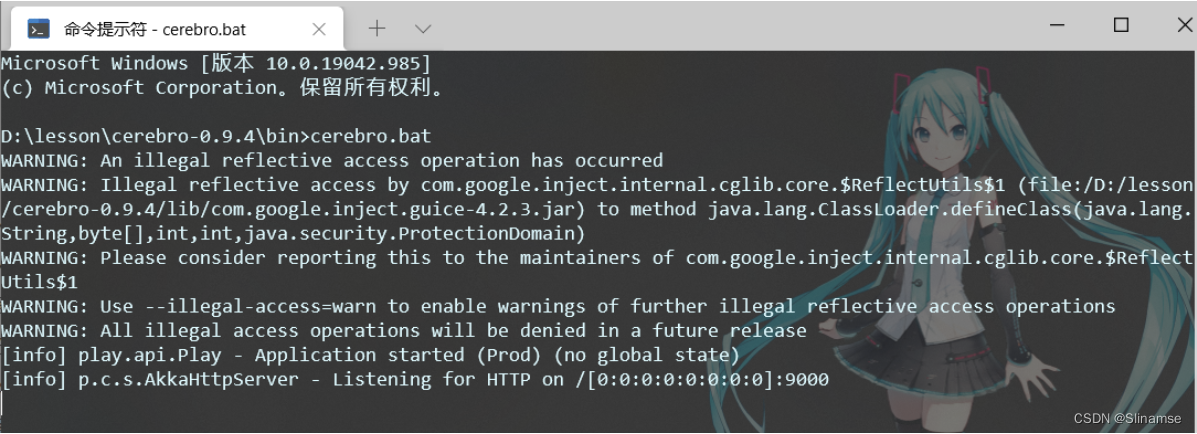

Double-click the cerebro.bat file to start the service.

Open http://localhost:9000 to reach the management UI:

Enter the address and port of any node of your Elasticsearch cluster and click connect:

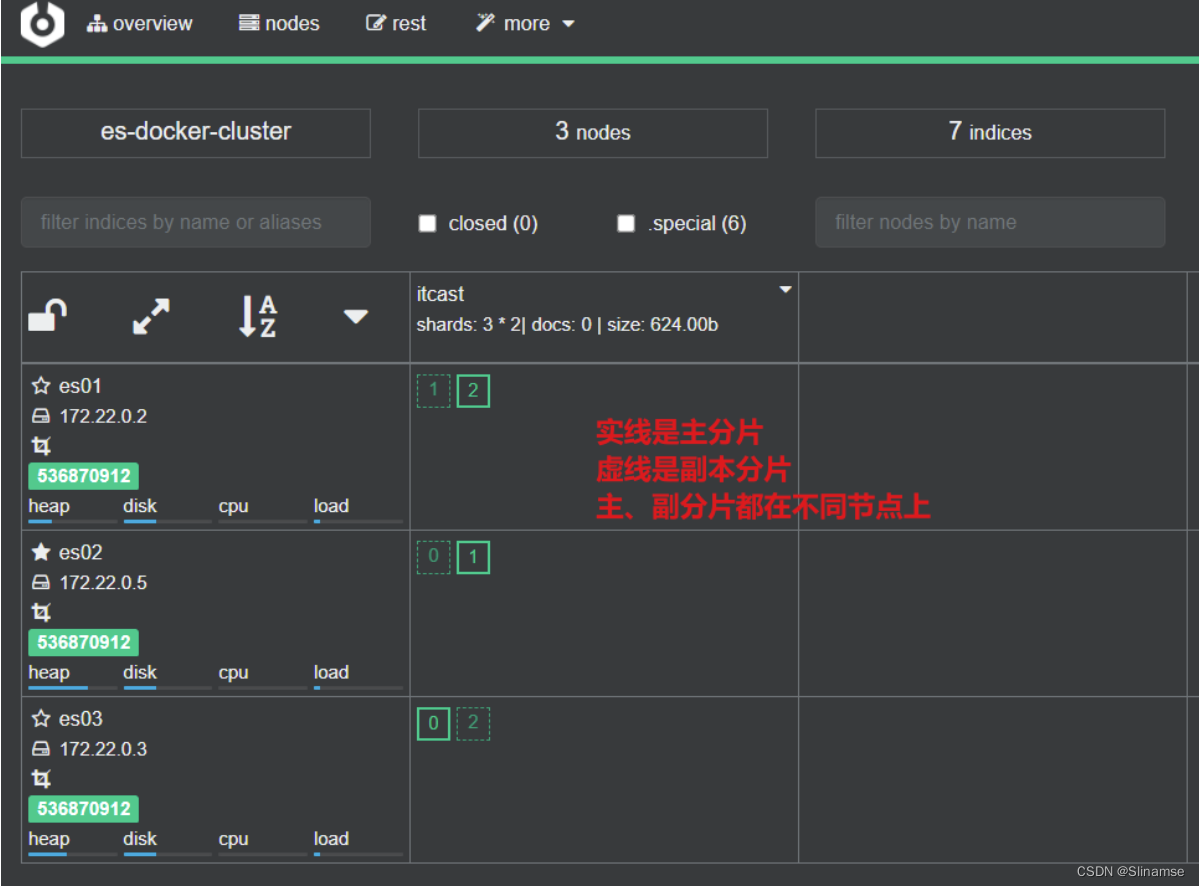

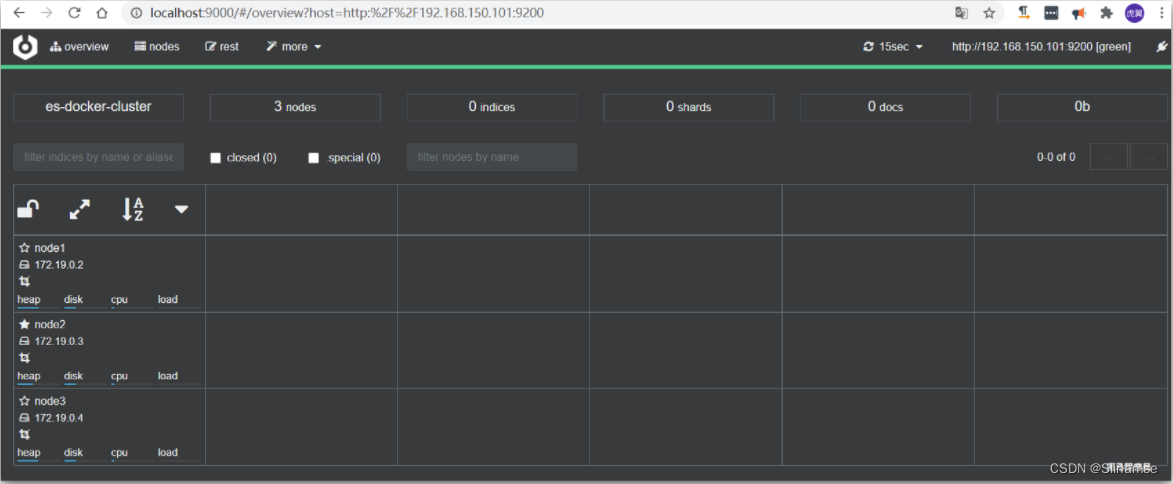

A green bar means the cluster status is green (healthy).

创建索引库

1)利用kibana的DevTools创建索引库

在DevTools中输入指令:

PUT /itcast

{"settings": {"number_of_shards": 3, // 分片数量"number_of_replicas": 1 // 副本数量},"mappings": {"properties": {// mapping映射定义 ...}}

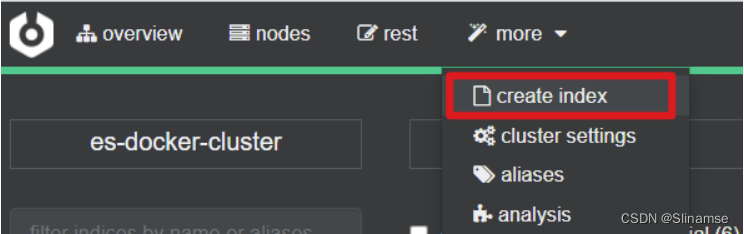

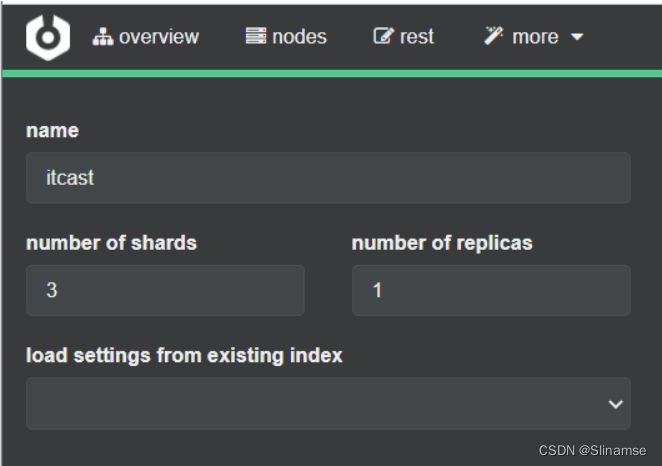

}2)利用cerebro创建索引库

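cerebro can also be used to create an index:

Fill in the index information:

Click the create button in the lower right corner:

Viewing the shard layout

Go back to the home page to see how the shards of the index are distributed: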
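To make the idea of an index split into 3 shards concrete, the sketch below assigns a document to a shard by hashing its routing value (the document id by default) and taking the remainder modulo the number of primary shards. This is a simplified picture: Elasticsearch uses its own hash function for routing, while the sketch uses `String.hashCode()` as a stand-in, so the shard numbers it prints will not match a real cluster.

```java
class ShardRoutingSketch {
    // Simplified routing rule: shard = hash(routing) mod number_of_shards.
    // String.hashCode() is an assumption standing in for Elasticsearch's hash.
    static int shardFor(String routing, int numberOfShards) {
        return Math.floorMod(routing.hashCode(), numberOfShards);
    }

    public static void main(String[] args) {
        int numberOfShards = 3; // matches number_of_shards in the PUT /itcast example
        for (int id = 1; id <= 5; id++) {
            System.out.println("doc " + id + " -> shard " + shardFor(String.valueOf(id), numberOfShards));
        }
    }
}
```

Because the shard is derived from the number of primary shards, that number is fixed when the index is created; changing it would send existing ids to different shards.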