Android ART虚拟机 Space类体系

前言

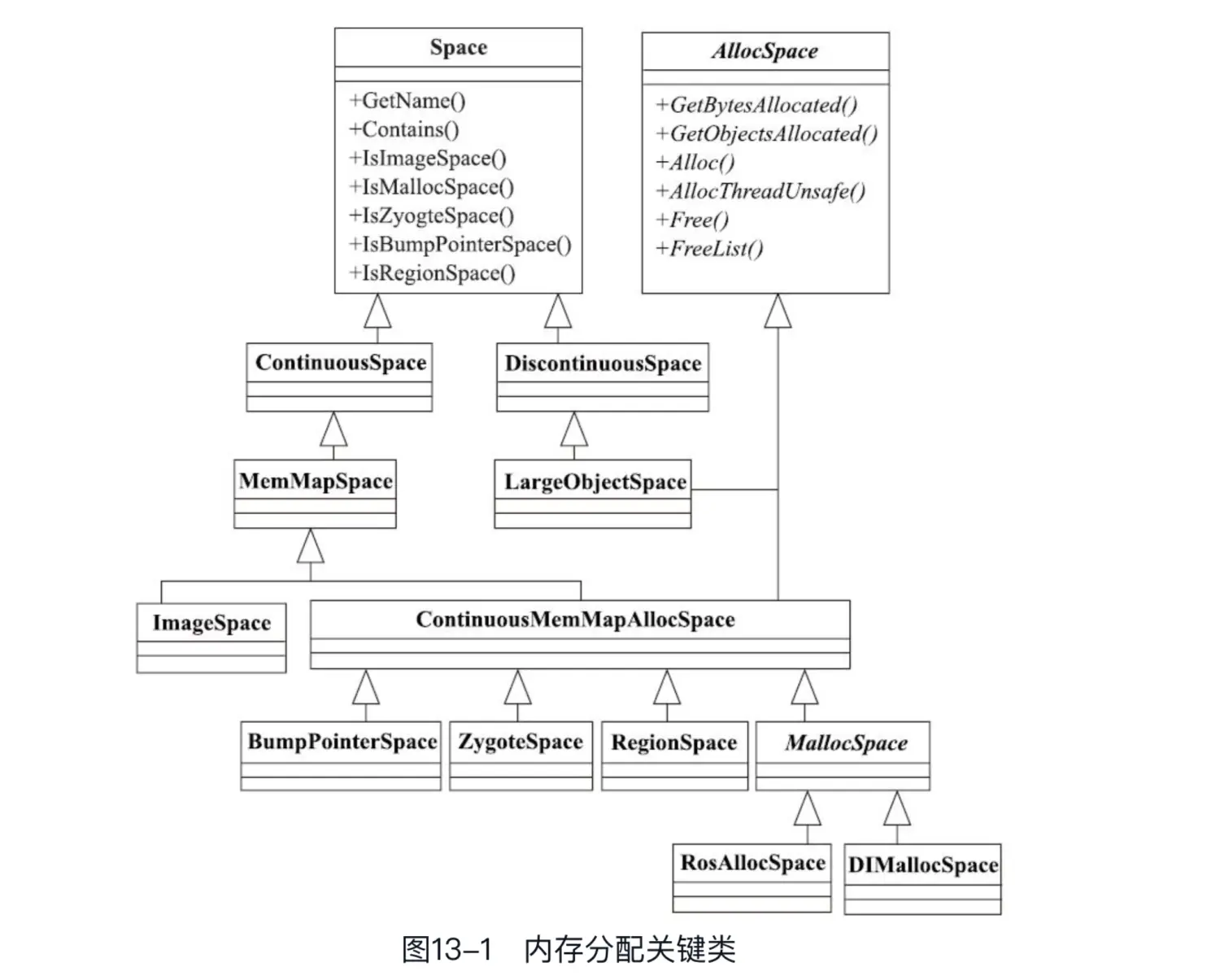

在ART虚拟机实现中,内存分配和释放的算法是封装在不同的Space中来完成的。而外部使用者只能借助Space及派生类的接口来完成内存的分配与释放。通过阅读这些Space的实现,可以看出ART虚拟机的一个重要的特点就是大量使用映射内存,相较于Dalvik虚拟机来说在内存分配上管理的更细致。

ART虚拟机提供了多种内存分配手段,它们分别由LargeObjectSpace、BumpPointerSpace、ZygoteSpace、RegionSpace、DlMallocSpace和RosAllocSpace六个类来实现,虚拟机内部会根据配置情况来使用不同的内存分配类,本文来仔细。

概览

art/runtime/gc/space/space.h

- 第一层包含Space和AllocSpace两个类。

Space代表一块内存空间,而纯虚类AllocSpace则代表一块可用于内存分配的空间。

AllocSpace提供了和内存分配及释放有关的虚函数,比如Alloc、Free等。 - 第二层包含ContinuousSpace和DiscontinuousSpace两个类。它们均派生自Space类。

ContinuousSpace表示一块地址连续的内存空间,

DiscontinuousSpace则表示一块地址不连续的空间。 - 第三层包含MemMapSpace和LargeObjectSpace两个类。

MemMapSpace派生自ContinuousSpace,它表示内存空间里的内存是通过内存映射技术来提供的。

LargeObjectSpace同时派生自DiscontinuousSpace和AllocSpace。该空间里的内存资源可以分配给外部使用。在ART虚拟机中,如果一个Java对象(注意,该对象的类型必须为Java String或基础数据的数组类型,比如int数组)所需内存超过3个内存页时,将使用LargeObjectSpace来提供内存资源。 - 第四层包含ImageSpace和ContinuousMemMapAllocSpace两个类。

ImageSpace用于.art文件的加载。一个ImageSpace创建成功后,其对应的.art文件里所包含的mirror Object对象就算创建完毕并加载到内存里了。

ContinuousMemMapAllocSpace代表一个可对外提供连续内存资源的空间,其内存资源由内存映射技术提供。 - 第五层包含BumpPointerSpace、ZygoteSpace、RegionSpace和MallocSpace四个类。

其中只有MallocSpace是虚类,而其他三个类可直接用于分配内存资源,但所使用的内存分配算法各不相同。 - 第六层包含DlMallocSpace和RosAllocSpace两个类,它们派生自MallocSpace。这两个类也用于内存分配,只不过使用了不同的内存分配算法。

class Space;

class AllocSpace;class ContinuousSpace : public Space {...}

class MemMapSpace : public ContinuousSpace {...}

class ImageSpace : public MemMapSpace {...}class ContinuousMemMapAllocSpace : public MemMapSpace, public AllocSpace {...}

class ZygoteSpace FINAL : public ContinuousMemMapAllocSpace {...}

class BumpPointerSpace FINAL : public ContinuousMemMapAllocSpace {...}

class RegionSpace FINAL : public ContinuousMemMapAllocSpace {...}

class MallocSpace : public ContinuousMemMapAllocSpace {...}

class DlMallocSpace : public MallocSpace {...}

class RosAllocSpace : public MallocSpace {...}class DiscontinuousSpace : public Space {...}

class LargeObjectSpace : public DiscontinuousSpace, public AllocSpace {...}enum SpaceType {kSpaceTypeImageSpace,kSpaceTypeMallocSpace,kSpaceTypeZygoteSpace,kSpaceTypeBumpPointerSpace,kSpaceTypeLargeObjectSpace,kSpaceTypeRegionSpace,

};enum GcRetentionPolicy {// Objects are retained forever with this policy for a space.kGcRetentionPolicyNeverCollect,// Every GC cycle will attempt to collect objects in this space.kGcRetentionPolicyAlwaysCollect,// Objects will be considered for collection only in "full" GC cycles, ie faster partial collections won't scan these areas such as the Zygote.kGcRetentionPolicyFullCollect,

};// A space contains memory allocated for managed objects.

class Space {public:// The policy of when objects are collected associated with this space.GcRetentionPolicy GetGcRetentionPolicy() const {return gc_retention_policy_;}// Is the given object contained within this space?virtual bool Contains(const mirror::Object* obj) const = 0;// The kind of space this: image, alloc, zygote, large object.virtual SpaceType GetType() const = 0;// Returns true if objects in the space are movable.virtual bool CanMoveObjects() const = 0;virtual ~Space() {}protected:Space(const std::string& name, GcRetentionPolicy gc_retention_policy);void SetGcRetentionPolicy(GcRetentionPolicy gc_retention_policy) {gc_retention_policy_ = gc_retention_policy;}// Name of the space that may vary due to the Zygote fork.std::string name_;protected:GcRetentionPolicy gc_retention_policy_;

}// AllocSpace interface.

class AllocSpace {public:// Number of bytes currently allocated.virtual uint64_t GetBytesAllocated() = 0;// Number of objects currently allocated.virtual uint64_t GetObjectsAllocated() = 0;// Allocate num_bytes without allowing growth. If the allocation// succeeds, the output parameter bytes_allocated will be set to the// actually allocated bytes which is >= num_bytes.// Alloc can be called from multiple threads at the same time and must be thread-safe.//// bytes_tl_bulk_allocated - bytes allocated in bulk ahead of time for a thread local allocation,// if applicable. It can be// 1) equal to bytes_allocated if it's not a thread local allocation,// 2) greater than bytes_allocated if it's a thread local// allocation that required a new buffer, or// 3) zero if it's a thread local allocation in an existing// buffer.// This is what is to be added to Heap::num_bytes_allocated_.virtual mirror::Object* Alloc(Thread* self, size_t num_bytes, size_t* bytes_allocated,size_t* usable_size, size_t* bytes_tl_bulk_allocated) = 0;// Thread-unsafe allocation for when mutators are suspended, used by the semispace collector.virtual mirror::Object* AllocThreadUnsafe(Thread* self, size_t num_bytes, size_t* bytes_allocated,size_t* usable_size,size_t* bytes_tl_bulk_allocated)REQUIRES(Locks::mutator_lock_) {return Alloc(self, num_bytes, bytes_allocated, usable_size, bytes_tl_bulk_allocated);}// Return the storage space required by obj.virtual size_t AllocationSize(mirror::Object* obj, size_t* usable_size) = 0;// Returns how many bytes were freed.virtual size_t Free(Thread* self, mirror::Object* ptr) = 0;// Returns how many bytes were freed.virtual size_t FreeList(Thread* self, size_t num_ptrs, mirror::Object** ptrs) = 0;// Revoke any sort of thread-local buffers that are used to speed up allocations for the given// thread, if the alloc space implementation uses any.// Returns the total free bytes in the revoked thread local runs that's to be subtracted// from Heap::num_bytes_allocated_ or zero if unnecessary.virtual size_t RevokeThreadLocalBuffers(Thread* thread) = 0;// Revoke any sort of thread-local buffers that are used to speed up allocations for all the// threads, if the alloc space implementation uses any.// Returns the total free bytes in the revoked thread local runs that's to be subtracted// from Heap::num_bytes_allocated_ or zero if unnecessary.virtual size_t RevokeAllThreadLocalBuffers() = 0;virtual void LogFragmentationAllocFailure(std::ostream& os, size_t failed_alloc_bytes) = 0;protected:struct SweepCallbackContext {SweepCallbackContext(bool swap_bitmaps, space::Space* space);const bool swap_bitmaps;space::Space* const space;Thread* const self;collector::ObjectBytePair freed;};AllocSpace() {}virtual ~AllocSpace() {}

};父类

ContinuousSpace类

// Continuous spaces have bitmaps, and an address range. Although not required, objects within

// continuous spaces can be marked in the card table.

class ContinuousSpace : public Space {private:// The beginning of the storage for fast access.// Address at which the space begins.uint8_t* begin_;// Current end of the space.// Current address at which the space ends, which may vary as the space is filled.Atomic<uint8_t*> end_;// Limit of the space.// The end of the address range covered by the space.uint8_t* limit_;public:// Current size of spacesize_t Size() const {return End() - Begin();}// Maximum which the mapped space can grow to.virtual size_t Capacity() const {return Limit() - Begin();}// pure virtual functionsvirtual accounting::ContinuousSpaceBitmap* GetLiveBitmap() const = 0;virtual accounting::ContinuousSpaceBitmap* GetMarkBitmap() const = 0;

}

DiscontinuousSpace类

// Required object alignment

static constexpr size_t kObjectAlignment = 8;

static constexpr size_t kLargeObjectAlignment = kPageSize;typedef SpaceBitmap<kObjectAlignment> ContinuousSpaceBitmap;

typedef SpaceBitmap<kLargeObjectAlignment> LargeObjectBitmap;// A space where objects may be allocated higgledy-piggledy throughout virtual memory.

// Currently the card table can't cover these objects and so the write barrier shouldn't be triggered. // This is suitable for use for large primitive arrays.

class DiscontinuousSpace : public Space {public:accounting::LargeObjectBitmap* GetLiveBitmap() const {return live_bitmap_.get();}accounting::LargeObjectBitmap* GetMarkBitmap() const {return mark_bitmap_.get();}virtual bool IsDiscontinuousSpace() const OVERRIDE {return true;}virtual ~DiscontinuousSpace() {}protected:DiscontinuousSpace(const std::string& name, GcRetentionPolicy gc_retention_policy);std::unique_ptr<accounting::LargeObjectBitmap> live_bitmap_;std::unique_ptr<accounting::LargeObjectBitmap> mark_bitmap_;private:DISALLOW_IMPLICIT_CONSTRUCTORS(DiscontinuousSpace);

};// 该父类初始化时即初始化了live_bitmap_、mark_bitmap_

DiscontinuousSpace::DiscontinuousSpace(const std::string& name, GcRetentionPolicy gc_retention_policy) : Space(name, gc_retention_policy) {const size_t capacity = static_cast<size_t>(std::numeric_limits<uint32_t>::max());live_bitmap_.reset(accounting::LargeObjectBitmap::Create("large live objects", nullptr, capacity));mark_bitmap_.reset(accounting::LargeObjectBitmap::Create("large marked objects", nullptr, capacity));

}

MemMapSpace

内部持有mem_map_

class MemMapSpace : public ContinuousSpace {protected:// Underlying storage of the spacestd::unique_ptr<MemMap> mem_map_;

};

ContinuousMemMapAllocSpace

针对ContinuousMemMapAllocSpace中的三个位图成员变量,其子类中:

- ZygoteSpace会设置live_bitmap_和mark_bitmap_。注意,这两个成员变量的值由外部传入,并非由ZygoteSpace自己创建。

- BumpPointerSpace和RegionSpace不设置这三个成员变量。

- DlMallocSpace和RosAllocSpace在它们的基类MallocSpace构造函数中初始化live_bitmap_和mark_bitmap_成员变量。

// Required object alignment

static constexpr size_t kObjectAlignment = 8;

static constexpr size_t kLargeObjectAlignment = kPageSize;typedef SpaceBitmap<kObjectAlignment> ContinuousSpaceBitmap;

typedef SpaceBitmap<kLargeObjectAlignment> LargeObjectBitmap;// Used by the heap compaction interface to enable copying from one type of alloc space to another.

class ContinuousMemMapAllocSpace : public MemMapSpace, public AllocSpace {protected:std::unique_ptr<accounting::ContinuousSpaceBitmap> live_bitmap_;std::unique_ptr<accounting::ContinuousSpaceBitmap> mark_bitmap_;std::unique_ptr<accounting::ContinuousSpaceBitmap> temp_bitmap_;

}

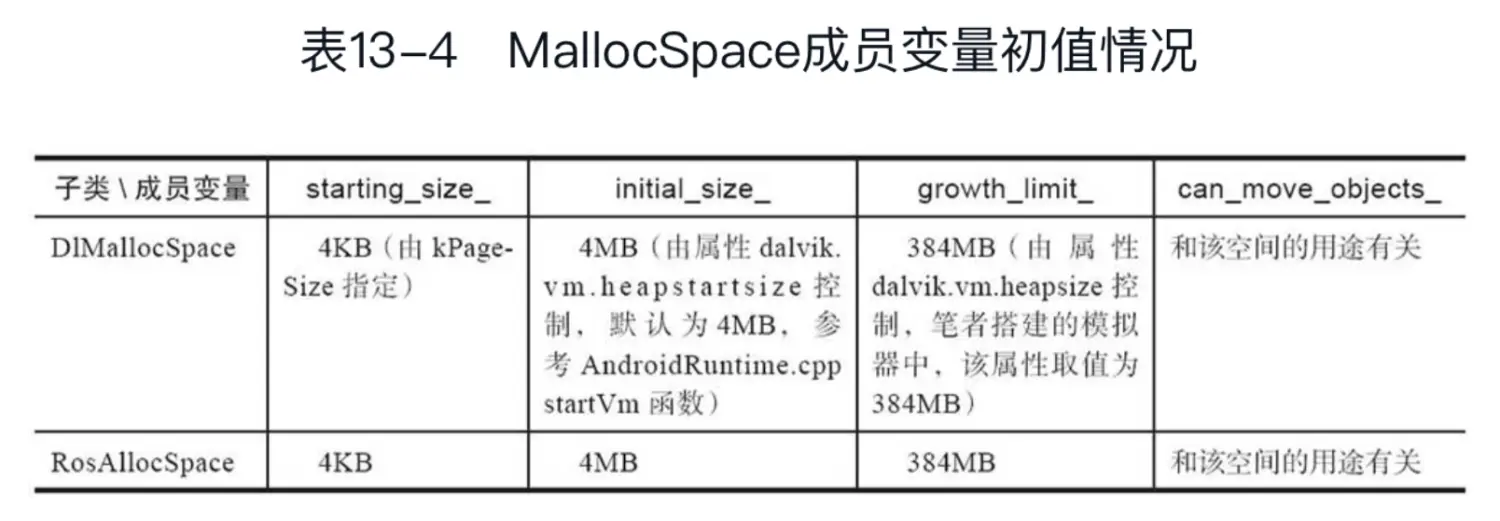

MallocSpace

在构造函数中初始化live_bitmap_和mark_bitmap_成员变量。

// A common parent of DlMallocSpace and RosAllocSpace.

class MallocSpace : public ContinuousMemMapAllocSpace {protected:// The growth_limit_ is used as the capacity of the alloc_space_ 内存分配的最高水位线,最大可分配内存不允许超过growth_limit_size_t growth_limit_;// True if objects in the space are movable. 和gc相关bool can_move_objects_;// Starting and initial sized, used when you reset the space.const size_t starting_size_;const size_t initial_size_;

}// 全局静态变量

size_t MallocSpace::bitmap_index_ = 0; MallocSpace::MallocSpace(const std::string& name, MemMap* mem_map, uint8_t* begin, uint8_t* end, uint8_t* limit, size_t growth_limit, bool create_bitmaps, bool can_move_objects, size_t starting_size, size_t initial_size): ContinuousMemMapAllocSpace(name, mem_map, begin, end, limit, kGcRetentionPolicyAlwaysCollect), recent_free_pos_(0), lock_("allocation space lock", kAllocSpaceLock), growth_limit_(growth_limit), can_move_objects_(can_move_objects), starting_size_(starting_size), initial_size_(initial_size) {if (create_bitmaps) {size_t bitmap_index = bitmap_index_++;static const uintptr_t kGcCardSize = static_cast<uintptr_t>(accounting::CardTable::kCardSize);live_bitmap_.reset(accounting::ContinuousSpaceBitmap::Create(StringPrintf("allocspace %s live-bitmap %d", name.c_str(), static_cast<int>(bitmap_index)),Begin(), NonGrowthLimitCapacity()));mark_bitmap_.reset(accounting::ContinuousSpaceBitmap::Create(StringPrintf("allocspace %s mark-bitmap %d", name.c_str(), static_cast<int>(bitmap_index)),Begin(), NonGrowthLimitCapacity()));}for (auto& freed : recent_freed_objects_) {freed.first = nullptr;freed.second = nullptr;}

}

具体实现类

ZygoteSpace

An zygote space is a space which you cannot allocate into or free from.

ZygoteSpace虽然继承了AllocSpace,但它并没有真正提供内存分配和回收的功能。

art/runtime/gc/space/zygote_space.h

art/runtime/gc/space/zygote_space.cc

class ZygoteSpace FINAL : public ContinuousMemMapAllocSpace {...}ZygoteSpace* ZygoteSpace::Create(const std::string& name, MemMap* mem_map,accounting::ContinuousSpaceBitmap* live_bitmap,accounting::ContinuousSpaceBitmap* mark_bitmap) {size_t objects_allocated = 0;CountObjectsAllocated visitor(&objects_allocated);ReaderMutexLock mu(Thread::Current(), *Locks::heap_bitmap_lock_);live_bitmap->VisitMarkedRange(reinterpret_cast<uintptr_t>(mem_map->Begin()),reinterpret_cast<uintptr_t>(mem_map->End()), visitor);ZygoteSpace* zygote_space = new ZygoteSpace(name, mem_map, objects_allocated);zygote_space->live_bitmap_.reset(live_bitmap);zygote_space->mark_bitmap_.reset(mark_bitmap);return zygote_space;

}ZygoteSpace::ZygoteSpace(const std::string& name, MemMap* mem_map, size_t objects_allocated): ContinuousMemMapAllocSpace(name, mem_map, mem_map->Begin(), mem_map->End(), mem_map->End(), kGcRetentionPolicyFullCollect),objects_allocated_(objects_allocated) {

}void ZygoteSpace::Clear() {UNIMPLEMENTED(FATAL);UNREACHABLE();

}

mirror::Object* ZygoteSpace::Alloc(Thread*, size_t, size_t*, size_t*, size_t*) {UNIMPLEMENTED(FATAL);UNREACHABLE();

}

size_t ZygoteSpace::AllocationSize(mirror::Object*, size_t*) {UNIMPLEMENTED(FATAL);UNREACHABLE();

}

size_t ZygoteSpace::Free(Thread*, mirror::Object*) {UNIMPLEMENTED(FATAL);UNREACHABLE();

}

size_t ZygoteSpace::FreeList(Thread*, size_t, mirror::Object**) {UNIMPLEMENTED(FATAL);UNREACHABLE();

}

BumpPointerSpace

-

BumpPointerSpace提供了一种极其简单的内存分配算法——顺序分配(英文叫Sequential Allocation或Linear Allocation)。即BumpPointerSpace内存分配逻辑就是第N次内存分配的起始位置为第N-1次内存分配的终点位置。所以,算法中只要有一个变量记住最后一次分配的终点位置即可,这个位置就叫Bump Pointer。

-

正因为BumpPointerSpace采用了如此简单的内存分配算法,所以它压根就不能释放某一次所分配的内存(和ZygoteSpace一样,Free等函数没有真正的实现),而只支持一次性释放所有已分配的内存(实现了AllocSpace的Clear函数,详情见下文代码分析)

-

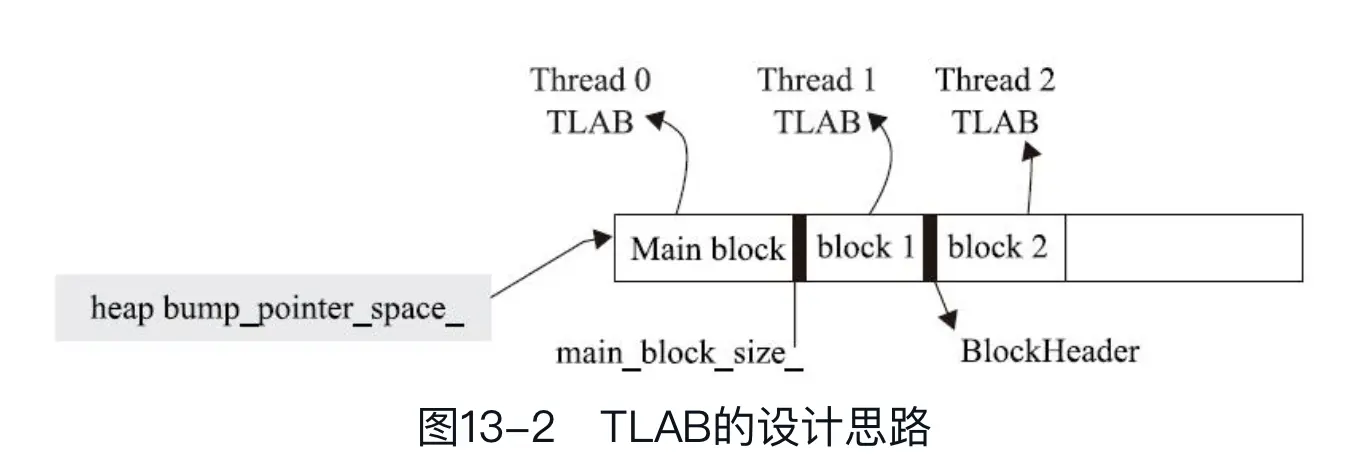

如此简单(换一种角度来说就是非常高效)的内存分配和释放算法使得BumpPointer-Space非常适合做线程本地内存分配——Thread Local Allocation Blocks,简写为TLAB,它代表一块专属某个线程的内存资源。

-

BumpPointerSpace提供了两种内存分配方法。

Alloc用于为某个mirror Object对象分配所需的内存。

AllocNewTlab:当ART虚拟机决定从调用线程的本地存储空间中分配内存时将调用此函数。 -

ART虚拟机里为每个Thread对象分配TLAB的方式:

Heap类中有一个名为bump_pointer_space_成员变量,它指向一个BumpPointerSpace对象。而这个BumpPointerSpace对应的内存空间可以被任意一个线程作为TLAB来使用。

第一个分配TLAB的线程将创建一个Main block。Main block位于内存资源的头部。其尾部位置由main_block_size_指明。

后续线程的TLAB都会有一个BlockHeader来描述。

art/runtime/gc/space/bump_pointer_space-inl.h

art/runtime/gc/space/bump_pointer_space.h

art/runtime/gc/space/bump_pointer_space.cc

class BumpPointerSpace FINAL : public ContinuousMemMapAllocSpace {...}BumpPointerSpace* BumpPointerSpace::Create(const std::string& name, size_t capacity, uint8_t* requested_begin) {capacity = RoundUp(capacity, kPageSize);std::string error_msg;std::unique_ptr<MemMap> mem_map(MemMap::MapAnonymous(name.c_str(), requested_begin, capacity, PROT_READ | PROT_WRITE, true, false, &error_msg));return new BumpPointerSpace(name, mem_map.release());

}BumpPointerSpace* BumpPointerSpace::CreateFromMemMap(const std::string& name, MemMap* mem_map) {return new BumpPointerSpace(name, mem_map);

}BumpPointerSpace::BumpPointerSpace(const std::string& name, uint8_t* begin, uint8_t* limit): ContinuousMemMapAllocSpace(name, nullptr, begin, begin, limit, kGcRetentionPolicyAlwaysCollect),growth_end_(limit),objects_allocated_(0), bytes_allocated_(0),block_lock_("Block lock"),main_block_size_(0),num_blocks_(0) {

}accounting::ContinuousSpaceBitmap* GetLiveBitmap() const OVERRIDE {return nullptr;

}accounting::ContinuousSpaceBitmap* GetMarkBitmap() const OVERRIDE {return nullptr;

}inline mirror::Object* BumpPointerSpace::Alloc(Thread*, size_t num_bytes, size_t* bytes_allocated, size_t* usable_size, size_t* bytes_tl_bulk_allocated) {// 按8字节向上对齐num_bytes = RoundUp(num_bytes, kAlignment);mirror::Object* ret = AllocNonvirtual(num_bytes);if (LIKELY(ret != nullptr)) {*bytes_allocated = num_bytes;if (usable_size != nullptr) {*usable_size = num_bytes;}*bytes_tl_bulk_allocated = num_bytes;}return ret;

}bool BumpPointerSpace::AllocNewTlab(Thread* self, size_t bytes) {MutexLock mu(Thread::Current(), block_lock_);// 先释放self线程原先的TLABRevokeThreadLocalBuffersLocked(self);uint8_t* start = AllocBlock(bytes);if (start == nullptr) {return false;}// 设置线程的TLABself->SetTlab(start, start + bytes);return true;

}size_t BumpPointerSpace::Free(Thread*, mirror::Object*) OVERRIDE {//直接返回0,说明BumpPointerSpace不能释放某一个0bject所占据的内存return 0;

}size_t BumpPointerSpace::FreeList(Thread*, size_t, mirror::Object**) OVERRIDE {//直接返回0,说明BumpPointerSpace不能释放某一个0bject所占据的内存return 0;

}void BumpPointerSpace::Clear() {// Release the pages back to the operating system.if (!kMadviseZeroes) {memset(Begin(), 0, Limit() - Begin());}// Reset the end of the space back to the beginning, we move the end forward as we allocate objects.SetEnd(Begin());objects_allocated_.StoreRelaxed(0);bytes_allocated_.StoreRelaxed(0);growth_end_ = Limit();{MutexLock mu(Thread::Current(), block_lock_);num_blocks_ = 0;main_block_size_ = 0;}

}

RegionSpace

- RegionSpace的内存分配算法是将内存资源划分成一个个固定大小(由kRegionSize指定,默认为1MB)的内存块。每一个内存块由一个Region对象表示。进行内存分配时,先找到满足要求的Region,然后从这个Region中分配资源。

- RegionSpace也可用作线程的TLAB。不过,它的AllocNewTlab比BumpPointerSpace的AllocNewTlab要简单得多,因为RegionSpace本身就是按一个一个地Region来管理的。如此,一个线程需要TLAB的话,我们只要找到一个空闲的Region给它就好了。

class RegionSpace FINAL : public ContinuousMemMapAllocSpace {...}static constexpr size_t kRegionSize = 1 * MB;RegionSpace* RegionSpace::Create(const std::string& name, size_t capacity, uint8_t* requested_begin) {// 按1M向上对齐capacity = RoundUp(capacity, kRegionSize);std::string error_msg;std::unique_ptr<MemMap> mem_map(MemMap::MapAnonymous(name.c_str(), requested_begin, capacity, PROT_READ | PROT_WRITE, true, false, &error_msg));return new RegionSpace(name, mem_map.release());

}RegionSpace::RegionSpace(const std::string& name, MemMap* mem_map): ContinuousMemMapAllocSpace(name, mem_map, mem_map->Begin(), mem_map->End(), mem_map->End(), kGcRetentionPolicyAlwaysCollect),region_lock_("Region lock", kRegionSpaceRegionLock), time_(1U) {size_t mem_map_size = mem_map->Size();// 计算多少个Regionnum_regions_ = mem_map_size / kRegionSize;num_non_free_regions_ = 0U; // 表示已经占有的内存块个数// 创建并初始化Region数组regions_.reset(new Region[num_regions_]); uint8_t* region_addr = mem_map->Begin();for (size_t i = 0; i < num_regions_; ++i, region_addr += kRegionSize) {regions_[i] = Region(i, region_addr, region_addr + kRegionSize); // 1M内存地址}full_region_ = Region(); // 内存资源不足的内存块current_region_ = &full_region_; // 指向当前正在使用的Regionevac_region_ = nullptr;

}inline mirror::Object* RegionSpace::Alloc(Thread*, size_t num_bytes, size_t* bytes_allocated,size_t* usable_size,size_t* bytes_tl_bulk_allocated) {num_bytes = RoundUp(num_bytes, kAlignment);return AllocNonvirtual<false>(num_bytes, bytes_allocated, usable_size,bytes_tl_bulk_allocated);

}bool RegionSpace::AllocNewTlab(Thread* self) {MutexLock mu(self, region_lock_);RevokeThreadLocalBuffersLocked(self);// Retain sufficient free regions for full evacuation.if ((num_non_free_regions_ + 1) * 2 > num_regions_) {return false;}// 遍历regions数组,找到一个free的for (size_t i = 0; i < num_regions_; ++i) {Region* r = ®ions_[i];if (r->IsFree()) {r->Unfree(time_);++num_non_free_regions_;r->SetTop(r->End());// 将线程和该Region关联起来r->is_a_tlab_ = true;r->thread_ = self;self->SetTlab(r->Begin(), r->End());return true;}}return false;

}size_t RegionSpace::Free(Thread*, mirror::Object*) OVERRIDE {UNIMPLEMENTED(FATAL);return 0;

}void RegionSpace::Clear() {MutexLock mu(Thread::Current(), region_lock_);for (size_t i = 0; i < num_regions_; ++i) {Region* r = ®ions_[i];if (!r->IsFree()) {--num_non_free_regions_;}r->Clear();}current_region_ = &full_region_;evac_region_ = &full_region_;

}

Region

class RegionSpace FINAL : public ContinuousMemMapAllocSpace {// 用于描述内存块的类型,和GC相关enum class RegionType : uint8_t {kRegionTypeAll, // All types.kRegionTypeFromSpace, // From-space. To be evacuated.kRegionTypeUnevacFromSpace, // Unevacuated from-space. Not to be evacuated.kRegionTypeToSpace, // To-space.kRegionTypeNone, // None.};// 用于描述内存块的内存分配状态enum class RegionState : uint8_t {kRegionStateFree, // Free region,还没分配过内存的kRegionStateAllocated, // Allocated region,分配过一些内存的// 如3.5M大小的对象,需要4个内存块来分配;第一个类型为kRegionStateLarge,后面三个为kRegionStateLargeTail;注意第四个Region剩余的0.5M不能再用于分配其他内存kRegionStateLarge, // Large allocated (allocation larger than the region size).kRegionStateLargeTail, // Large tail (non-first regions of a large allocation).};class Region {public:Region(): // idx为内存块在regions数组中的索引idx_(static_cast<size_t>(-1)),// begin、end表示内存的起始位置,top为内存分配的水位线begin_(nullptr), top_(nullptr), end_(nullptr),state_(RegionState::kRegionStateAllocated), type_(RegionType::kRegionTypeToSpace),objects_allocated_(0), alloc_time_(0), live_bytes_(static_cast<size_t>(-1)),is_newly_allocated_(false), is_a_tlab_(false), thread_(nullptr) {}Region(size_t idx, uint8_t* begin, uint8_t* end): idx_(idx), begin_(begin), top_(begin), end_(end),state_(RegionState::kRegionStateFree), type_(RegionType::kRegionTypeNone),objects_allocated_(0), alloc_time_(0), live_bytes_(static_cast<size_t>(-1)),is_newly_allocated_(false), is_a_tlab_(false), thread_(nullptr) {}...

}

DlMallocSpace

DlMallocSpace使用开源的dlmalloc来提供具体的内存分配和释放算法。

RosAllocSpace使用了谷歌开发的rosalloc内存分配管理器。相比而言,rosalloc的用法比dlmalloc要复杂得多。而且还需要ART虚拟机中其他模块进行配合。不过,复杂的结果自然是ART虚拟机里rosalloc分配的效果要比dlmalloc更好。

class DlMallocSpace : public MallocSpace {...}DlMallocSpace* DlMallocSpace::Create(const std::string& name, size_t initial_size, size_t growth_limit, size_t capacity, uint8_t* requested_begin, bool can_move_objects) {uint64_t start_time = 0;// Memory we promise to dlmalloc before it asks for morecore.// Note: making this value large means that large allocations are unlikely to succeed as dlmalloc// will ask for this memory from sys_alloc which will fail as the footprint (this value plus the// size of the large allocation) will be greater than the footprint limit.size_t starting_size = kPageSize;MemMap* mem_map = CreateMemMap(name, starting_size, &initial_size, &growth_limit, &capacity, requested_begin);DlMallocSpace* space = CreateFromMemMap(mem_map, name, starting_size, initial_size, growth_limit, capacity, can_move_objects);return space;

}DlMallocSpace* DlMallocSpace::CreateFromMemMap(MemMap* mem_map, const std::string& name,size_t starting_size, size_t initial_size, size_t growth_limit, size_t capacity, bool can_move_objects) {// 使用dlmalloc库创建mspacevoid* mspace = CreateMspace(mem_map->Begin(), starting_size, initial_size);// Protect memory beyond the starting size. morecore will add r/w permissions when necessoryuint8_t* end = mem_map->Begin() + starting_size;// Everything is set so record in immutable structure and leaveuint8_t* begin = mem_map->Begin();return new DlMallocSpace(mem_map, initial_size, name, mspace, begin, end, begin + capacity,growth_limit, can_move_objects, starting_size);

}void* DlMallocSpace::CreateMspace(void* begin, size_t morecore_start, size_t initial_size) {void* msp = create_mspace_with_base(begin, morecore_start, false /*locked*/);return msp;

}virtual mirror::Object* Alloc(Thread* self, size_t num_bytes, size_t* bytes_allocated, size_t* usable_size, size_t* bytes_tl_bulk_allocated)OVERRIDE REQUIRES(!lock_) {return AllocNonvirtual(self, num_bytes, bytes_allocated, usable_size, bytes_tl_bulk_allocated);

}inline mirror::Object* DlMallocSpace::AllocNonvirtual(Thread* self, size_t num_bytes,size_t* bytes_allocated,size_t* usable_size,size_t* bytes_tl_bulk_allocated) {mirror::Object* obj;{MutexLock mu(self, lock_);obj = AllocWithoutGrowthLocked(self, num_bytes, bytes_allocated, usable_size, bytes_tl_bulk_allocated);}return obj;

}inline mirror::Object* DlMallocSpace::AllocWithoutGrowthLocked(Thread* /*self*/, size_t num_bytes,size_t* bytes_allocated,size_t* usable_size,size_t* bytes_tl_bulk_allocated) {mirror::Object* result = reinterpret_cast<mirror::Object*>(mspace_malloc(mspace_, num_bytes));if (LIKELY(result != nullptr)) {size_t allocation_size = AllocationSizeNonvirtual(result, usable_size);*bytes_allocated = allocation_size;*bytes_tl_bulk_allocated = allocation_size;}return result;

}size_t DlMallocSpace::Free(Thread* self, mirror::Object* ptr) {MutexLock mu(self, lock_);const size_t bytes_freed = AllocationSizeNonvirtual(ptr, nullptr);if (kRecentFreeCount > 0) {RegisterRecentFree(ptr);}mspace_free(mspace_, ptr);return bytes_freed;

}inline size_t DlMallocSpace::AllocationSizeNonvirtual(mirror::Object* obj, size_t* usable_size) {void* obj_ptr = const_cast<void*>(reinterpret_cast<const void*>(obj));size_t size = mspace_usable_size(obj_ptr);if (usable_size != nullptr) {*usable_size = size;}return size + kChunkOverhead;

}void DlMallocSpace::Clear() {size_t footprint_limit = GetFootprintLimit();madvise(GetMemMap()->Begin(), GetMemMap()->Size(), MADV_DONTNEED);live_bitmap_->Clear();mark_bitmap_->Clear();SetEnd(Begin() + starting_size_);mspace_ = CreateMspace(mem_map_->Begin(), starting_size_, initial_size_);SetFootprintLimit(footprint_limit);

}

RosAllocSpace

- 和DlMallocSpace的代码逻辑类似,RosAllocSpace中内存分配和释放的核心工作由rosalloc来完成。

- rosalloc的本质和dlmalloc一样,它是一种动态内存分配(dynamic memory allocation)的算法。只不过rosalloc不像dlmalloc那么通用(dlmalloc可以作为libc库中malloc函数的实现),它专门服务于Android系统中的ART虚拟机。

- rosalloc名称中的ros是run of slot的缩写,slot和run都是代码中的类。

art/runtime/gc/space/rosalloc_space.h

art/runtime/gc/space/rosalloc_space.cc

art/runtime/gc/space/rosalloc_space-inl.h

class RosAllocSpace : public MallocSpace {...}RosAllocSpace* RosAllocSpace::Create(const std::string& name, size_t initial_size,size_t growth_limit, size_t capacity, uint8_t* requested_begin,bool low_memory_mode, bool can_move_objects) {size_t starting_size = Heap::kDefaultStartingSize; // 一个内存页大小MemMap* mem_map = CreateMemMap(name, starting_size, &initial_size, &growth_limit, &capacity, requested_begin);RosAllocSpace* space = CreateFromMemMap(mem_map, name, starting_size, initial_size, growth_limit, capacity, low_memory_mode, can_move_objects);return space;

}RosAllocSpace* RosAllocSpace::CreateFromMemMap(MemMap* mem_map, const std::string& name, size_t starting_size, size_t initial_size, size_t growth_limit, size_t capacity, bool low_memory_mode, bool can_move_objects) {bool running_on_memory_tool = Runtime::Current()->IsRunningOnMemoryTool();allocator::RosAlloc* rosalloc = CreateRosAlloc(mem_map->Begin(), starting_size, initial_size, capacity, low_memory_mode, running_on_memory_tool);uint8_t* end = mem_map->Begin() + starting_size;uint8_t* begin = mem_map->Begin();if (running_on_memory_tool) {...} else {return new RosAllocSpace(mem_map, initial_size, name, rosalloc, begin, end, begin + capacity, growth_limit, can_move_objects, starting_size, low_memory_mode);}

}allocator::RosAlloc* RosAllocSpace::CreateRosAlloc(void* begin, size_t morecore_start, size_t initial_size, size_t maximum_size, bool low_memory_mode, bool running_on_memory_tool) {errno = 0;// 创建RosAlloc对象,这里就是rosalloc模块了allocator::RosAlloc* rosalloc = new art::gc::allocator::RosAlloc(begin, morecore_start, maximum_size, low_memory_mode ? art::gc::allocator::RosAlloc::kPageReleaseModeAll : art::gc::allocator::RosAlloc::kPageReleaseModeSizeAndEnd, running_on_memory_tool);if (rosalloc != nullptr) {rosalloc->SetFootprintLimit(initial_size);}return rosalloc;

}mirror::Object* Alloc(Thread* self, size_t num_bytes, size_t* bytes_allocated, size_t* usable_size, size_t* bytes_tl_bulk_allocated) OVERRIDE {return AllocNonvirtual(self, num_bytes, bytes_allocated, usable_size, bytes_tl_bulk_allocated);

}mirror::Object* AllocNonvirtual(Thread* self, size_t num_bytes, size_t* bytes_allocated, size_t* usable_size, size_t* bytes_tl_bulk_allocated) {return AllocCommon(self, num_bytes, bytes_allocated, usable_size, bytes_tl_bulk_allocated);

}template<bool kThreadSafe>

inline mirror::Object* RosAllocSpace::AllocCommon(Thread* self, size_t num_bytes, size_t* bytes_allocated, size_t* usable_size, size_t* bytes_tl_bulk_allocated) {size_t rosalloc_bytes_allocated = 0;size_t rosalloc_usable_size = 0;size_t rosalloc_bytes_tl_bulk_allocated = 0;if (!kThreadSafe) {Locks::mutator_lock_->AssertExclusiveHeld(self);}// 分配内存mirror::Object* result = reinterpret_cast<mirror::Object*>(rosalloc_->Alloc<kThreadSafe>(self, num_bytes, &rosalloc_bytes_allocated, &rosalloc_usable_size, &rosalloc_bytes_tl_bulk_allocated));if (LIKELY(result != nullptr)) {*bytes_allocated = rosalloc_bytes_allocated;if (usable_size != nullptr) {*usable_size = rosalloc_usable_size;}*bytes_tl_bulk_allocated = rosalloc_bytes_tl_bulk_allocated;}return result;

}size_t RosAllocSpace::Free(Thread* self, mirror::Object* ptr) {if (kRecentFreeCount > 0) {MutexLock mu(self, lock_);RegisterRecentFree(ptr);}return rosalloc_->Free(self, ptr);

}

LargeObjectSpace

-

什么时候使用LargeObjectSpace:

如果一个对象所需内存大小超过设定的阈值(由Heap类的large_object_threshold_成员变量指示),默认值为kDefaultLargeObjectThreshold(大小为3个内存页,即12KB)。同时,该对象的类型必须是Java基础类型的数组(比如int[]、boolean[])或当Java对象的类型是java.lang.String时。 -

LargeObjectSpace有两个派生类,分别是LargeObjectMapSpace和FreeListSpace。

LargeObjectMapSpace和FreeListSpace提供了两种不同的内存分配算法。ART虚拟机中要么使用LargeObjectMapSpace,要么使用FreeListSpace。

在堆创建时初始化large_object_space时候根据large_object_space_type选择使用哪一个。 -

LargeObjectMapSpace在分配内存时会为每一次分配都mmap一块内存空间。

LargeObjectMapSpace根本就没有什么算法,有需要它就直接从操作系统中映射一块内存空间。所以,它比BumpPointerSpace的内存分配算法还要简单。

art/runtime/gc/space/large_object_space.h

art/runtime/gc/space/large_object_space.cc

// A discontinuous large object space implemented by individual mmap/munmap calls.

class LargeObjectMapSpace : public LargeObjectSpace {

}// A continuous large object space with a free-list to handle holes.

class FreeListSpace FINAL : public LargeObjectSpace {

}LargeObjectMapSpace::LargeObjectMapSpace(const std::string& name): LargeObjectSpace(name, nullptr, nullptr),lock_("large object map space lock", kAllocSpaceLock) {}LargeObjectMapSpace* LargeObjectMapSpace::Create(const std::string& name) {if (Runtime::Current()->IsRunningOnMemoryTool()) {...} else {return new LargeObjectMapSpace(name);}

}mirror::Object* LargeObjectMapSpace::Alloc(Thread* self, size_t num_bytes, size_t* bytes_allocated, size_t* usable_size, size_t* bytes_tl_bulk_allocated) {std::string error_msg;MemMap* mem_map = MemMap::MapAnonymous("large object space allocation", nullptr, num_bytes, PROT_READ | PROT_WRITE, true, false, &error_msg);mirror::Object* const obj = reinterpret_cast<mirror::Object*>(mem_map->Begin());MutexLock mu(self, lock_);large_objects_.Put(obj, LargeObject {mem_map, false /* not zygote */});const size_t allocation_size = mem_map->BaseSize();begin_ = std::min(begin_, reinterpret_cast<uint8_t*>(obj));uint8_t* obj_end = reinterpret_cast<uint8_t*>(obj) + allocation_size;if (end_ == nullptr || obj_end > end_) {end_ = obj_end;}*bytes_allocated = allocation_size;if (usable_size != nullptr) {*usable_size = allocation_size;}*bytes_tl_bulk_allocated = allocation_size;num_bytes_allocated_ += allocation_size;total_bytes_allocated_ += allocation_size;++num_objects_allocated_;++total_objects_allocated_;return obj;

}FreeListSpace* FreeListSpace::Create(const std::string& name, uint8_t* requested_begin, size_t size) {std::string error_msg;MemMap* mem_map = MemMap::MapAnonymous(name.c_str(), requested_begin, size, PROT_READ | PROT_WRITE, true, false, &error_msg);return new FreeListSpace(name, mem_map, mem_map->Begin(), mem_map->End());

}FreeListSpace::FreeListSpace(const std::string& name, MemMap* mem_map, uint8_t* begin, uint8_t* end): LargeObjectSpace(name, begin, end), mem_map_(mem_map), lock_("free list space lock", kAllocSpaceLock) {const size_t space_capacity = end - begin;free_end_ = space_capacity;const size_t alloc_info_size = sizeof(AllocationInfo) * (space_capacity / kAlignment);std::string error_msg;allocation_info_map_.reset(MemMap::MapAnonymous("large object free list space allocation info map", nullptr, alloc_info_size, PROT_READ | PROT_WRITE, false, false, &error_msg));allocation_info_ = reinterpret_cast<AllocationInfo*>(allocation_info_map_->Begin());

}mirror::Object* FreeListSpace::Alloc(Thread* self, size_t num_bytes, size_t* bytes_allocated,size_t* usable_size, size_t* bytes_tl_bulk_allocated) {MutexLock mu(self, lock_);const size_t allocation_size = RoundUp(num_bytes, kAlignment);AllocationInfo temp_info;temp_info.SetPrevFreeBytes(allocation_size);temp_info.SetByteSize(0, false);AllocationInfo* new_info;// Find the smallest chunk at least num_bytes in size.auto it = free_blocks_.lower_bound(&temp_info);if (it != free_blocks_.end()) {AllocationInfo* info = *it;free_blocks_.erase(it);// Fit our object in the previous allocation info free space.new_info = info->GetPrevFreeInfo();// Remove the newly allocated block from the info and update the prev_free_.info->SetPrevFreeBytes(info->GetPrevFreeBytes() - allocation_size);if (info->GetPrevFreeBytes() > 0) {AllocationInfo* new_free = info - info->GetPrevFree();new_free->SetPrevFreeBytes(0);new_free->SetByteSize(info->GetPrevFreeBytes(), true);// If there is remaining space, insert back into the free set.free_blocks_.insert(info);}} else {// Try to steal some memory from the free space at the end of the space.if (LIKELY(free_end_ >= allocation_size)) {// Fit our object at the start of the end free block.new_info = GetAllocationInfoForAddress(reinterpret_cast<uintptr_t>(End()) - free_end_);free_end_ -= allocation_size;} else {return nullptr;}}*bytes_allocated = allocation_size;if (usable_size != nullptr) {*usable_size = allocation_size;}*bytes_tl_bulk_allocated = allocation_size;++num_objects_allocated_;++total_objects_allocated_;num_bytes_allocated_ += allocation_size;total_bytes_allocated_ += allocation_size;mirror::Object* obj = reinterpret_cast<mirror::Object*>(GetAddressForAllocationInfo(new_info));// We always put our object at the start of the free block, there cannot be another free block before it.new_info->SetPrevFreeBytes(0);new_info->SetByteSize(allocation_size, false);return obj;

}